Microsoft is quietly advancing its Copilot app, gradually expanding both its visible features and those still gated behind internal flags. One of the biggest public changes is the rollout of native image generation powered by OpenAI's GPT-4o model. It replaces DALL-E 3, letting users on every platform create higher-quality visuals directly within the app, without third-party integrations or separate web tools.

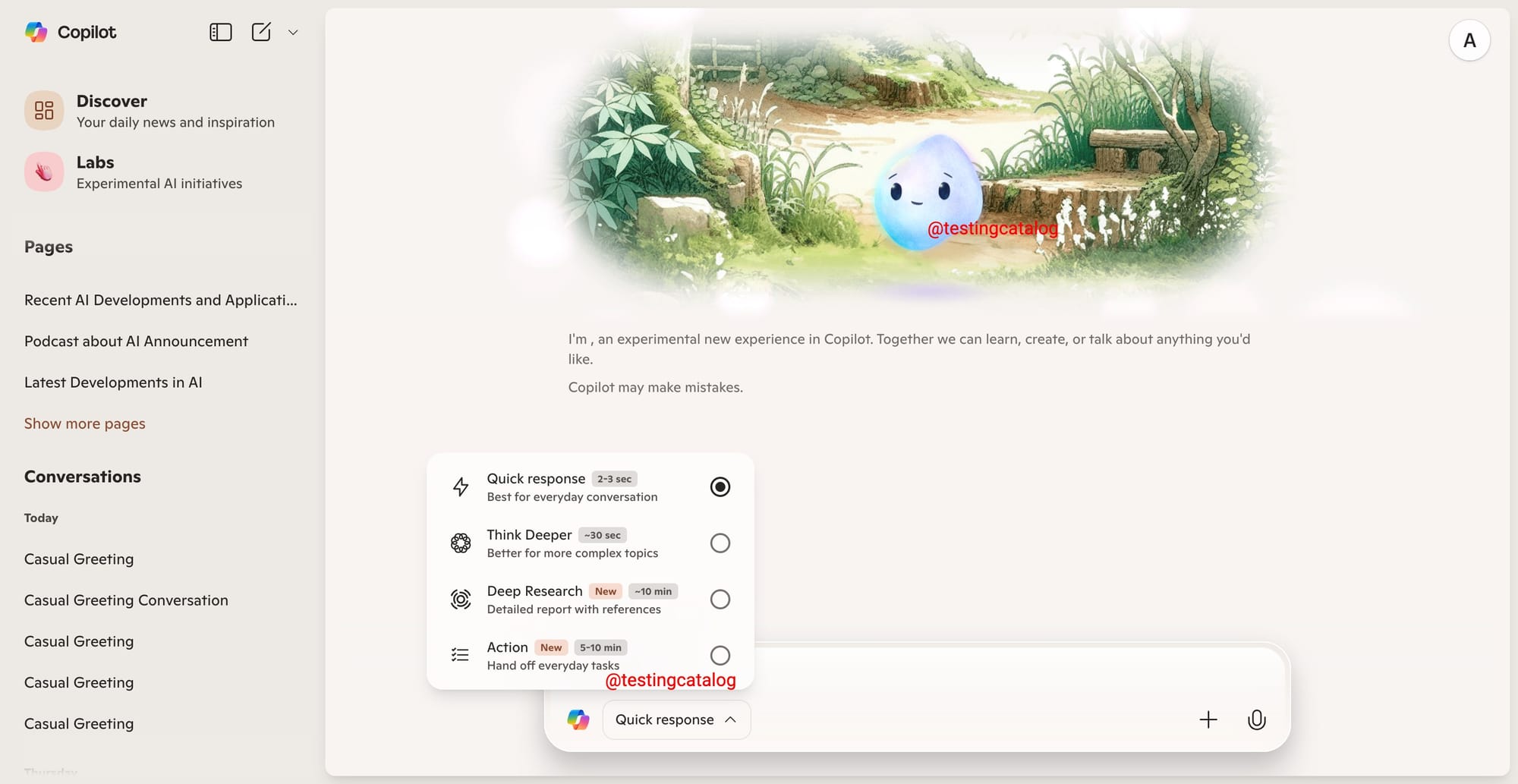

A more intriguing development is the hidden "Action" feature, previously teased as a tool that lets Copilot take over everyday computing tasks. The option now appears in the app's code and in the Labs tab, where it is still labeled "coming soon," and it suggests that users will be able to hand tasks off to Copilot for sessions lasting 5 to 10 minutes. Early indicators suggest it is being built specifically for Windows environments, reinforcing Microsoft's ecosystem-first strategy. When it eventually launches, access will likely be limited at first, possibly to select testers or Copilot Pro subscribers.

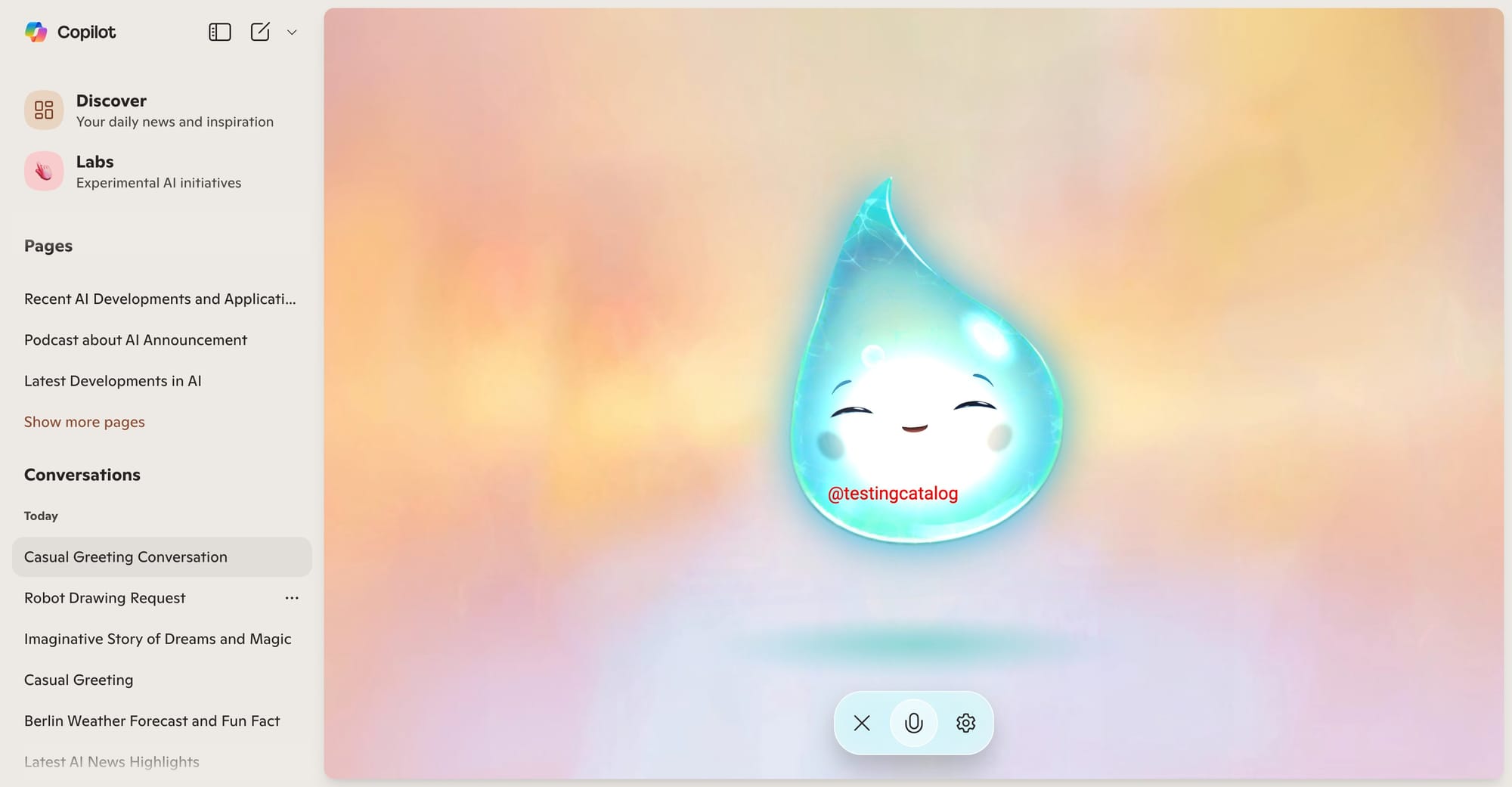

Visual identity updates continue as well. Copilot characters, AI personas with distinct appearances, are evolving. In voice mode, the character now fills the entire screen instead of appearing in the smaller conversational UI. The still-unnamed fourth character, which resembles a piece of bubblegum or a cloud, has gained a more defined look. Its final form remains unclear, much as Erin's appearance changed over time from a lava shape to a mushroom. These characters serve as a branding layer and possibly as functional avatars, but further refinement appears likely before full release.

These updates reflect Microsoft's ongoing ambition to blur the boundaries between productivity, assistance, and personality within its Copilot AI ecosystem.