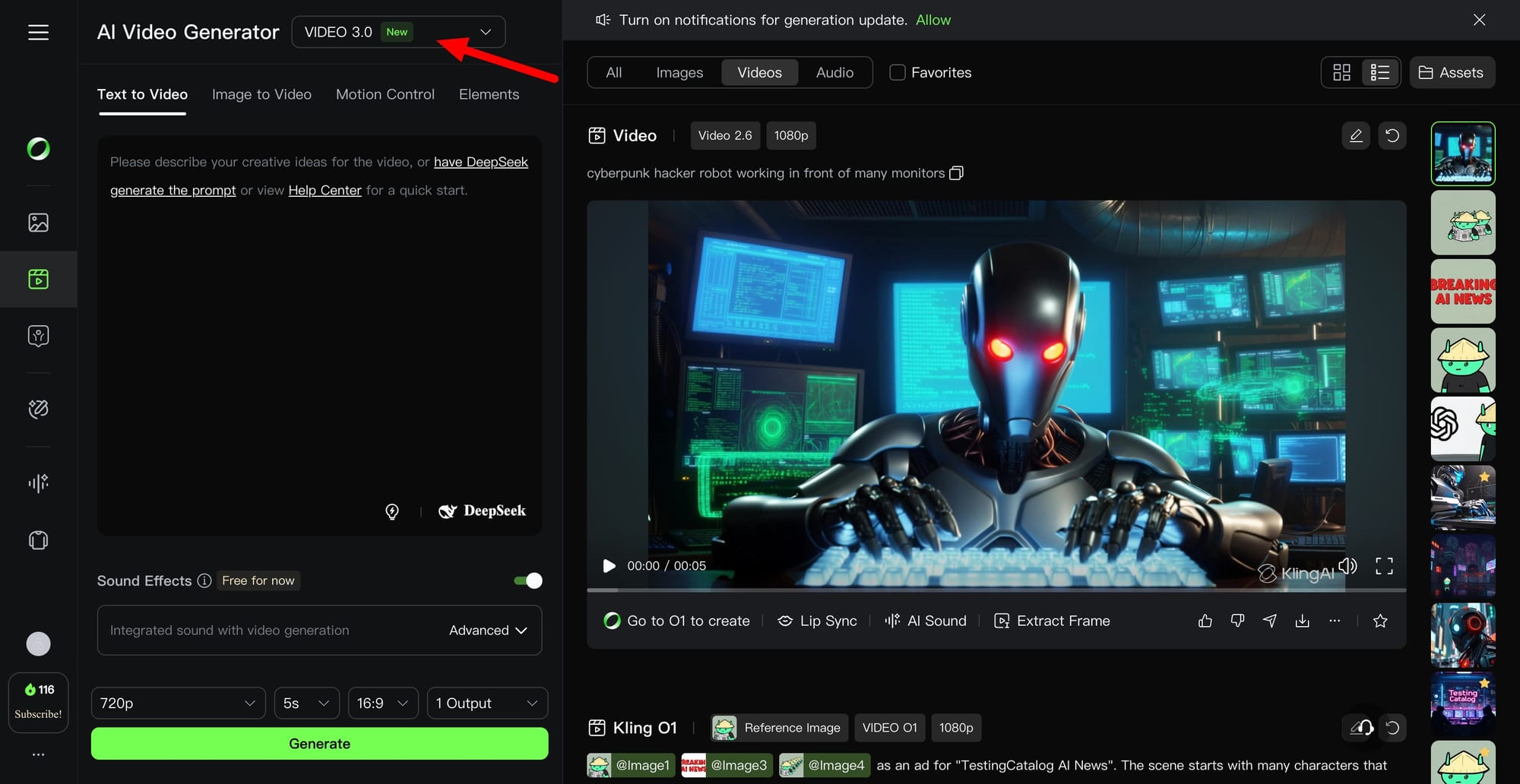

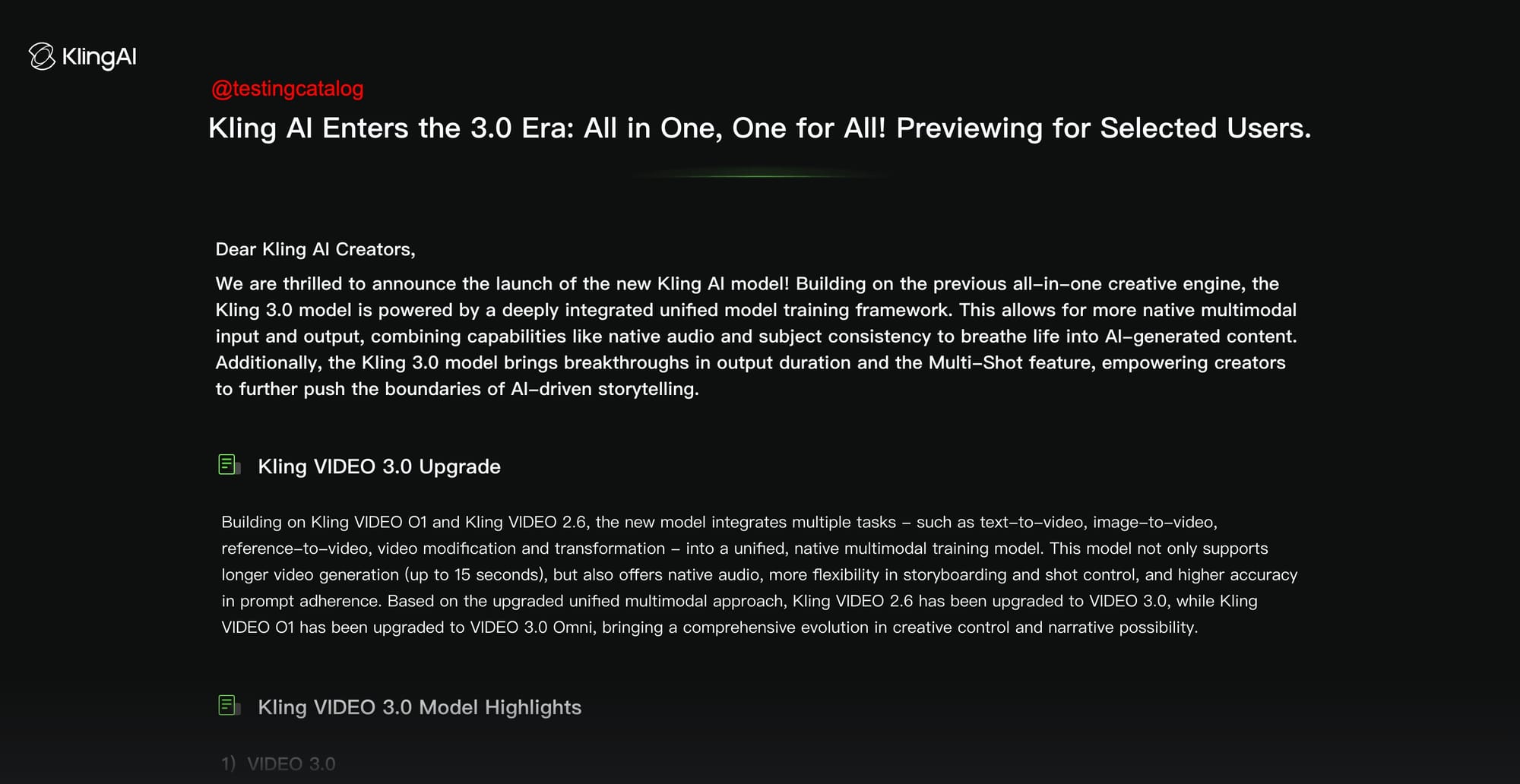

Kling AI is preparing to enter its “3.0 era” with a new unified video model that is not publicly available yet and is being previewed to a limited set of users. The positioning is clear: instead of splitting workflows across separate tools or model lines, Kling is aiming for an all-in-one creative engine that can handle text-to-video, image-to-video, reference-based generation, and video modification under one native multimodal training framework.

Kling 3.0 Model is coming!

— Kling AI (@Kling_ai) January 31, 2026

Now in exclusive early access. Stay tuned for what’s next. 🚀 pic.twitter.com/XcyZBRQPCz

On the product side, Kling VIDEO 3.0 is described as a consolidation of the previous VIDEO 2.6 capabilities and the earlier VIDEO 01 line, rolling them into a single “VIDEO 3.0” family. The main promise is more control without forcing creators into complex editing pipelines. Kling highlights longer single-generation clips, with output duration reaching up to 15 seconds and flexible control from 3 to 15 seconds, which is framed as enabling fuller narrative beats rather than short fragments stitched together externally.

A core feature teased for VIDEO 3.0 is Multi-Shot, presented as an “AI Director” style storyboard workflow. The idea is that the model can interpret scene coverage and shot patterns directly from a prompt, then adjust camera angles and compositions automatically, from basic shot–reverse-shot dialogue setups to more complex sequences. Kling also claims this reduces the need for manual cutting and editing, since the output is intended to arrive as a more “cinematic” sequence in a single generation.

Kling is also emphasizing subject consistency as a major upgrade, especially for image-to-video and reference-driven work. The preview material describes a system that can lock in “core elements” of a character or scene so that camera movement and scene development do not cause the subject to drift. This is paired with support for multi-image references and video references as “Elements,” which appears to be Kling’s term for reusable character or asset anchors that can be brought into new generations.

Audio is another pillar of the planned release. Kling says VIDEO 3.0 upgrades native audio output with character-specific voice referencing, aiming to reduce ambiguity in multi-character scenes by letting creators identify who is speaking. It also claims broader language support, listing languages like English, Chinese, Japanese, Korean, and Spanish, and positions this as enabling more natural bilingual or multilingual scenes where dialogue and lip motion remain coherent.

Beyond motion and audio, the preview notes a “native-level text output” capability focused on precise lettering. Kling frames this as useful for signage, captions, and advertising-style layouts, where legible text is often a failure point in generative video. If it works as described, it would move Kling closer to being a production-oriented tool for commerce and marketing assets, not just stylized clips.

The VIDEO 3.0 Omni variant is positioned as the more reference-heavy branch. Kling claims it improves subject consistency, prompt adherence, and output stability versus its earlier reference model. It also expands “Elements 3.0” to include video-character reference with both visual and audio capture. In practice, Kling suggests a workflow where users upload a short clip of a character to extract appearance traits, and optionally provide a voice clip to extract vocal characteristics, then reuse those Elements across scenes for continuity.

Kling also highlights more granular shot controls in the storyboard flow, including duration, shot size, perspective, narrative content, and camera movement per shot, with the stated goal of smoother transitions and more structured multi-shot sequences. That points to Kling trying to compete not only on model quality, but also on creative direction tooling that sits between pure prompting and full NLE editing.

On the company side, Kling is presenting this as an architectural step forward: a native framework for multi-task, all-purpose video generation, plus cross-modal audio modeling and a reference system meant to decouple and recombine subjects across scenes. The broader bet is that creators want longer, more coherent sequences with consistent characters and integrated sound, and they want to achieve that with fewer external steps.

For now, the key takeaway is availability: Kling AI frames this as an upcoming release, previewed for selected users, with broader access planned later. The details shared so far read like a roadmap for a unified “video OS” inside Kling’s product, centered on longer generations, Multi-Shot storyboarding, stable character references, and integrated audio.