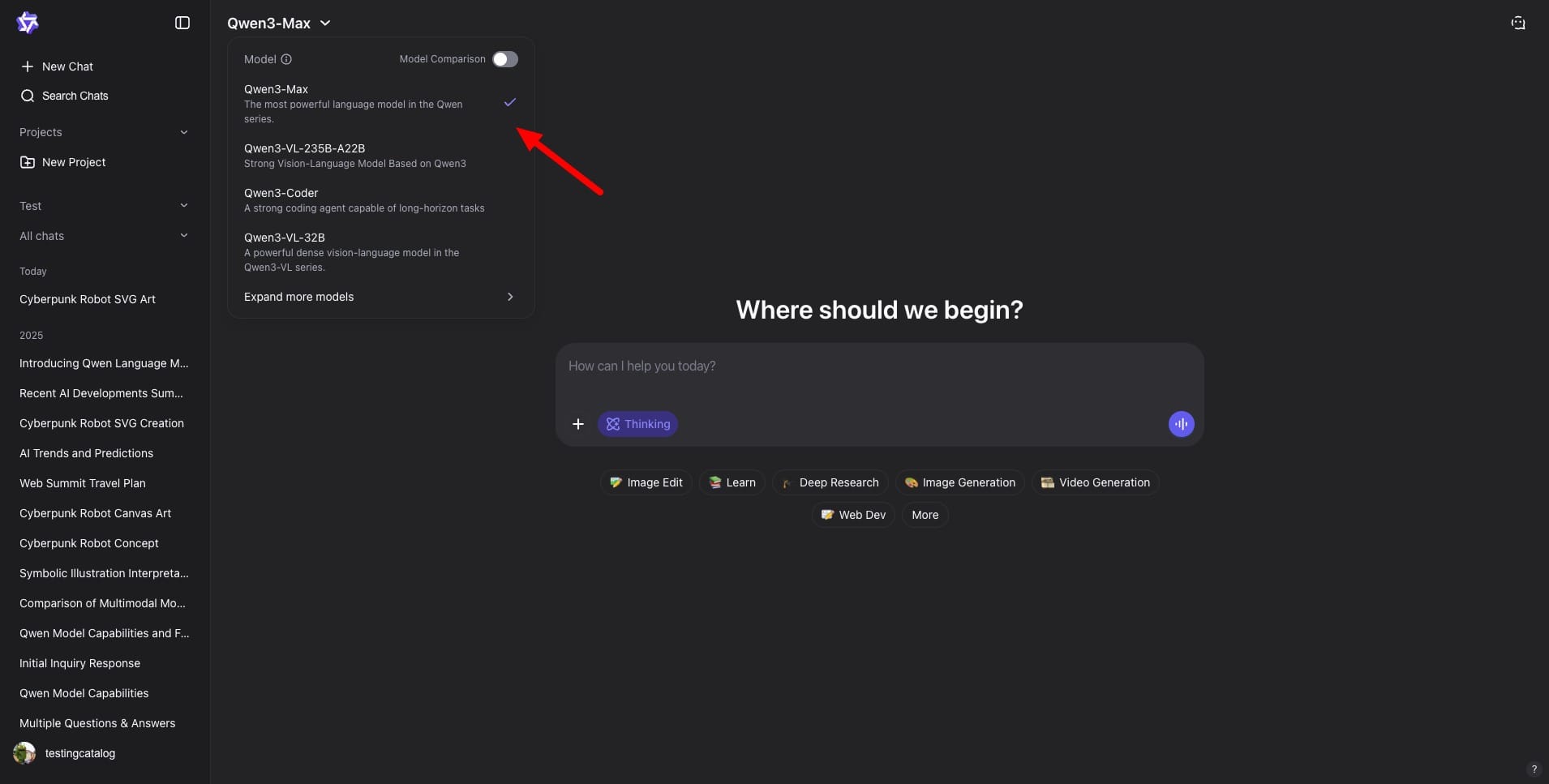

Qwen has introduced Qwen3-Max-Thinking, a flagship reasoning model built for complex math, coding, and multi-step agent workflows. The model is available directly in Qwen Chat and is offered to developers through Alibaba Cloud’s Model Studio for integration into applications. There, teams can reserve deliberate “thinking” runs for tasks where accuracy is crucial and switch back to faster responses for routine prompts.
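A minimal sketch of how an application might route prompts between the two modes. It assumes an OpenAI-style chat payload and a boolean `enable_thinking` request field of the kind Alibaba Cloud documents for hybrid Qwen3 models; confirm both against the current Model Studio documentation for this snapshot. The keyword list, `needs_thinking`, `build_request`, and `Route` are hypothetical names used only to illustrate spending extra compute where accuracy matters.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Snapshot tag named in the article; check that it is enabled for your account and region.
MODEL = "qwen3-max-2026-01-23"

# Hypothetical trigger words; a production router might use a classifier or an explicit user toggle.
HARD_TASK_HINTS = {"prove", "debug", "calculate", "derive", "verify"}


@dataclass
class Route:
    thinking: bool
    payload: dict


def needs_thinking(prompt: str) -> bool:
    """Crude heuristic: treat prompts containing a 'hard task' word as accuracy-critical."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return not HARD_TASK_HINTS.isdisjoint(words)


def build_request(prompt: str, force_thinking: Optional[bool] = None) -> Route:
    """Build an OpenAI-style chat completion payload for one prompt.

    ASSUMPTION: the endpoint accepts a boolean `enable_thinking` field next to
    the standard `model` and `messages` fields.
    """
    # An explicit caller choice overrides the heuristic.
    thinking = needs_thinking(prompt) if force_thinking is None else force_thinking
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "enable_thinking": thinking,
    }
    return Route(thinking=thinking, payload=payload)


routine = build_request("Summarize this email in one line.")
hard = build_request("Debug this failing unit test.")
print(routine.thinking, hard.thinking)
```

When sending such a payload with the official `openai` Python SDK, fields outside the standard schema (here the assumed `enable_thinking` flag) would go in the `extra_body` argument of `client.chat.completions.create`.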

> 🚀 Introducing Qwen3-Max-Thinking, our most capable reasoning model yet. Trained with massive scale and advanced RL, it delivers strong performance across reasoning, knowledge, tool use, and agent capabilities.
>
> ✨ Key innovations:
>
> ✅ Adaptive tool-use: intelligently leverages…
>
> Qwen (@Alibaba_Qwen), January 26, 2026

In Model Studio, the Qwen3-Max line offers a 262,144-token context window. The latest snapshot (qwen3-max-2026-01-23) is described as merging thinking and non-thinking capabilities into a single model. In thinking mode, the model can interleave tool calls with its reasoning, drawing on built-in web search, webpage content extraction, and a code interpreter. This setup targets questions that require gathering evidence, parsing it, and running calculations before an answer is produced.
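The interleaving described above can be pictured as a loop in which the model alternates between reasoning steps and tool requests until it commits to an answer. The sketch below is a toy, offline illustration of that control flow, not Qwen’s implementation: `ScriptedModel`, the tool stand-ins, `toy_growth_calc`, the example question, and the rough four-characters-per-token estimate are all hypothetical. Only the 262,144-token limit comes from the article. Because the article describes these tools as built in, a real application would likely not execute them itself.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

CONTEXT_LIMIT = 262_144  # Qwen3-Max context window in tokens, per the article


def estimate_tokens(text: str) -> int:
    # Hypothetical rough estimate (~4 characters per token); use the real tokenizer in practice.
    return max(1, len(text) // 4)


def toy_growth_calc(args: str) -> str:
    # Toy stand-in for a code interpreter: percentage growth from "old,new".
    old, new = (float(x) for x in args.split(","))
    return f"{(new - old) / old * 100:.1f}%"


# Hypothetical stand-ins for the built-in tools the article lists.
TOOLS: Dict[str, Callable[[str], str]] = {
    "web_search": lambda query: f"top hit for '{query}': https://example.com/report",
    "extract_page": lambda url: f"text of {url}: revenue rose from 120 to 150",
    "code_interpreter": toy_growth_calc,
}


@dataclass
class Step:
    thought: str
    tool: Optional[str] = None
    tool_input: str = ""
    answer: Optional[str] = None


class ScriptedModel:
    """Hypothetical model that replays a fixed plan instead of reasoning."""

    def __init__(self, steps: List[Step]) -> None:
        self._steps = iter(steps)

    def next_step(self, transcript: List[str]) -> Step:
        # A real model would condition on the transcript; this one ignores it.
        return next(self._steps)


def run_agent(model: ScriptedModel, question: str, max_steps: int = 10) -> Tuple[str, List[str]]:
    transcript = [f"user: {question}"]
    for _ in range(max_steps):
        step = model.next_step(transcript)
        transcript.append(f"thought: {step.thought}")
        if step.answer is not None:
            return step.answer, transcript
        if step.tool not in TOOLS:
            raise ValueError(f"unknown tool: {step.tool}")
        # The tool result goes back into the transcript before the next reasoning step.
        observation = TOOLS[step.tool](step.tool_input)
        transcript.append(f"{step.tool}({step.tool_input}) -> {observation}")
        if sum(estimate_tokens(line) for line in transcript) > CONTEXT_LIMIT:
            raise RuntimeError("transcript no longer fits in the context window")
    raise RuntimeError("no answer within max_steps")


plan = [
    Step("Find a source for the figures.", tool="web_search", tool_input="ACME annual revenue"),
    Step("Read the report.", tool="extract_page", tool_input="https://example.com/report"),
    Step("Compute the growth rate.", tool="code_interpreter", tool_input="120,150"),
    Step("The growth rate is known.", answer="Revenue grew 25.0%."),
]
answer, log = run_agent(ScriptedModel(plan), "How fast did ACME's revenue grow?")
print(answer)
```

Each tool observation is appended before the next thought, which is what lets later reasoning steps build on evidence gathered earlier in the same run.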

Alibaba Cloud’s Qwen team has been expanding the Qwen3 family across chat and cloud APIs, targeting developers and enterprises that need long-context reasoning and tool-using agents. Early testers report that the “thinking” variant excels when tasks require tool use or deep verification, though its day-to-day benefit varies with the mix of prompts and the amount of test-time compute allocated.