Moonshot AI has officially launched Kimi K2.5, its latest open-source multimodal model, available through Kimi.com, the Kimi App, the API, and Kimi Code. The release targets developers, enterprises, and AI researchers seeking advanced coding, vision, and agentic capabilities.

In a January 27, 2026 post, Kimi.ai (@Kimi_Moonshot) shared a short video from founder Zhilin Yang, noting that it was his first time speaking on camera like this and that "he really wanted to share Kimi K2.5 with you!" pic.twitter.com/2uDSOjCjly

K2.5 introduces a new agent swarm mechanism in which up to 100 sub-agents work in parallel across workflows of up to 1,500 tool calls, cutting execution time for complex tasks by as much as 4.5 times compared with a single-agent setup. The feature is currently in beta for high-tier paid users, with broader access through the API and integration into popular IDEs.
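The article does not explain how the 4.5x figure arises, or why 100 sub-agents do not yield a 100x speedup. As a rough sketch, assuming a workflow made of independent tool calls plus some serial work (planning, merging results), the Python below estimates wall-clock speedup two ways: greedy longest-first scheduling of tool calls onto sub-agents, and Amdahl's law. The function names, call durations, and the 80% parallel fraction are all hypothetical, not Moonshot's data or method.

```python
import heapq


def parallel_makespan(durations: list[float], num_agents: int) -> float:
    """Wall-clock time when tool calls are spread over sub-agents.

    Greedy longest-processing-time-first (LPT) scheduling: each call goes to
    whichever sub-agent currently has the least work queued.
    """
    if num_agents < 1:
        raise ValueError("num_agents must be at least 1")
    if not durations:
        return 0.0
    # One load counter per sub-agent that will actually receive work.
    loads = [0.0] * min(num_agents, len(durations))
    heapq.heapify(loads)
    for d in sorted(durations, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + d)
    return max(loads)


def amdahl_speedup(parallel_fraction: float, num_agents: int) -> float:
    """Amdahl's law: the serial share of a workflow caps the achievable speedup."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_agents)


if __name__ == "__main__":
    calls = [1.0] * 1500  # hypothetical: 1,500 tool calls of 1 time unit each
    # Ideal case, every call independent: serial time / parallel time.
    print(sum(calls) / parallel_makespan(calls, 100))  # prints 100.0
    # Hypothetical workflow where only 80% of the work can run in parallel.
    print(round(amdahl_speedup(0.8, 100), 2))  # prints 4.81
```

Even with 100 sub-agents, a modest serial share keeps end-to-end speedup in single digits. This is consistent with, but not evidence for, a reported gain of up to 4.5x.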

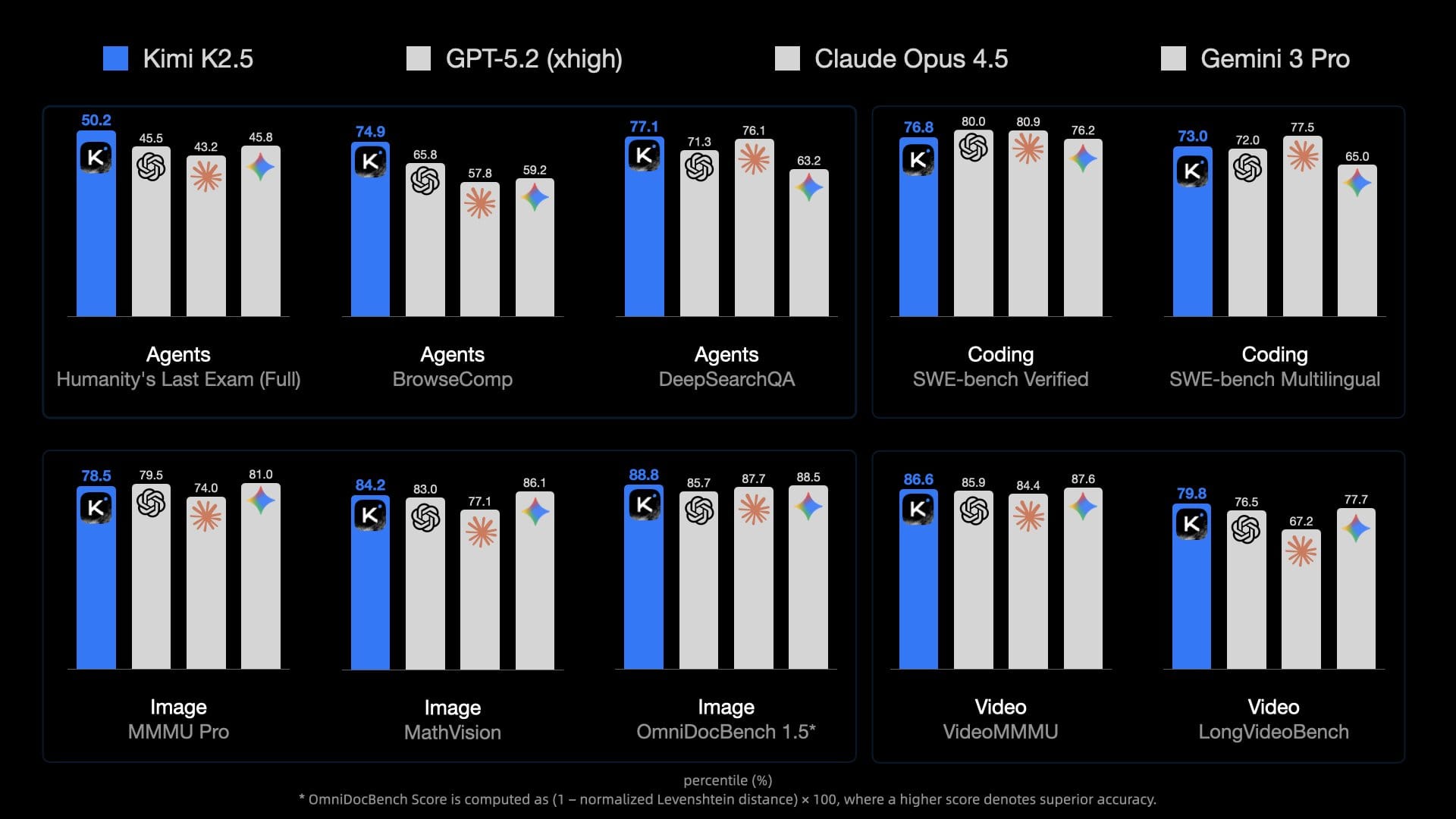

Built on continued pretraining over 15 trillion visual and text tokens, Kimi K2.5 achieves state-of-the-art performance in front-end and multi-language code generation, vision-to-code tasks, and visual debugging. Compared with earlier models such as Kimi K2, the new model shows consistent improvements on end-to-end tasks, including:

- Document creation
- Spreadsheet modeling
- Large-scale office work

Benchmarks indicate strong results at a lower cost than leading alternatives.

In a January 27, 2026 post, Kimi Product (@KimiProduct) shared a one-shot "video to code" result from Kimi K2.5, noting that it clones not only a website but also all of its visual interactions and UX designs. According to the post, no detailed description is needed: simply take a screen recording and ask Kimi, "Clone this website with all the UX designs."… pic.twitter.com/g6Ov4aDQi6

Moonshot AI developed Kimi K2.5 using Parallel-Agent Reinforcement Learning, which trains an orchestrator to dynamically create and coordinate sub-agents for distributed task execution. The approach targets the bottleneck of sequential, single-agent execution and positions Moonshot AI as a competitive force in open-source AGI research.
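The sketch below illustrates only the orchestration pattern described above, not the reinforcement-learning training behind it. An orchestrator splits a task into subtasks and launches one sub-agent per subtask concurrently, with at most 100 running at once, matching the article's sub-agent cap. `plan_subtasks` and `run_subagent` are hypothetical stand-ins. In Kimi K2.5 the split is decided by the trained orchestrator, and each sub-agent would be a model making real tool calls rather than sleeping.

```python
import asyncio

MAX_SUBAGENTS = 100  # concurrency cap, taken from the article's "up to 100 sub-agents"


async def run_subagent(subtask: str, limiter: asyncio.Semaphore) -> str:
    """Hypothetical sub-agent: stands in for a model instance that calls tools."""
    async with limiter:  # wait for a free sub-agent slot
        await asyncio.sleep(0.01)  # placeholder for tool-call latency
        return f"done: {subtask}"


def plan_subtasks(task: str, n: int) -> list[str]:
    """Hypothetical planner; a trained orchestrator would choose this split itself."""
    return [f"{task} / part {i}" for i in range(n)]


async def orchestrate(task: str, n_parts: int) -> list[str]:
    limiter = asyncio.Semaphore(MAX_SUBAGENTS)
    subtasks = plan_subtasks(task, n_parts)
    # Launch one sub-agent per subtask; gather returns results in input order.
    results = await asyncio.gather(*(run_subagent(s, limiter) for s in subtasks))
    return list(results)


if __name__ == "__main__":
    out = asyncio.run(orchestrate("research report", 5))
    print(out[0])  # prints "done: research report / part 0"
```

A semaphore, rather than fixed batches of 100, lets a waiting sub-agent start as soon as any running one finishes.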