Microsoft has announced the public preview of its Agent Framework, a unified open-source SDK and runtime for building, observing, and governing multi-agent AI systems. The release targets developers and enterprise organizations that want to orchestrate sophisticated AI agents at scale while maintaining strong governance and observability. The framework brings together AutoGen, a Microsoft Research multi-agent project, and the enterprise-oriented Semantic Kernel SDK, with the aim of offering a direct path from local experimentation to deployment on Azure AI Foundry.

> Announcing Microsoft Agent Framework in Azure AI Foundry.
>
> As agentic AI adoption accelerates, managing multi-agent systems is harder than ever. The framework helps devs build, observe, and govern responsibly, at scale.
>
> Learn more: https://t.co/cklPdJtNgg
>
> Microsoft Azure (@Azure), October 1, 2025

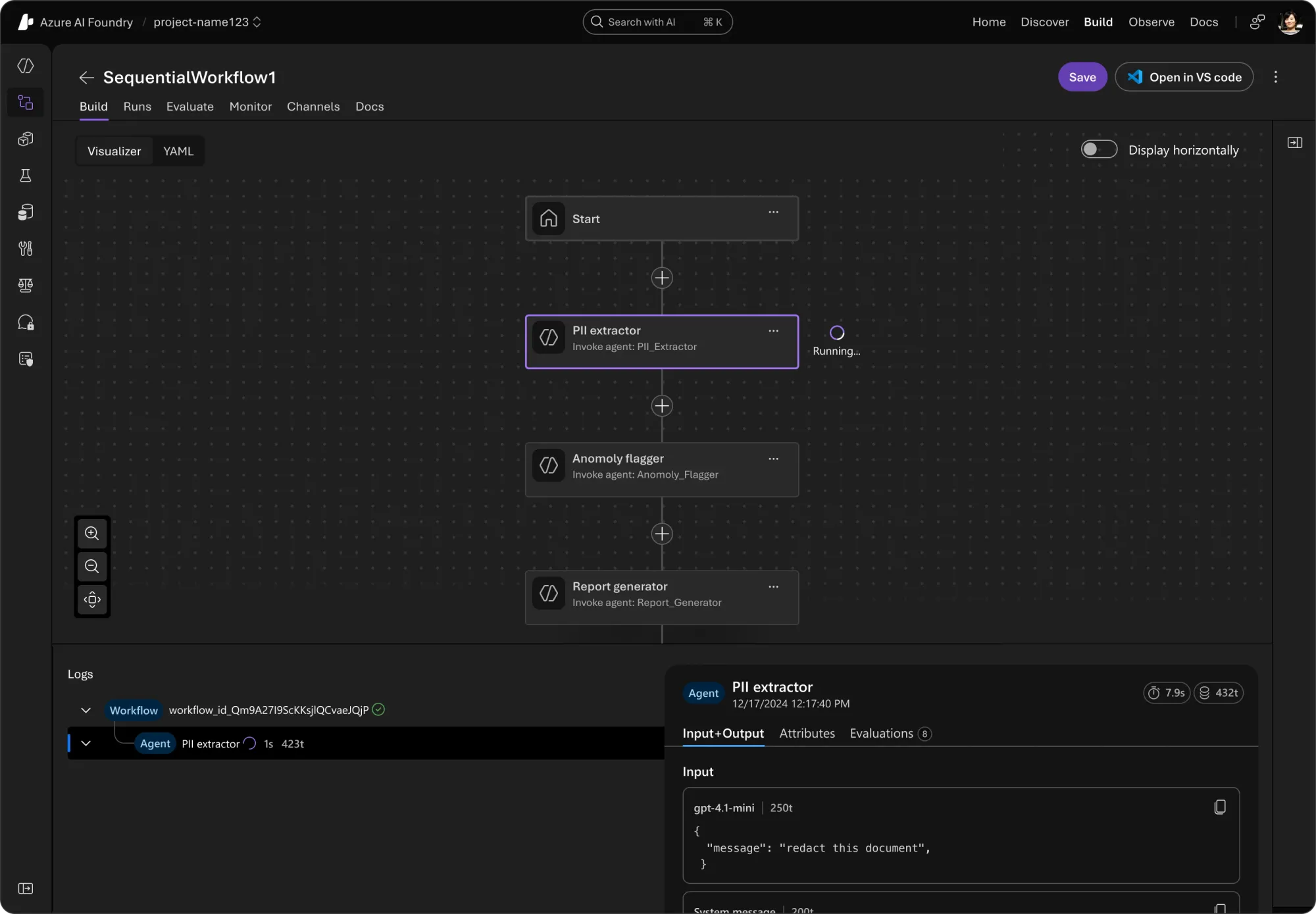

The Agent Framework is available now in public preview. It supports integration with external APIs through OpenAPI, collaboration between agents running on different runtimes through the Agent2Agent (A2A) protocol, and dynamic tool connections through the Model Context Protocol (MCP). New capabilities in Azure AI Foundry include multi-agent workflows, which let developers coordinate complex business processes with persistent state and author those workflows visually in VS Code or Azure AI Foundry. Microsoft also announced the general availability of the Voice Live API, which provides real-time speech-to-speech by combining speech-to-text, generative models, text-to-speech, avatars, and conversation features in a single low-latency pipeline.
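To make "dynamic tool connections" concrete, here is a minimal sketch of the messages an MCP client sends. It does not use the Agent Framework's own API. It only shows the protocol level: MCP messages are JSON-RPC 2.0, and a client first asks a server which tools it exposes (`tools/list`), then invokes one by name (`tools/call`). The tool name `get_weather` and its `city` argument are hypothetical, and the transport layer (stdio or HTTP) is omitted.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request ids: JSON-RPC 2.0 matches each response to its request by id.
_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


# Step 1: discover at runtime which tools the server offers.
list_req = jsonrpc_request("tools/list")

# Step 2: call a discovered tool by name with structured arguments.
# "get_weather" and "city" are hypothetical, for illustration only.
call_req = jsonrpc_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Seattle"}},
)

print(list_req)
print(call_req)
```

Because tools are discovered through `tools/list` rather than hard-coded, an agent can pick up new capabilities whenever a server adds them, which is the property the announcement refers to as dynamic.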

Microsoft’s approach addresses the growing complexity of agentic AI systems, with a focus on compliance, reliability, and responsible AI. Companies including KPMG, Commerzbank, Citrix, TCS, Sitecore, and Elastic are already integrating the new tools and report reduced operational friction and improved scalability. Microsoft differentiates the framework from competing offerings through its support for open standards, multi-framework observability, and extensibility, positioning itself as a provider of responsible, enterprise-grade AI orchestration.