Arena has announced Code Arena, an evaluation platform designed to assess AI coding models under real-world conditions. It is publicly available and aimed at developers, researchers, model builders, and knowledge workers interested in AI-assisted software development. Unlike traditional benchmarks that focus solely on whether code is correct, Code Arena records and evaluates the entire development cycle: planning, iterative building, debugging, and refinement.

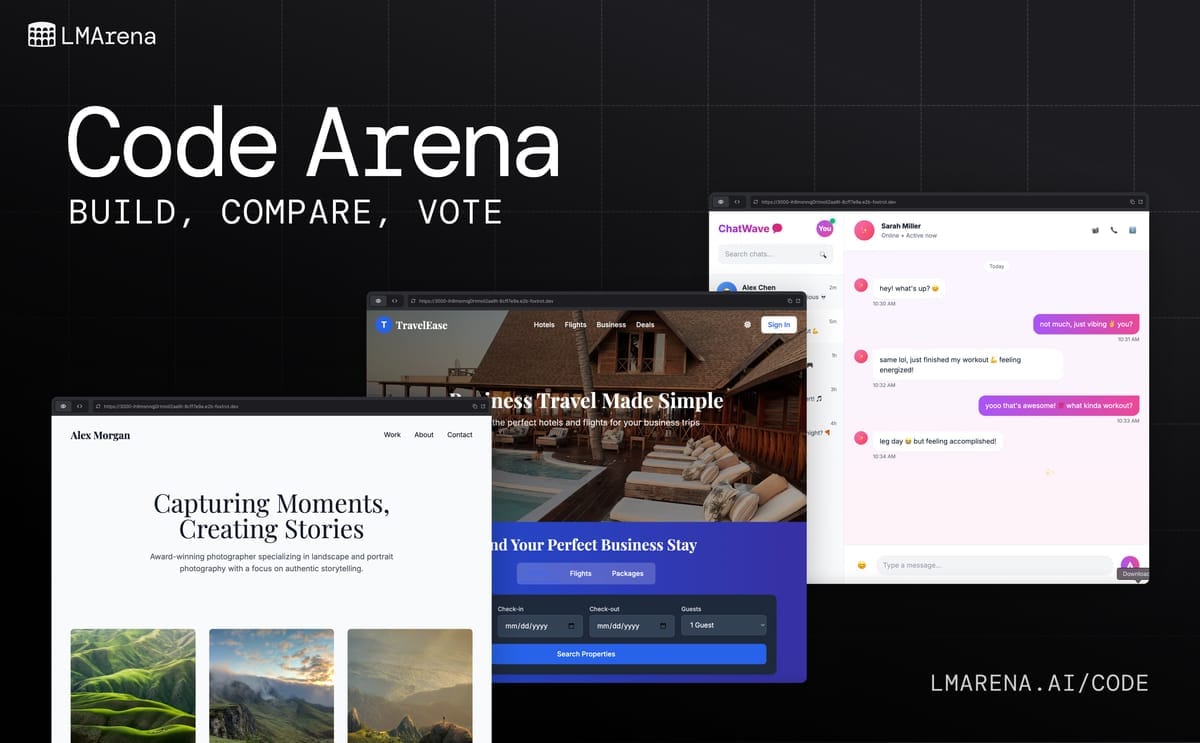

🚀Introducing Code Arena: the next generation of live coding evals for frontier AI models. Built to test how models plan, scaffold, debug, and build real web apps step-by-step.

Try Claude, GPT-5, GLM-4.6 and Gemini in Code Arena today!

— lmarena.ai (@arena) November 12, 2025

The system supports agentic behavior: models make structured tool calls to create, edit, and execute files. Each action is logged in persistent, restorable sessions, and generated outputs can be shared for peer review. A secure frontend streams coding sessions live, and every evaluation is tied to a unique, traceable ID to support reproducibility and transparency. Human evaluators compare model outputs on functionality, usability, and fidelity, and their judgments are aggregated statistically into leaderboard rankings.
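The announcement does not say which statistical method Code Arena uses to aggregate votes. A common way to turn pairwise human preferences into a leaderboard is the Bradley-Terry model, which LMArena has described using for its earlier arena leaderboards; the sketch below assumes that approach and is not Code Arena's actual implementation. It fits one strength per model from hypothetical `(winner, loser)` votes by gradient ascent on the log-likelihood, then maps strengths onto an Elo-like scale. The function names, hyperparameters, model names, and vote counts are all illustrative.

```python
import math
from typing import Dict, List, Tuple


def fit_bradley_terry(
    battles: List[Tuple[str, str]],
    lr: float = 0.5,
    epochs: int = 3000,
    l2: float = 0.01,
) -> Dict[str, float]:
    """Fit Bradley-Terry strengths from (winner, loser) votes (illustrative sketch).

    Model: P(i beats j) = 1 / (1 + exp(-(theta_i - theta_j))).
    A small L2 penalty keeps strengths finite for models that never win or never lose.
    """
    models = sorted({m for pair in battles for m in pair})
    theta = {m: 0.0 for m in models}
    n = len(battles)
    for _ in range(epochs):
        # Start each gradient with the L2 penalty term.
        grad = {m: -l2 * theta[m] for m in models}
        for winner, loser in battles:
            p_win = 1.0 / (1.0 + math.exp(-(theta[winner] - theta[loser])))
            # d(log-likelihood)/d(theta) for a single observed vote.
            grad[winner] += 1.0 - p_win
            grad[loser] -= 1.0 - p_win
        for m in models:
            theta[m] += lr * grad[m] / n
    return theta


def to_elo_scale(theta: Dict[str, float], base: float = 1000.0) -> Dict[str, float]:
    """Rescale log-strengths so a 400-point gap means 10:1 odds, as in Elo."""
    return {m: base + 400.0 / math.log(10) * t for m, t in theta.items()}


# Hypothetical votes: each pair of models is compared 10 times.
battles = (
    [("model-a", "model-b")] * 7 + [("model-b", "model-a")] * 3
    + [("model-b", "model-c")] * 6 + [("model-c", "model-b")] * 4
    + [("model-a", "model-c")] * 8 + [("model-c", "model-a")] * 2
)

ratings = to_elo_scale(fit_bradley_terry(battles))
for model, rating in sorted(ratings.items(), key=lambda kv: -kv[1]):
    print(f"{model}: {rating:.0f}")
```

Fitting all votes jointly, rather than updating ratings one vote at a time as classic online Elo does, makes the result independent of the order in which votes arrive, which fits the article's emphasis on reproducible rankings.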

Arena, the company behind Code Arena, previously ran WebDev Arena, one of the first large-scale human-in-the-loop coding benchmarks. Code Arena replaces that earlier effort with a platform rebuilt for methodological rigor, transparent data handling, and scientific measurement. The new leaderboard starts fresh and does not carry over WebDev Arena data, so that comparisons are not mixed with results collected under the old methodology. Industry experts and early users have described Code Arena as a step forward in transparent, reproducible evaluation and a new standard for testing AI coding agents.