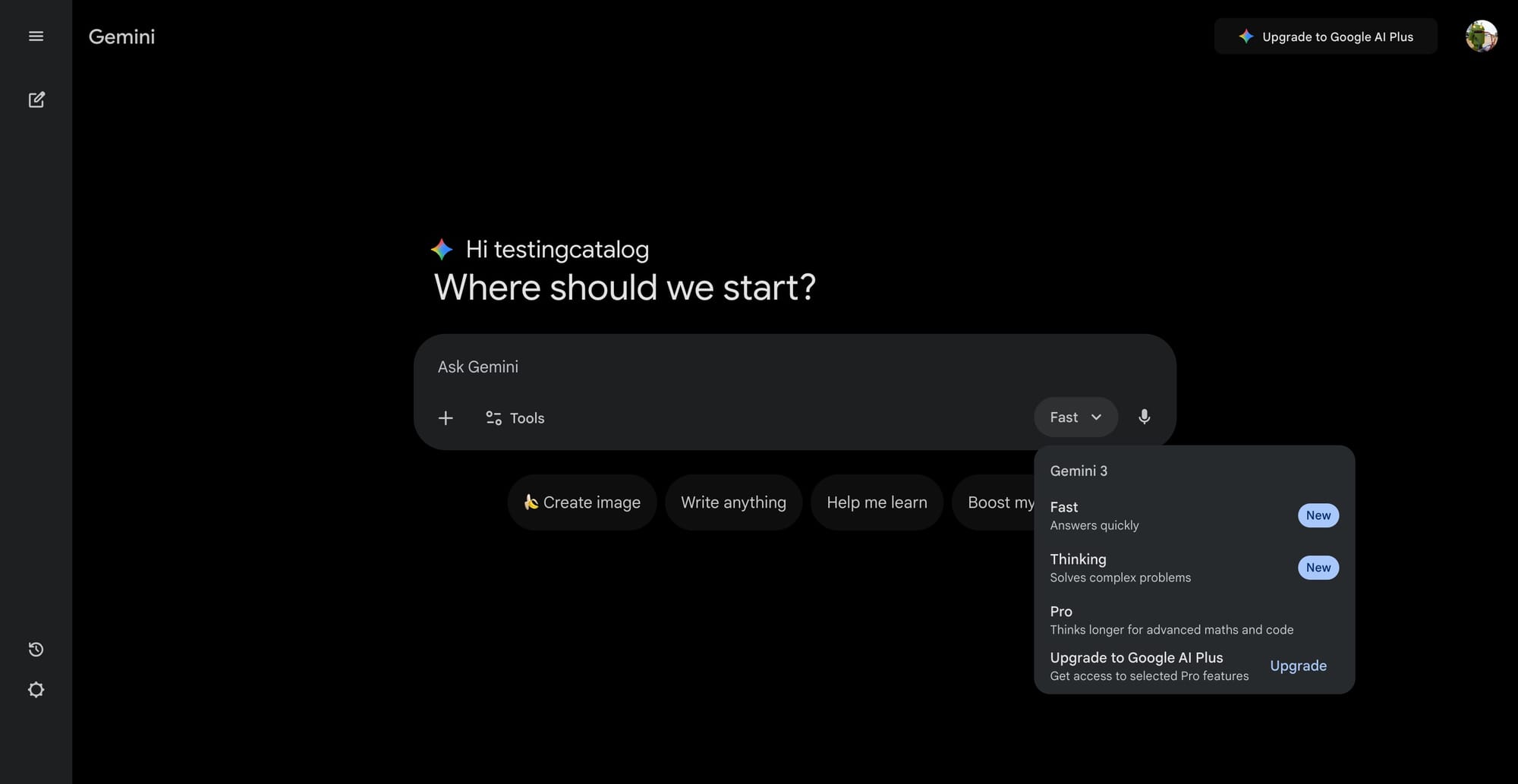

Google has unveiled Gemini 3 Flash, the latest addition to its Gemini 3 model family, designed to deliver fast responses and high-quality reasoning at a reduced cost. It is rolling out to millions of users worldwide through the Gemini app and AI Mode in Search, and is available to developers and enterprises via the Gemini API, Google AI Studio, Vertex AI, Gemini Enterprise, Gemini CLI, and Android Studio. The target audience spans developers, enterprises, and everyday users who need advanced AI for tasks ranging from coding to multimodal content analysis.

Gemini 3 Flash gives you frontier intelligence at a fraction of the cost. ⚡

Here’s how it’s built for speed and scale 🧵 pic.twitter.com/iEut7GStxo

— Google DeepMind (@GoogleDeepMind) December 17, 2025

Gemini 3 Flash stands out for its efficient token usage and strong benchmark results, surpassing the previous-generation Gemini 2.5 Pro in both speed and accuracy. It scores 90.4% on GPQA Diamond and 81.2% on MMMU Pro, rivaling larger models while responding three times faster and at a lower price: $0.50 per million input tokens and $3 per million output tokens.
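To make the quoted pricing concrete, here is a minimal sketch of the per-request arithmetic. It assumes a flat rate at the two prices given above and ignores any tiering, caching, or modality-specific rates, which this article does not cover. The function name `estimate_cost` and the sample workload are hypothetical, chosen only for illustration.

```python
from decimal import Decimal

# Prices quoted in the article, in US dollars per million tokens.
INPUT_PRICE_PER_MILLION = Decimal("0.50")
OUTPUT_PRICE_PER_MILLION = Decimal("3.00")
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request (hypothetical helper, flat-rate assumption)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = Decimal(input_tokens) / TOKENS_PER_MILLION * INPUT_PRICE_PER_MILLION
    output_cost = Decimal(output_tokens) / TOKENS_PER_MILLION * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests, each with 2,000 input and 500 output tokens.
    per_request = estimate_cost(2_000, 500)
    print(f"per request: ${per_request}")
    print(f"10,000 requests: ${per_request * 10_000}")
```

Under these assumptions a 2,000-token prompt with a 500-token reply costs a quarter of a cent, or about $25 for 10,000 such requests. Because output tokens cost six times as much as input tokens, reply length dominates the bill for most chat-style workloads.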

BREAKING 🚨: Google officially announced Gemini 3 Flash! It outperforms Gemini 3 Pro on SWE Bench Verified, plus scores a very high result on HLA and other benchmarks. https://t.co/qw0C4Le8FJ pic.twitter.com/6i8APL2jd3

— TestingCatalog News 🗞 (@testingcatalog) December 17, 2025

The model adjusts how long it reasons to match task complexity, using 30% fewer tokens on average than Gemini 2.5 Pro for everyday tasks. It scores 78% on SWE-bench Verified, which makes it a strong choice for both production systems and responsive consumer applications.

Google is positioning the release as a push on speed, scale, and affordability. Early enterprise adopters such as JetBrains, Bridgewater Associates, and Figma have already integrated Gemini 3 Flash, citing its balance of inference speed, reasoning, and cost efficiency. The rollout makes the model widely accessible to consumers and enterprise users alike.