DeepSeek has announced its new model, DeepSeek v3, a significant upgrade over its predecessor. The new model is reported to generate 60 tokens per second, three times faster than v2, and to offer enhanced capabilities. Like previous DeepSeek models, v3 is open source. According to reported benchmarks, it outperforms existing models, including Claude 3.5 Sonnet and GPT-4o, especially on math and coding benchmarks such as HumanEval.

🚀 Introducing DeepSeek-V3!

Biggest leap forward yet:

⚡ 60 tokens/second (3x faster than V2!)

💪 Enhanced capabilities

🛠 API compatibility intact

🌍 Fully open-source models & papers

🐋 1/n pic.twitter.com/p1dV9gJ2Sd

DeepSeek (@deepseek_ai), December 26, 2024
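To put the announced throughput in concrete terms, here is a minimal sketch of the arithmetic. The 60 tokens/second rate and the 3x speedup come from the announcement; the v2 rate is only implied by those two numbers, and the 1,200-token response length and the `generation_seconds` helper are hypothetical, chosen for illustration. Real latency also depends on load, prompt length, and network overhead, none of which is modeled here.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Return the time to emit num_tokens at a steady decoding rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Figures quoted in the announcement.
V3_TOKENS_PER_SECOND = 60.0
SPEEDUP_OVER_V2 = 3.0

# Implied by the two figures above, not stated directly in the announcement.
V2_TOKENS_PER_SECOND = V3_TOKENS_PER_SECOND / SPEEDUP_OVER_V2

# Hypothetical response length, picked only to make the comparison tangible.
RESPONSE_TOKENS = 1_200

v3_time = generation_seconds(RESPONSE_TOKENS, V3_TOKENS_PER_SECOND)
v2_time = generation_seconds(RESPONSE_TOKENS, V2_TOKENS_PER_SECOND)

print(f"v2 (implied {V2_TOKENS_PER_SECOND:.0f} tok/s): {v2_time:.0f} s")
print(f"v3 ({V3_TOKENS_PER_SECOND:.0f} tok/s): {v3_time:.0f} s")
```

Under these assumptions, a response that would have taken about a minute on v2 finishes in roughly twenty seconds on v3.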

With 671 billion parameters, DeepSeek v3 is reported to be the largest open-source language model to date, surpassing the 405 billion parameters of Meta's Llama 3.1. The model is available on Hugging Face and is gradually rolling out to the DeepSeek Chat UI, making it accessible to a wider audience.

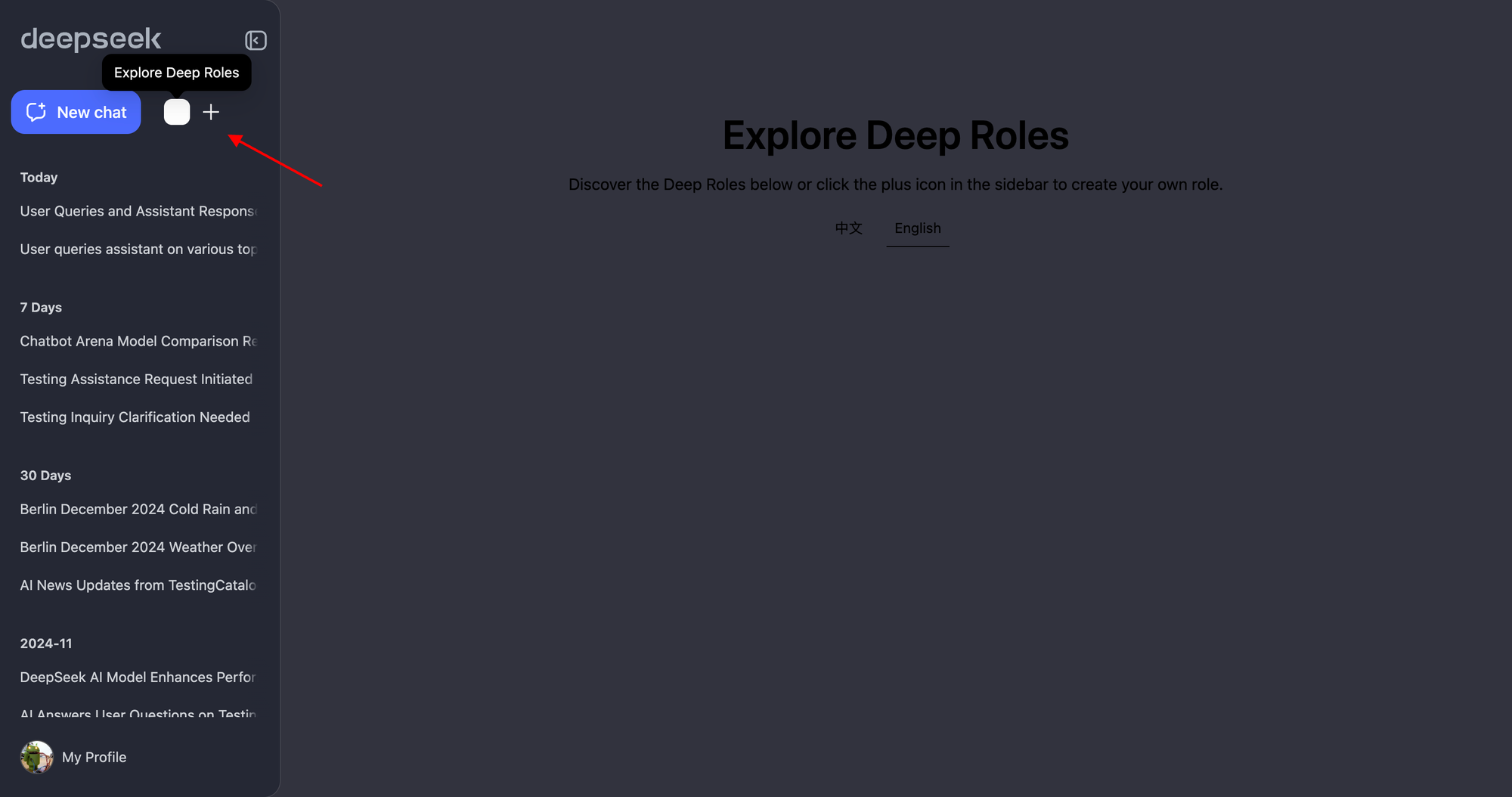

DeepSeek is working on Deep Roles 👀

Users will be able to create their own roles and explore roles created by other users (Custom GPTs?)

This feature is in the early development stage at this moment 🚧 pic.twitter.com/0T4pGt93bi

TestingCatalog News 🗞 (@testingcatalog), December 25, 2024

In addition to the new model, a hidden feature has been discovered in the DeepSeek ecosystem. Called Deep Roles, it is still in development and will allow users to design their own “roles” or explore roles created by others, in both Chinese and English. The feature appears to work much like Custom GPTs, letting users add personalized prompts to the DeepSeek LLM and share them publicly. The full scope of Deep Roles remains unclear, and further updates are expected as the feature evolves.