Cognition and Windsurf have announced SWE-grep and SWE-grep-mini, specialized agentic models built for fast, parallel context retrieval in large codebases. The models are available now through Windsurf's new Fast Context subagent and a demo playground. They target developers and technical teams who rely on coding agents, and aim to cut the time spent searching for relevant code context, a pain point that often consumes over 60% of an agent's initial operation time.

> We trained a first-of-its-kind family of models: SWE-grep and SWE-grep-mini.
>
> Designed for fast agentic search (>2,800 TPS), surface the right files to your coding agent 20x faster.
>
> Now rolling out gradually to Windsurf users via the Fast Context subagent. pic.twitter.com/oSI8JTIn28
>
> Windsurf (@windsurf), October 16, 2025

The Fast Context subagent is being rolled out progressively to Windsurf users. No special command is needed to activate it: Windsurf Cascade invokes the subagent automatically whenever a code search is necessary. Anyone can also experiment with the models in a dedicated playground. The models support multiple platforms, including Windows.

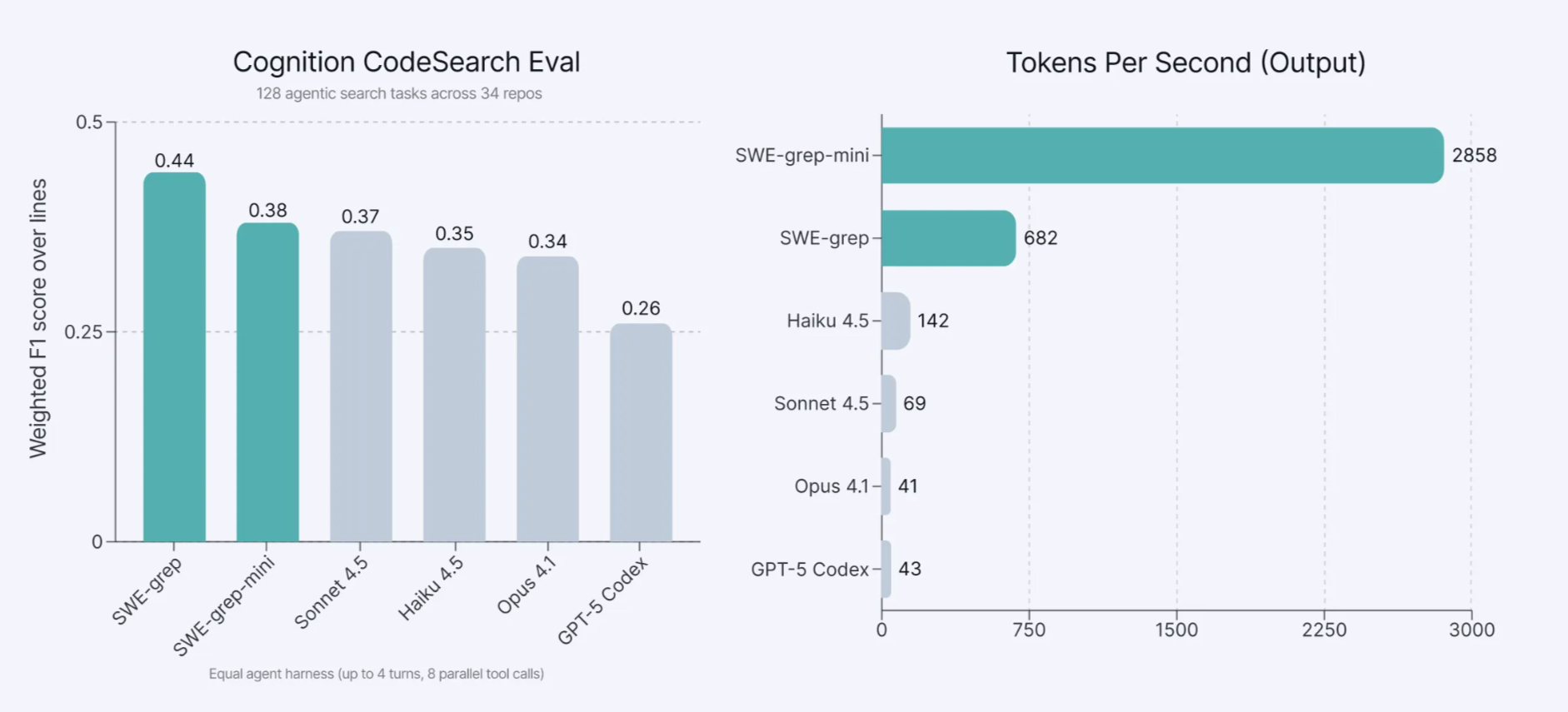

SWE-grep and SWE-grep-mini can execute up to 8 parallel tool calls per turn, for a maximum of 4 turns. This parallelism, combined with custom-optimized tool calls and fast inference powered by Cerebras, enables retrieval up to 20 times faster than leading competitors. Unlike traditional embedding-based retrieval or sequential agentic search, these models are trained with reinforcement learning to prioritize precision, which minimizes context pollution and saves agent tokens. Early benchmarks show they match or outperform top-tier models on the relevant tasks while sharply reducing latency.
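To make the turn and parallelism budget concrete, here is a minimal Python sketch of a bounded search loop: at most 4 turns, each issuing at most 8 tool calls concurrently, so no more than 32 tool calls in total. It is not Windsurf's implementation. The in-memory `REPO`, the toy `grep` tool, and the `propose_queries` function (a stand-in for the model's learned policy) are all hypothetical names introduced for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_TURNS = 4      # turn budget stated in the article
MAX_PARALLEL = 8   # parallel tool calls per turn stated in the article

# Hypothetical in-memory "codebase": path -> file contents.
REPO = {
    "auth/login.py": "def login(user):\n    return check_token(user)\n",
    "auth/token.py": "def check_token(user):\n    return user.token is not None\n",
    "ui/button.py": "class Button:\n    pass\n",
}


def grep(pattern):
    """Toy tool call: return sorted paths whose contents contain the pattern."""
    return sorted(path for path, text in REPO.items() if pattern in text)


def propose_queries(seen_files, turn):
    """Hypothetical stand-in for the model's policy: choose the next patterns.

    A real model would condition on the files seen so far; this fixed plan
    ignores `seen_files` and exists only to drive the loop.
    """
    plan = [["login", "session"], ["check_token"]]
    return plan[turn] if turn < len(plan) else []


def fast_context_search():
    """Run the bounded loop and return (relevant files, turns used)."""
    found = set()
    turns_used = 0
    with ThreadPoolExecutor(max_workers=MAX_PARALLEL) as pool:
        for turn in range(MAX_TURNS):
            # Cap the number of tool calls issued in a single turn.
            queries = propose_queries(found, turn)[:MAX_PARALLEL]
            if not queries:
                break  # the policy has nothing left to search for
            turns_used += 1
            # All tool calls in a turn are submitted together and run concurrently.
            for hits in pool.map(grep, queries):
                found.update(hits)
    return sorted(found), turns_used


if __name__ == "__main__":
    files, turns = fast_context_search()
    print(files, turns)
```

In this sketch the loop stops early once the policy proposes no further queries, so the 4-turn limit acts as a ceiling rather than a fixed cost. Issuing each turn's calls as one batch is what distinguishes this pattern from a sequential agentic search that waits on one tool call at a time.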

Cognition and Windsurf, both recognized for their work in AI-powered developer tools, are leveraging this launch to solidify their position in the coding agent space. By addressing the long-standing speed bottleneck in context retrieval, they aim to keep users "in flow" and boost productivity, with plans to expand SWE-grep’s deployment across additional products and future iterations.