Zhipu AI has announced GLM-4.7, the latest release in its General Language Model line, positioning it as a high-end foundation model aimed at advanced reasoning, coding, and multimodal workloads. The update targets developers, enterprises, and research teams building large-scale AI applications, with availability rolling out through Z.ai’s platform and APIs. The model expands context handling and reasoning depth compared to earlier GLM-4 versions, with a clear focus on long-form analysis, tool use, and complex instruction following.

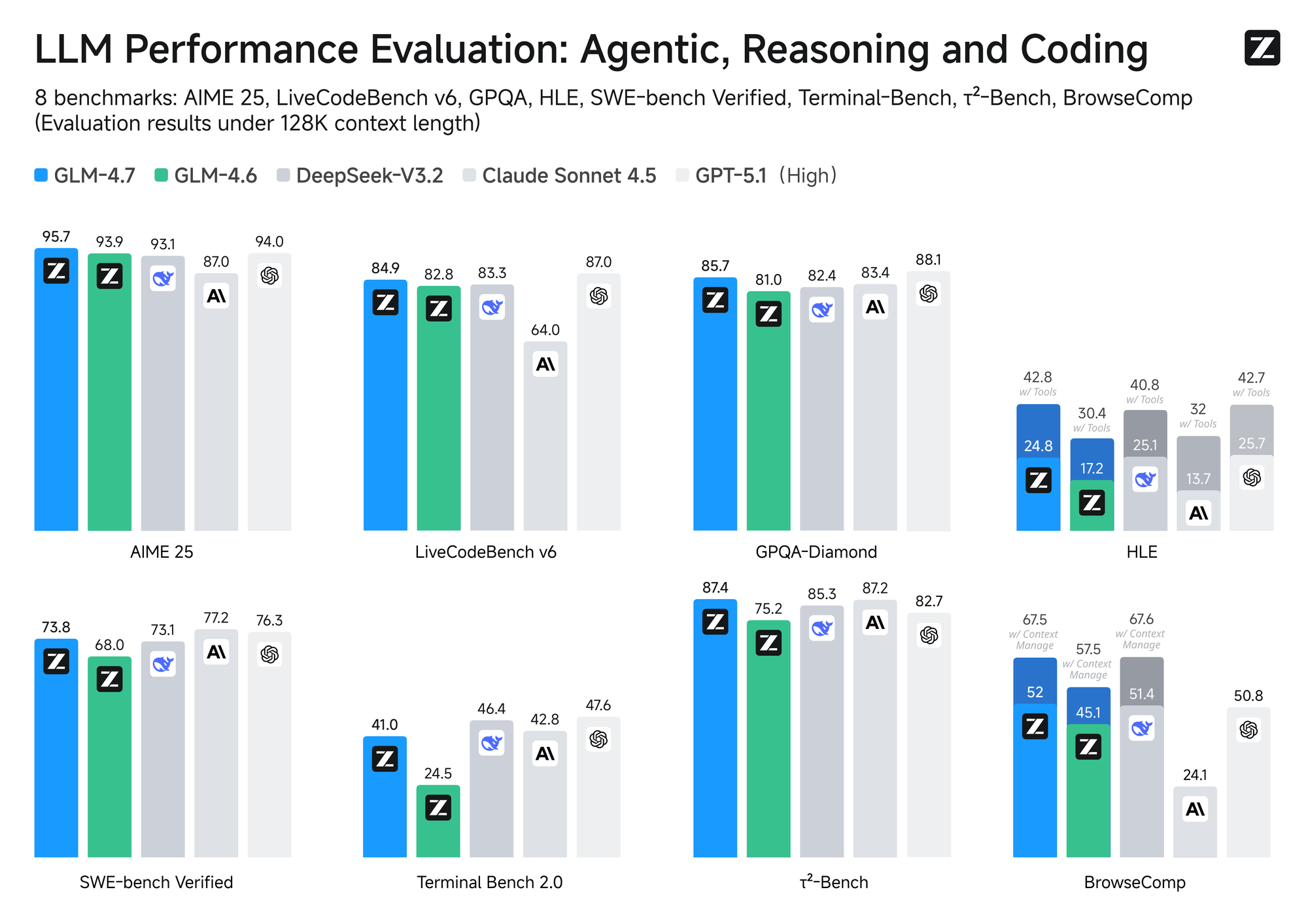

BREAKING 🚨: GLM-4.7 by @Zai_org scored 42% on the HLE benchmark, representing a 38% improvement over GLM-4.6 and approaching GPT-5.1 performance.

— TestingCatalog News 🗞 (@testingcatalog) December 22, 2025

New SOTA open source on SWE? 👀 https://t.co/YBx2bO8MS2 pic.twitter.com/1nh37pL69h
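The two figures in the tweet above can be cross-checked against each other. This assumes the "38% improvement" is relative to GLM-4.6's HLE score and is not a gain of 38 percentage points. The tweet does not say which is meant. Under that assumption, the implied GLM-4.6 score is:

$$
s_{\text{GLM-4.6}} = \frac{s_{\text{GLM-4.7}}}{1 + 0.38} = \frac{42\%}{1.38} \approx 30.4\%
$$

On that reading, the absolute gain is roughly $42\% - 30.4\% \approx 11.6$ percentage points.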

GLM-4.7 introduces upgraded reasoning pipelines and broader multimodal support, covering text and vision inputs with tighter alignment between perception and generation. Zhipu highlights stronger performance on coding tasks, structured outputs, and multi-step problem solving, which suggests training optimizations and post-training refinements over its GLM-4.5 and GLM-4.6 predecessors. The release also aligns the model more closely with agent-style workflows, in which the system plans, calls tools, and maintains state across longer sessions. Enterprise users increasingly demand this capability.

Frontend Optimization · Diverse Styles pic.twitter.com/PdNzhtELZ0

— Z.ai (@Zai_org) December 22, 2025

Both standard and streaming API calls are supported. A standard call returns the complete response at once, which suits batch processing. A streaming call delivers output incrementally as it is generated, which suits real-time interfaces. Compared with previous versions, GLM-4.7 offers more robust reasoning and greater conversational depth, which positions it as a competitor to other leading generative AI models. Early user feedback highlights the model's consistent output and flexible integration options, with particular praise for its open API structure.
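The difference between the two call styles can be sketched on the client side. The sketch assumes that Z.ai returns responses in an OpenAI-style chat-completions format. Under that assumption, a standard response carries the full reply in `choices[0].message.content`. A streaming response arrives as server-sent-event lines (`data: {...}`) whose `choices[0].delta.content` holds one fragment each, ending with `data: [DONE]`. This format is an assumption and is not confirmed here. The helper names and sample payloads are hypothetical, and no network call is made.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(sse_lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from streaming (server-sent-event) lines.

    Assumed format (not taken from Z.ai documentation): each event is a
    line 'data: {json}' carrying choices[0].delta.content, and the stream
    ends with 'data: [DONE]'.
    """
    for raw in sse_lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel in the assumed format
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:  # some chunks may carry only metadata, not text
                yield text


def full_text_from_response(body: dict) -> str:
    """Extract the reply from a standard (non-streaming) response body."""
    return body["choices"][0]["message"]["content"]


# Hypothetical sample data standing in for real API responses.
sample_stream = [
    'data: {"choices": [{"delta": {"content": "GLM"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "-4.7"}}]}',
    "data: [DONE]",
]
sample_body = {"choices": [{"message": {"content": "GLM-4.7"}}]}

if __name__ == "__main__":
    # Streaming: render each fragment as soon as it arrives.
    for piece in iter_stream_text(sample_stream):
        print(piece, end="", flush=True)
    print()
    # Standard: the whole reply is available in one object.
    print(full_text_from_response(sample_body))
```

Both paths yield the same text. Streaming lets an interface show partial output immediately. The standard call is simpler when only the finished result matters.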

The company frames GLM-4.7 as part of its broader push to compete with leading global foundation models while maintaining compliance with regional deployment requirements in China. By shipping this upgrade through Z.ai, Zhipu AI is signaling continued investment in large-scale models for both domestic and international developers, as competition intensifies around reasoning quality, context length, and multimodal execution.