The year is coming to an end, making it a perfect time for a yearly review. Many creators closely follow products like ChatGPT, Claude, and Perplexity, and several of them have put together insightful yearly recaps. I am excited to share these reviews on our website, along with relevant links to provide more context.

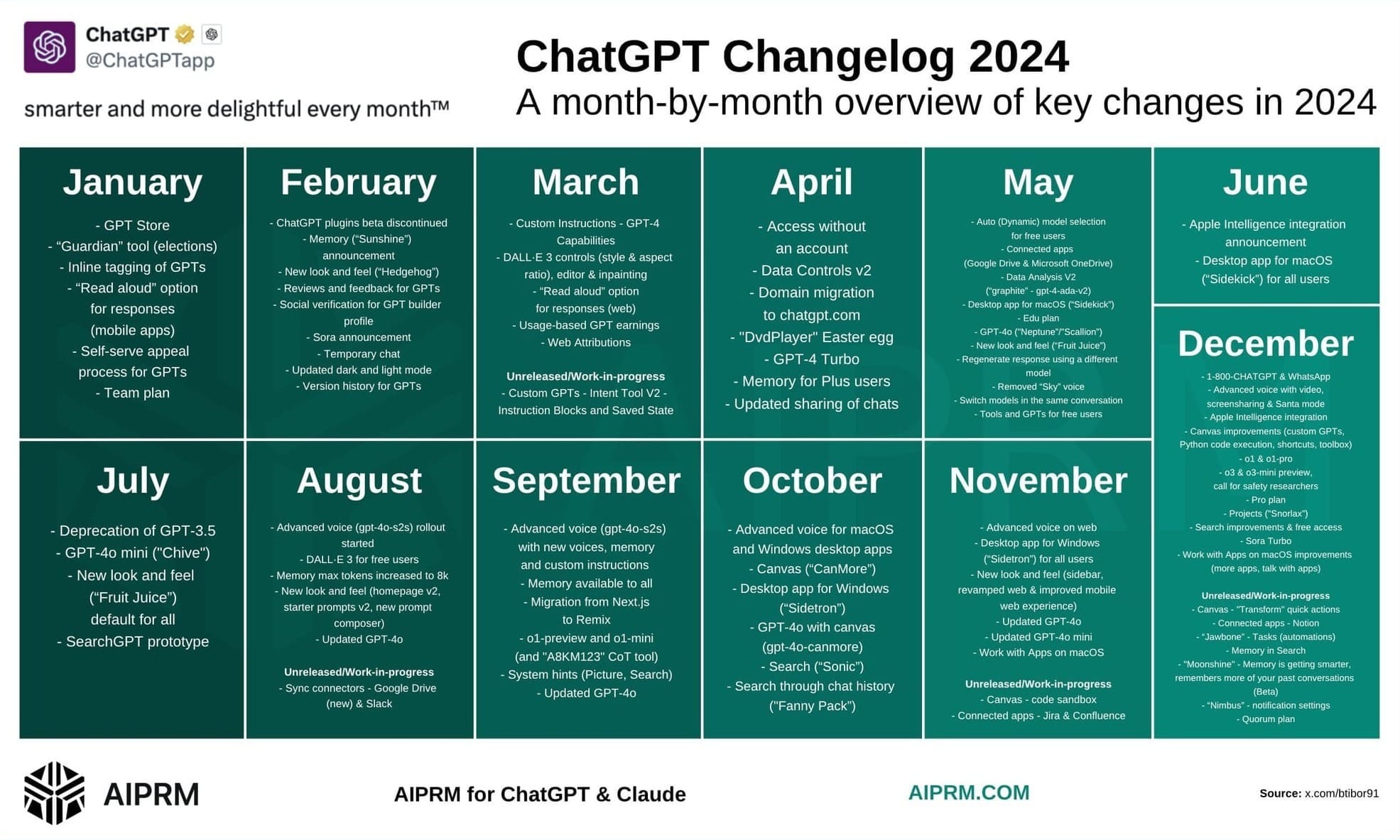

Starting with ChatGPT, we can explore an infographic prepared by Tibor that highlights the key features developed throughout the year. Interestingly, June turned out to be the month with the fewest feature releases, coming right after the major announcements in May. In contrast, December saw the most updates, with many of them unveiled during OpenAI’s “12 Days of OpenAI” initiative.

Let’s dive deeper into each feature and its specifics.

January

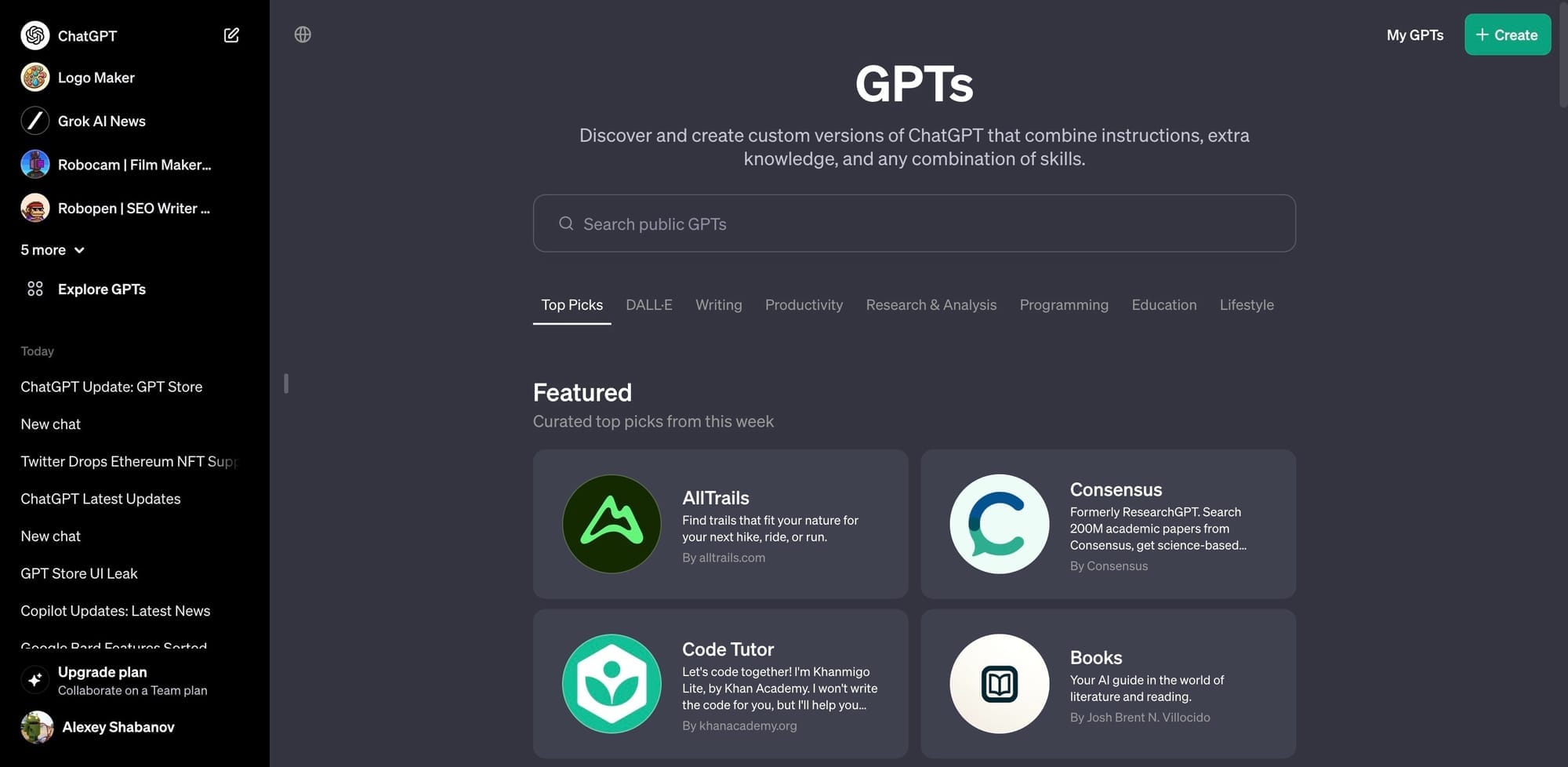

The year started with the release of the GPT Store, which came with high hopes and expectations that OpenAI would become a platform where users could build AI agents. Many builders started experimenting with what was possible, and some GPTs, such as Grimoire, gained a lot of attention and a sizeable user base.

I think many of them are still in use today. In the long run, however, it became clear that most people use their own GPTs with custom prompts for their own use cases rather than relying on GPTs created by others. The GPT Store kept receiving new features during the first half of the year, but development stagnated afterwards, and few new features arrived in the second half.

- GPT Store 🔥

- “Guardian” tool (elections)

- Inline tagging of GPTs

- “Read aloud” option for responses (mobile apps)

- Self-serve appeal process for GPTs

- Team plan

February

In February, besides further polishing of the GPT Store, we got the Sora announcement, although Sora itself was only released in December. Curiously, this announcement pumped the price of Worldcoin, a correlation worth noting. We also got the announcement of the Memory feature, which took some time to be released, especially in the EU.

- ChatGPT plugins beta discontinued

- Memory (“Sunshine”) announcement 🔥

- New look and feel (“Hedgehog”)

- Reviews and feedback for GPTs

- Social verification for GPT builder profiles

- Sora announcement

- Temporary chat

- Updated dark and light mode

- Version history for GPTs

March

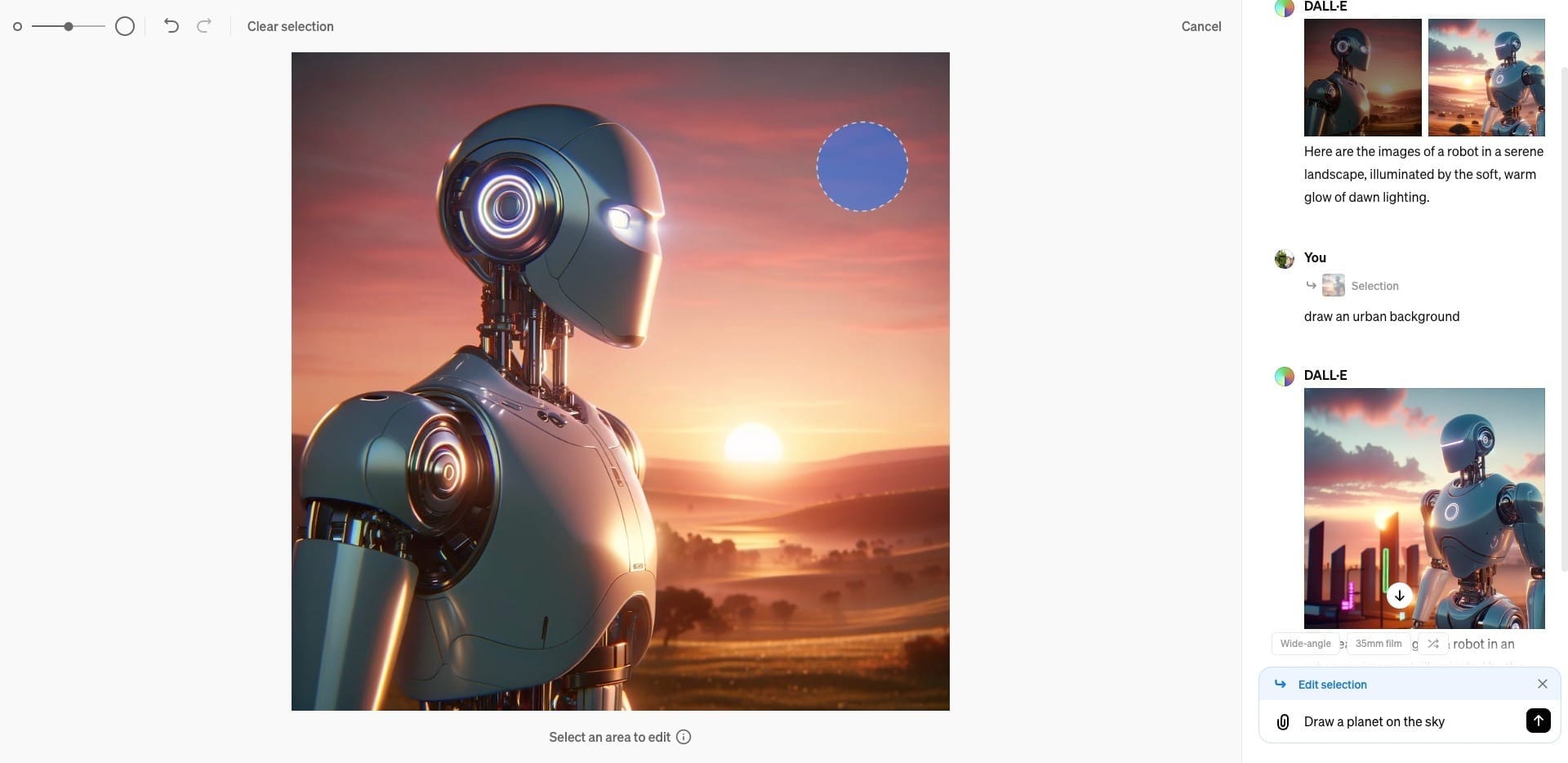

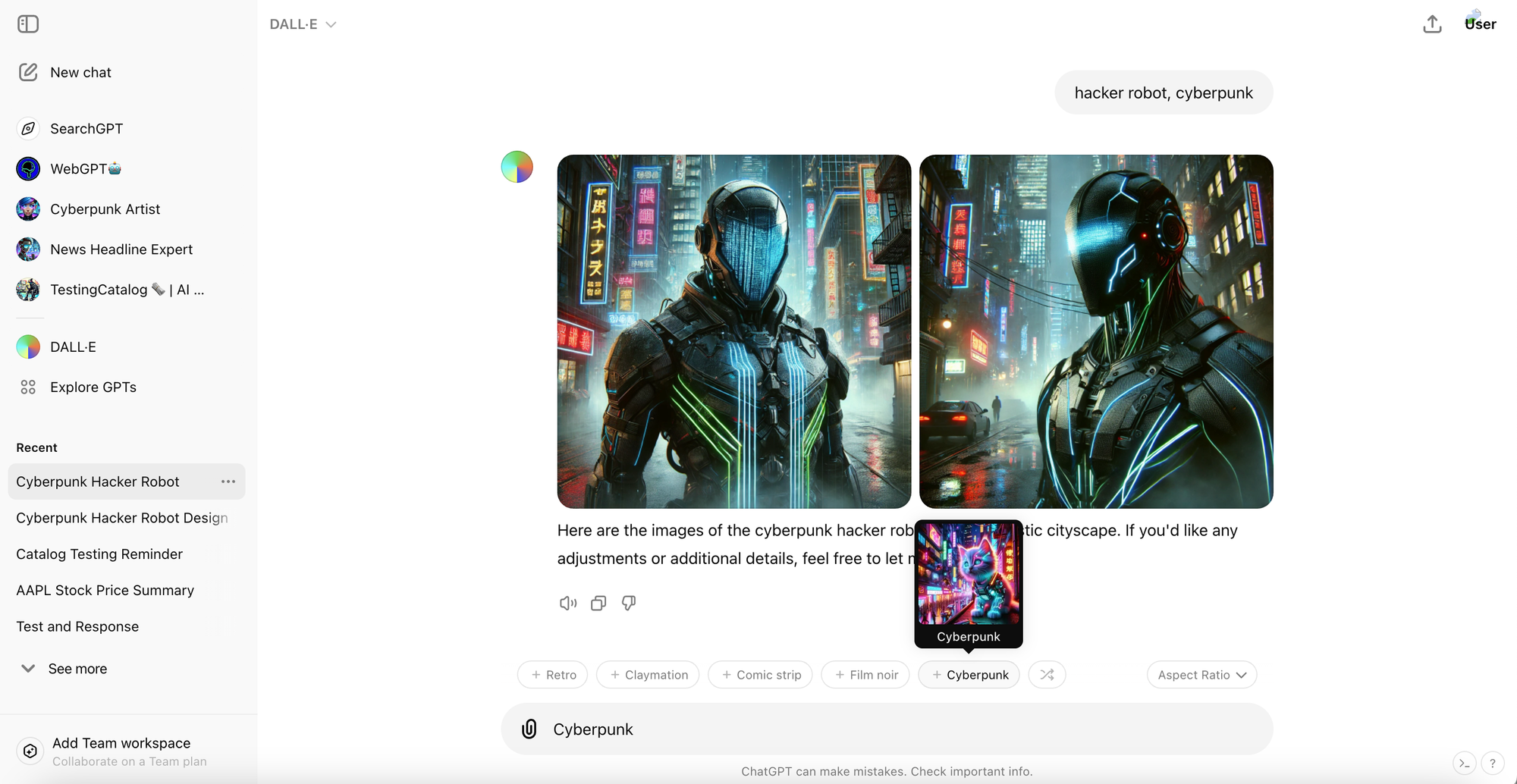

In March, OpenAI introduced new tools for image creation and editing, including the release of a built-in image editor. Additionally, OpenAI announced a revenue-sharing program for GPT builders. However, this was likely the last time we heard about such a program, as it seems the initiative never really took off or gained traction.

- Custom Instructions

- GPT-4 Capabilities

- DALL-E 3 controls (style & aspect ratio), edit & inpainting 🔥

- “Read aloud” option for responses (web)

- Usage-based GPT earnings

- Web Attributions

- Unreleased/Work-in-progress:

  - Custom GPTs

  - Intent Tool V2

  - Instruction Blocks and Saved State

April

In April, OpenAI released Data Analysis Version 2, enabling users to build charts and work with data in a more efficient and user-friendly way. The Memory feature was also finally rolled out to Plus users during this time. Additionally, early signs of vision capabilities in the iOS app were spotted ahead of the official announcement in May.

- ChatGPT Vision leak 🔥

- Access without an account

- Data Controls v2

- Domain migration to chatgpt.com

- “DvdPlayer” Easter egg

- GPT-4 Turbo

- Memory for Plus users

- Updated sharing of chats

May

In May, OpenAI hosted its famous Demo Day, which took place just one day before Google I/O. During the event, they showcased vision capabilities, advanced voice mode for both mobile and desktop, and introduced the highly anticipated desktop app. The desktop app became available immediately after the conference.

Shortly after, reverse engineers spotted a download link for a separate alpha build of the macOS application and gained access to it. This leaked version included developer tools, allowing users to toggle various feature flags and preview the advanced voice mode UI. While not yet functional, it provided an exciting glimpse of what was to come.

Unfortunately, May also marked the removal of the Sky voice, a favourite for many users. On a brighter note, GPT-4o was officially released, cementing itself as one of the top-performing models on LM Arena.

- Auto (Dynamic) model selection for free users

- Connected apps (Google Drive & Microsoft OneDrive)

- Data Analysis V2 (“graphite”: gpt-4-da-v2)

- Desktop app for macOS (Sidekick) 🔥

- GPT-4o (“Neptune”/“Scallion”)

- New look and feel (“Fruit Juice”)

- Regenerate response using a different model

- Removed “Sky” voice

- Switch models in the same conversation

- Tools and GPTs for free users

June

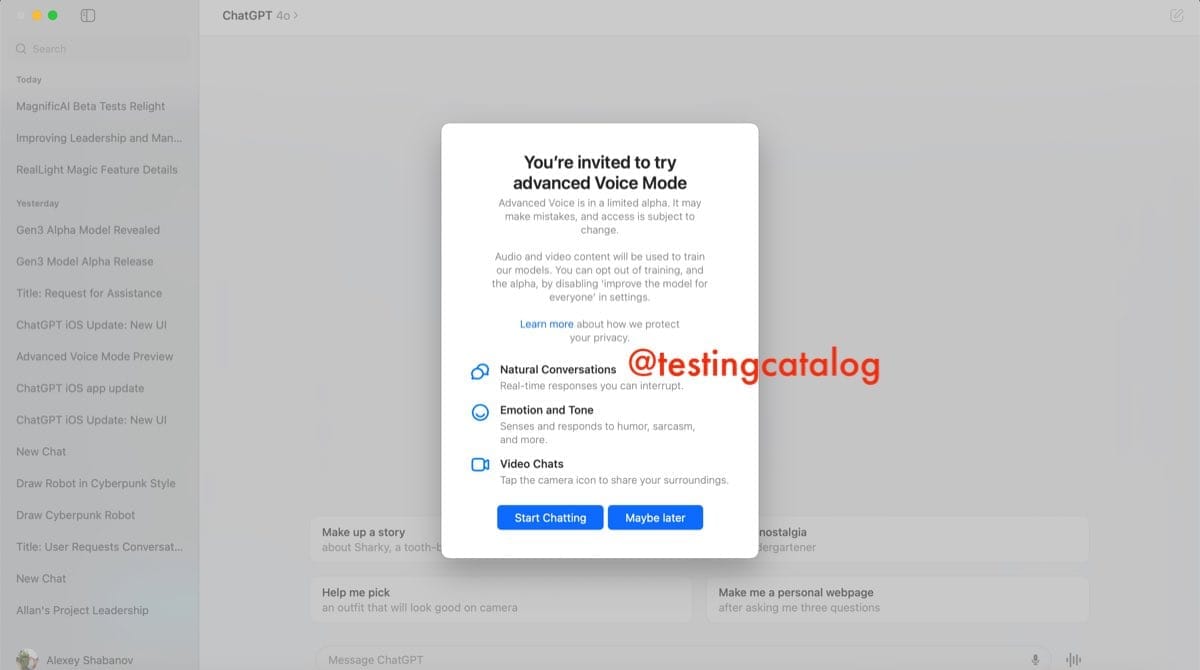

In June, Apple announced a partnership with OpenAI to integrate ChatGPT into “Apple Intelligence.” Around the same time, OpenAI shipped several updates to their macOS desktop app, making it available to all users. At the time, there were high expectations that OpenAI would soon release the advanced voice mode, as numerous hints pointed in that direction.

However, OpenAI later announced that the release of advanced voice mode would be delayed, likely due to regulatory compliance requirements. Additionally, the feature’s stability might not have been at a level suitable for public release.

As a result, advanced voice mode was not broadly released until September, and vision capabilities for mobile followed in December. Meanwhile, vision functionality for desktop users remains unavailable to this day.

- Apple Intelligence integration announcement

- Desktop app for macOS (“Sidekick”) for all users 🔥

July

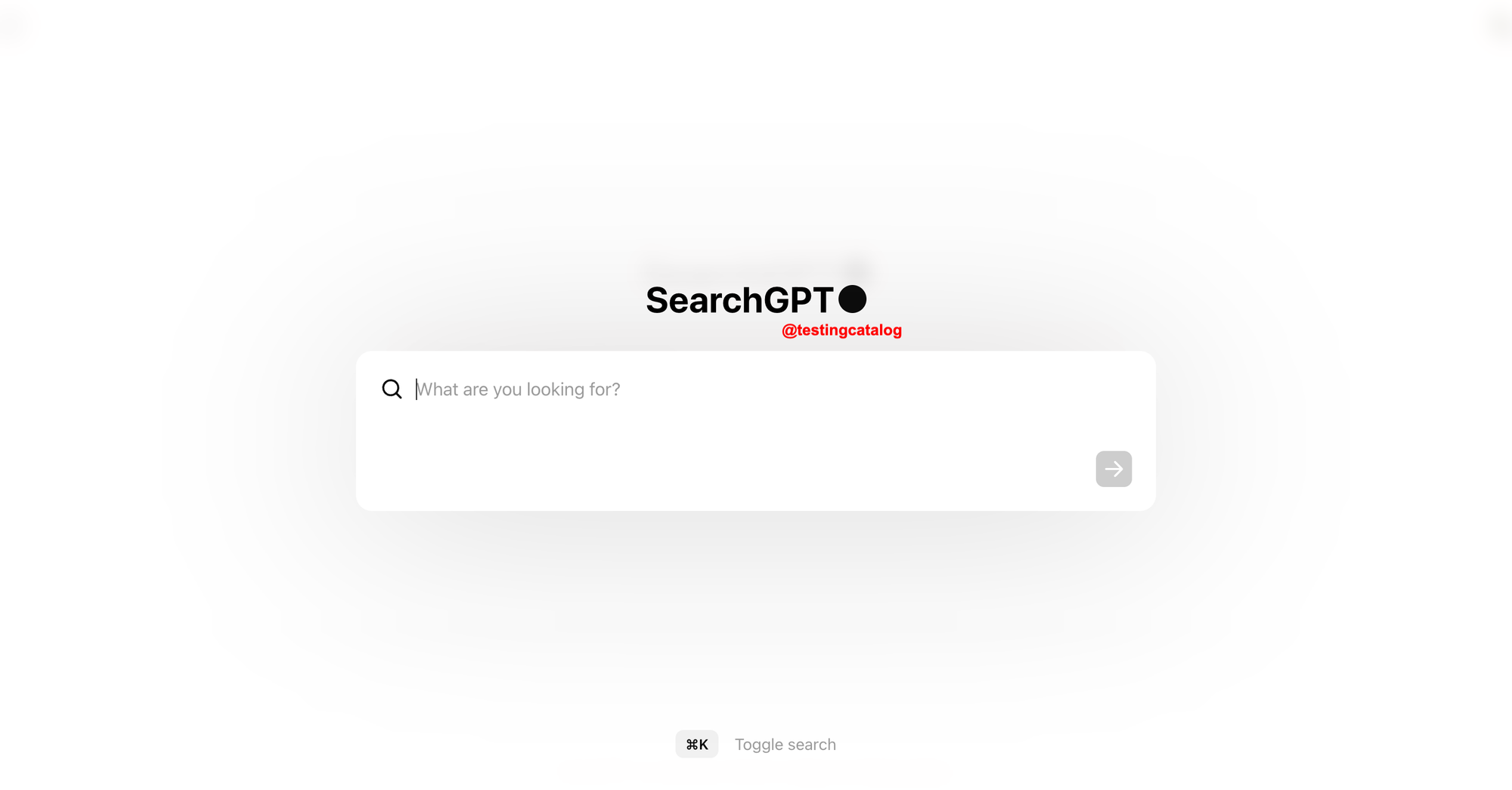

In July, the ChatGPT user interface underwent a complete revamp. Additionally, OpenAI announced their SearchGPT prototype, which marked their entry into the search engine space and positioned them as a potential competitor to tools like Google Search or Perplexity. This prototype was initially very limited, available only to a small group of users, and the waitlist was closed shortly afterwards.

Later, this prototype was integrated directly into the core ChatGPT product, expanding its functionality and accessibility.

- Deprecation of GPT-3.5

- GPT-4o mini (“Chive”)

- New look and feel (“Fruit Juice”) default for all

- SearchGPT prototype 🔥

August

In August, the long-awaited advanced voice mode began rolling out to a limited group of users, with a broader release following in September. During the same period, the ChatGPT homepage was redesigned to adopt a look and feel more aligned with a search product, reflecting OpenAI’s evolving direction.

- Advanced voice (gpt-4o-s2s) rollout started

- DALL-E 3 for free users 🔥

- Memory max tokens increased to 8k

- New look and feel (homepage v2, starter prompts v2, new prompt composer)

- Updated GPT-4o

- Unreleased/Work-in-progress:

  - Sync connectors (Google Drive (new) & Slack)

September

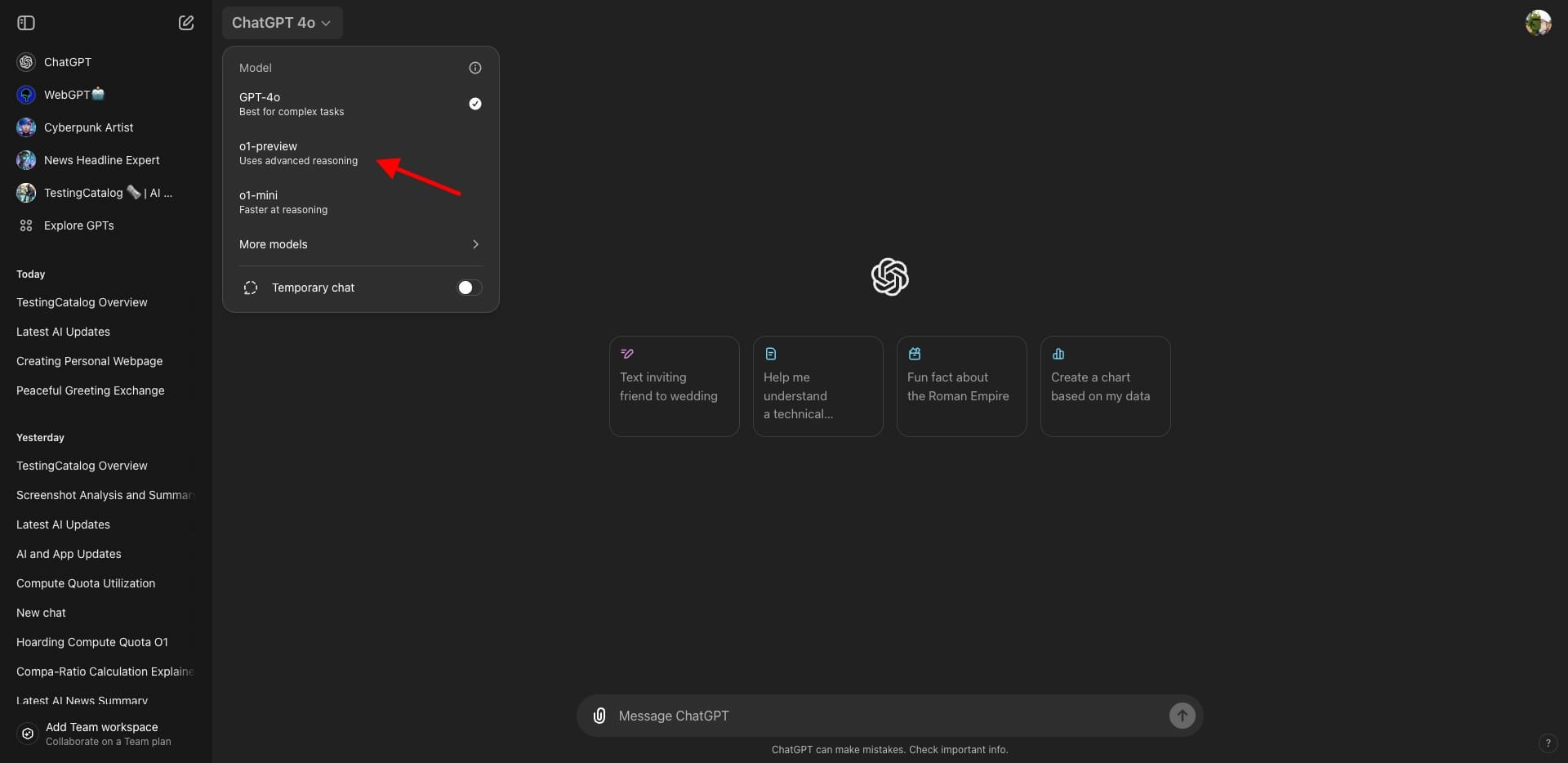

In September, Advanced Voice Mode was finally made available to a broader audience. OpenAI also introduced o1-preview and o1-mini, new models that reason using chain of thought before answering. Alongside these releases, OpenAI published evaluations comparing the performance of the new models with existing ones.

Initially, these new offerings came with strict usage limits, which were slightly increased shortly afterwards to accommodate user feedback and demand.

- Advanced voice (gpt-4o-s2s) with new voices, memory, and custom instructions

- Memory available to all

- Migration from Next.js to Remix

- o1-preview and o1-mini (and “A8KM123” CoT tool)

- System hints (Picture, Search)

- Updated GPT-4o

October

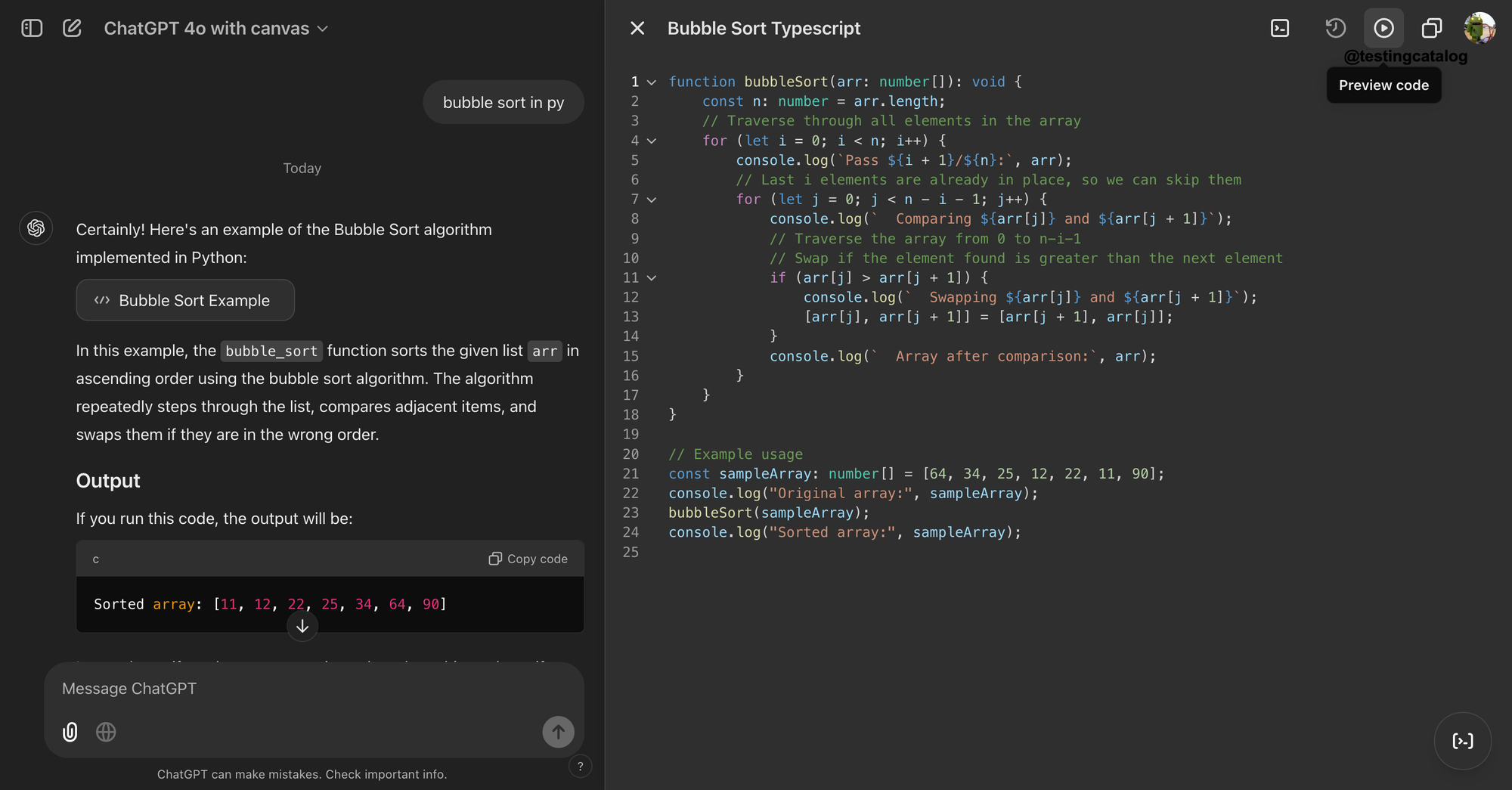

In October, Windows users gained access to OpenAI’s Electron-based desktop app. Additionally, OpenAI introduced Canvas, their answer to Claude’s Artifacts, which lets ChatGPT display code or text in a separate side panel for easier editing. Real-time search was also integrated directly into the core ChatGPT product.

- Advanced voice for macOS and Windows desktop apps

- Canvas (“CanMore”)

- Desktop app for Windows (“Sidetron”)

- GPT-4o with canvas (gpt-4o-canmore)

- Search (“Sonic”)

- Search through chat history (“Fanny Pack”)

November

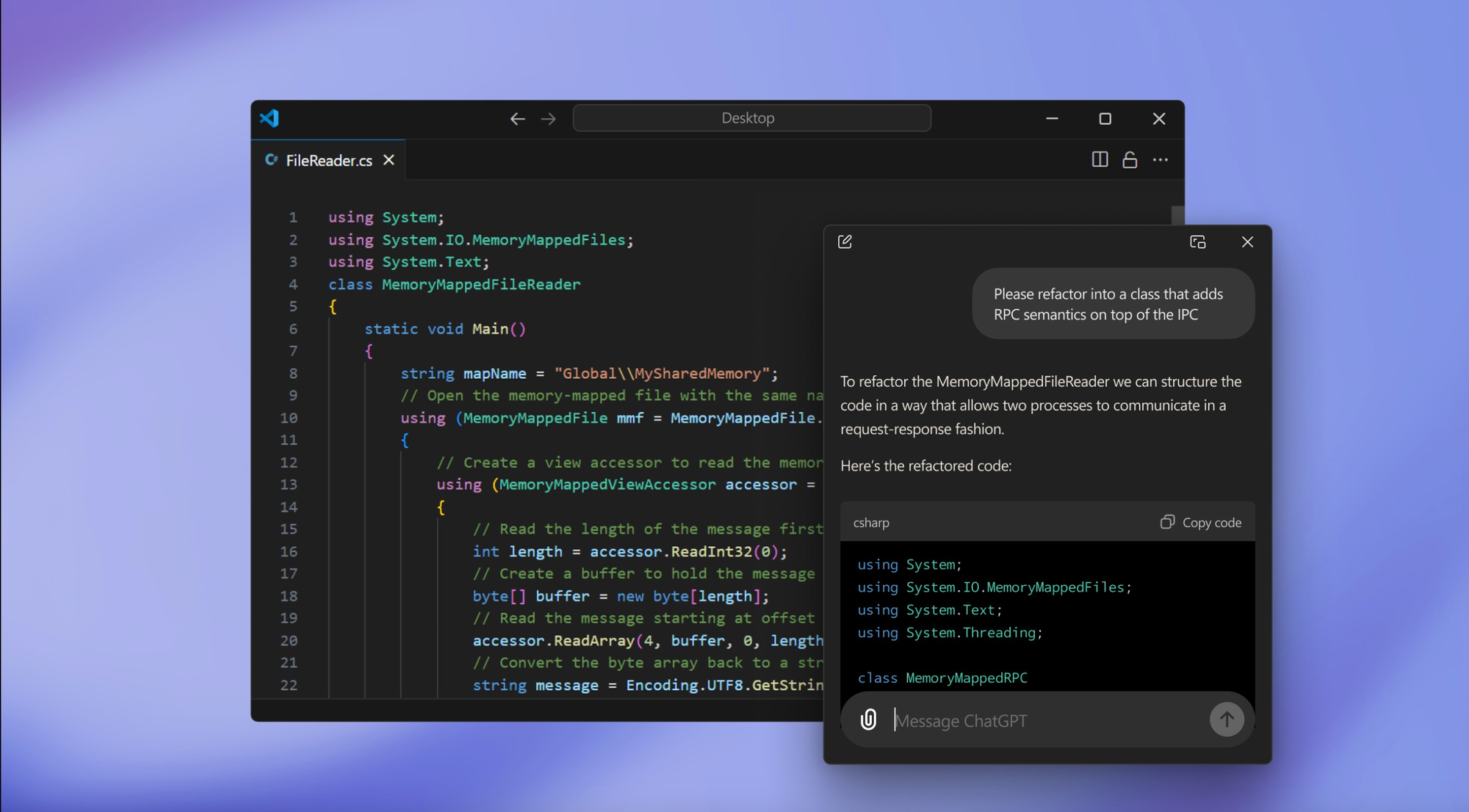

In November, many existing features were made available to a wider user base, and OpenAI released updated versions of GPT-4o and GPT-4o mini. The macOS desktop app introduced the Work with Apps feature in beta, allowing ChatGPT to integrate more closely with external applications. Advanced Voice Mode was also finally rolled out on the web, expanding its accessibility.

- Advanced voice on the web

- Desktop app for Windows (“Sidetron”) for all users

- New look and feel (sidebar, revamped web/mobile experience)

- Updated GPT-4o

- Updated GPT-4o mini

- Work with Apps on macOS

- Unreleased/Work-in-progress:

  - Canvas - code sandbox

  - Connected apps - Jira & Confluence

December

December was one of the most exciting months for OpenAI, thanks to their 12 Days of OpenAI event, during which they released or announced something new, big or small, every day. Interestingly, in late November, just before the event began, some users accidentally discovered a way to access the non-preview version of o1, which remained available for a few hours.

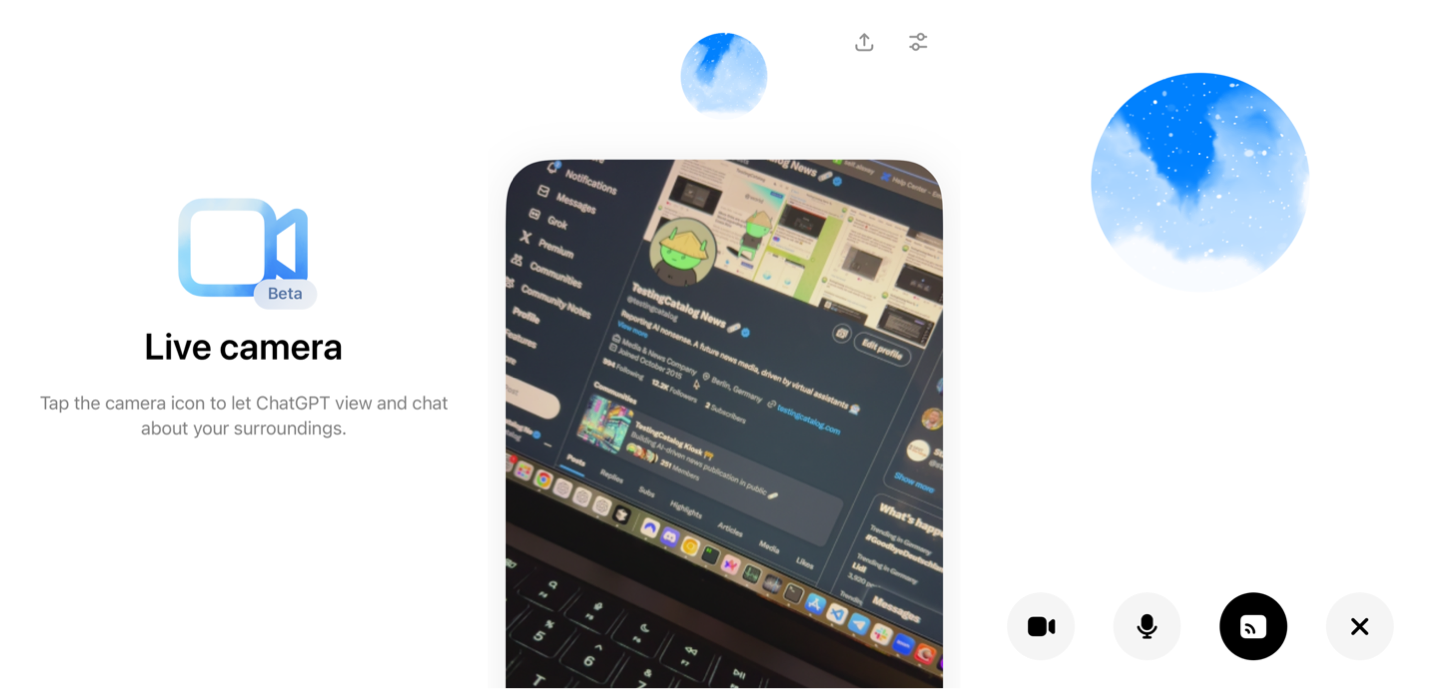

On the first day of December, users also spotted traces of GPT-4.5, though it was never officially announced. Additionally, the Pro plan made its first appearance on the same day. The event brought a series of significant announcements, including the release of Sora, vision for Advanced Voice Mode (AVM), the introduction of Projects, the announcement of o3, and numerous other improvements.

One highly anticipated feature, Tasks, was also spotted in development. This feature would allow users to schedule prompts or create automations. It was expected to be released during the 12 Days of OpenAI, but for unknown reasons, its launch was postponed. It’s now likely we will see this feature released sometime in 2025.

- 1-800-CHATGPT & WhatsApp

- Advanced voice mode with video, screen sharing & Santa mode 🔥

- Apple Intelligence integration

- Canvas improvements (custom GPTs, Python code execution, shortcuts, toolbox)

- o3 & o3-mini preview, call for safety researchers

- Pro plan

- Projects (“Snorlax”)

- Search improvements & free access

- Sora Turbo

- Work with Apps on macOS improvements (more apps, link with apps)

- Unreleased/Work-in-progress:

  - Canvas - “Transform” quick actions

  - Connected apps - Notion

  - “Jawbone”: Tasks (automation)

  - Memory in Search

  - “Moonshine”: smarter Memory setting that remembers more of past conversations (Beta)

  - “Nimbus”: Notification settings

  - Quorum plan

Forgot some of these? You can find a detailed changelog for ChatGPT on TestingCatalog. Which feature has been your favourite?

Drop your thoughts below in the comments 👇