xAI has released Grok 4 Fast, a cost-efficient reasoning model aimed at both developers and end users. The rollout is immediate, with availability on grok.com, iOS, Android, and through the xAI API. Early access is also provided via OpenRouter and Vercel AI Gateway. The company highlights Grok 4 Fast's 2 million token context window and its unified architecture, which lets the model switch between reasoning and non-reasoning modes using a single set of weights.

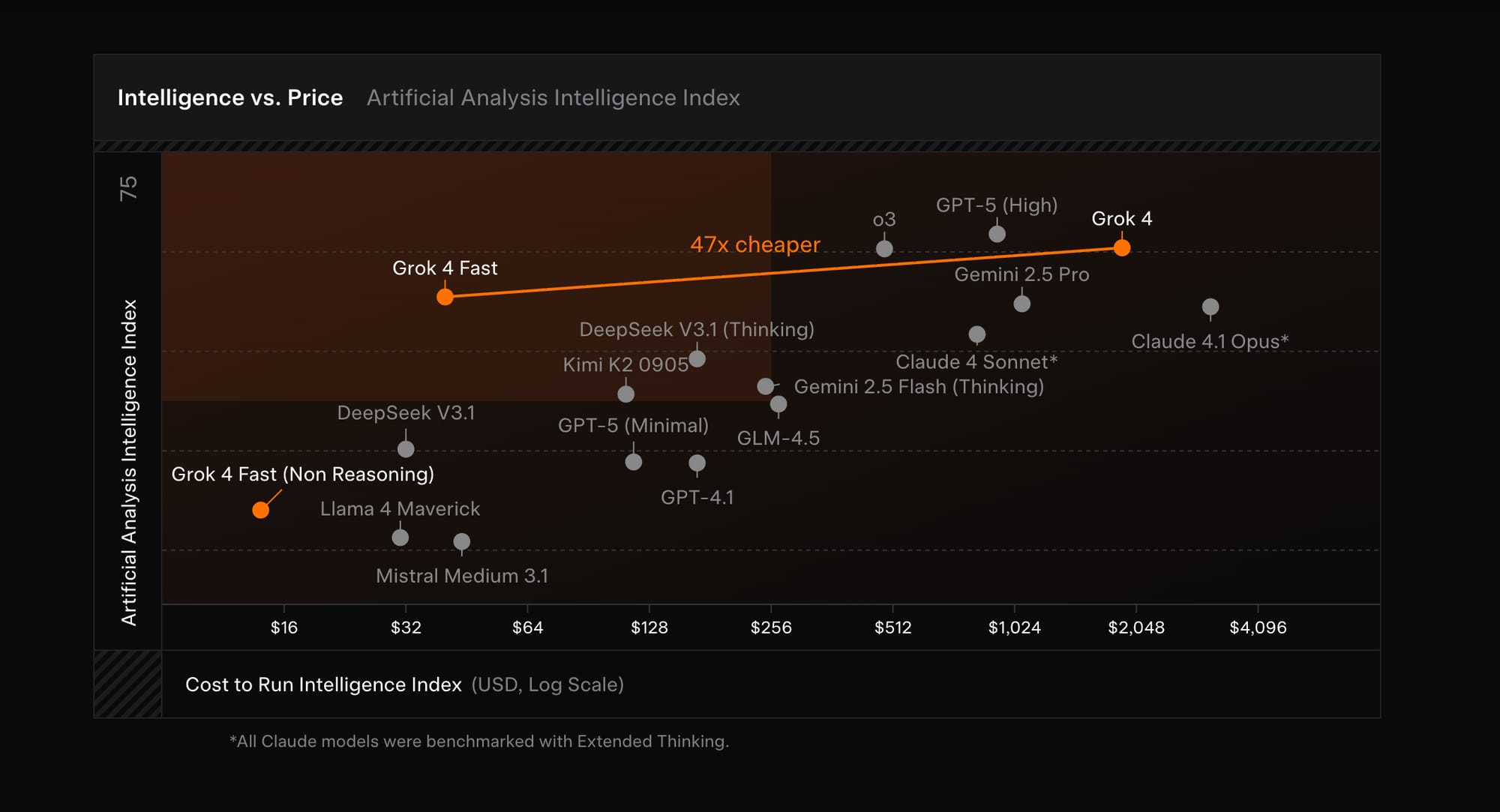

Grok 4 Fast introduces native web and X search, with support for multihop browsing and direct ingestion of media. The model is designed for end-to-end tool use, and xAI reports that it uses 40% fewer thinking tokens than Grok 4 while maintaining comparable accuracy. Combined with lower per-token prices, xAI says this yields a 98% lower price to reach the same benchmark results as the standard Grok 4.

Pricing is set at $0.20 per 1M input tokens, $0.50 per 1M output tokens, and $0.05 per 1M cached input tokens, with higher rates for requests that exceed 128k tokens of context. Live Search is billed per 1,000 sources, and customers can choose between the `grok-4-fast-reasoning` and `grok-4-fast-non-reasoning` API SKUs.
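The quoted prices lend themselves to a quick back-of-the-envelope calculation. The sketch below is a hypothetical helper, not part of any xAI SDK: it uses only the rates and SKU names quoted in this article. It assumes cached input tokens are counted separately from uncached input and that the 128k tier boundary means 128,000 prompt tokens. It deliberately refuses to price longer contexts because the higher rates are not given here, and it excludes Live Search charges.

```python
# Grok 4 Fast rates quoted above, in USD per 1M tokens, for the <=128k tier.
# Rates for longer contexts are higher and are NOT modeled in this sketch.
RATE_INPUT = 0.20
RATE_OUTPUT = 0.50
RATE_CACHED_INPUT = 0.05

# Assumption: the tier boundary is 128,000 prompt tokens (uncached + cached).
CONTEXT_TIER_LIMIT = 128_000

# API SKU names as quoted in the announcement.
SKU_REASONING = "grok-4-fast-reasoning"
SKU_NON_REASONING = "grok-4-fast-non-reasoning"


def pick_sku(needs_reasoning: bool) -> str:
    """Return the SKU name for reasoning or non-reasoning requests."""
    return SKU_REASONING if needs_reasoning else SKU_NON_REASONING


def estimate_cost(input_tokens: int, output_tokens: int,
                  cached_input_tokens: int = 0) -> float:
    """Estimate token cost in USD for one request in the <=128k tier.

    Hypothetical helper. Assumes input_tokens counts only uncached prompt
    tokens and cached_input_tokens is billed at the cached rate.
    Live Search (billed per 1,000 sources) is not included.
    """
    if min(input_tokens, output_tokens, cached_input_tokens) < 0:
        raise ValueError("token counts must be non-negative")
    prompt_tokens = input_tokens + cached_input_tokens
    if prompt_tokens > CONTEXT_TIER_LIMIT:
        raise ValueError("contexts above 128k use higher rates not modeled here")
    return (
        input_tokens * RATE_INPUT
        + output_tokens * RATE_OUTPUT
        + cached_input_tokens * RATE_CACHED_INPUT
    ) / 1_000_000


if __name__ == "__main__":
    sku = pick_sku(needs_reasoning=True)
    cost = estimate_cost(input_tokens=100_000, output_tokens=10_000)
    print(f"{sku}: ${cost:.4f}")
```

For example, a reasoning request with 100,000 uncached input tokens and 10,000 output tokens comes to about $0.025 under these rates. Raising an error above 128k, rather than guessing, keeps the sketch from silently understating the cost of long-context calls.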

Introducing Grok 4 Fast, a multimodal reasoning model with a 2M context window that sets a new standard for cost-efficient intelligence.

Available for free on https://t.co/AnXpIEOhOD, https://t.co/53pltypvkw, iOS and Android apps, and OpenRouter. https://t.co/3YZ1yVwueV

— xAI (@xai) September 19, 2025

xAI, founded by Elon Musk, is accelerating its model deployment across consumer and developer channels. With this release, the company is pushing for broader adoption in both enterprise and individual use cases, targeting those seeking scalable AI with robust reasoning capabilities and cost control.