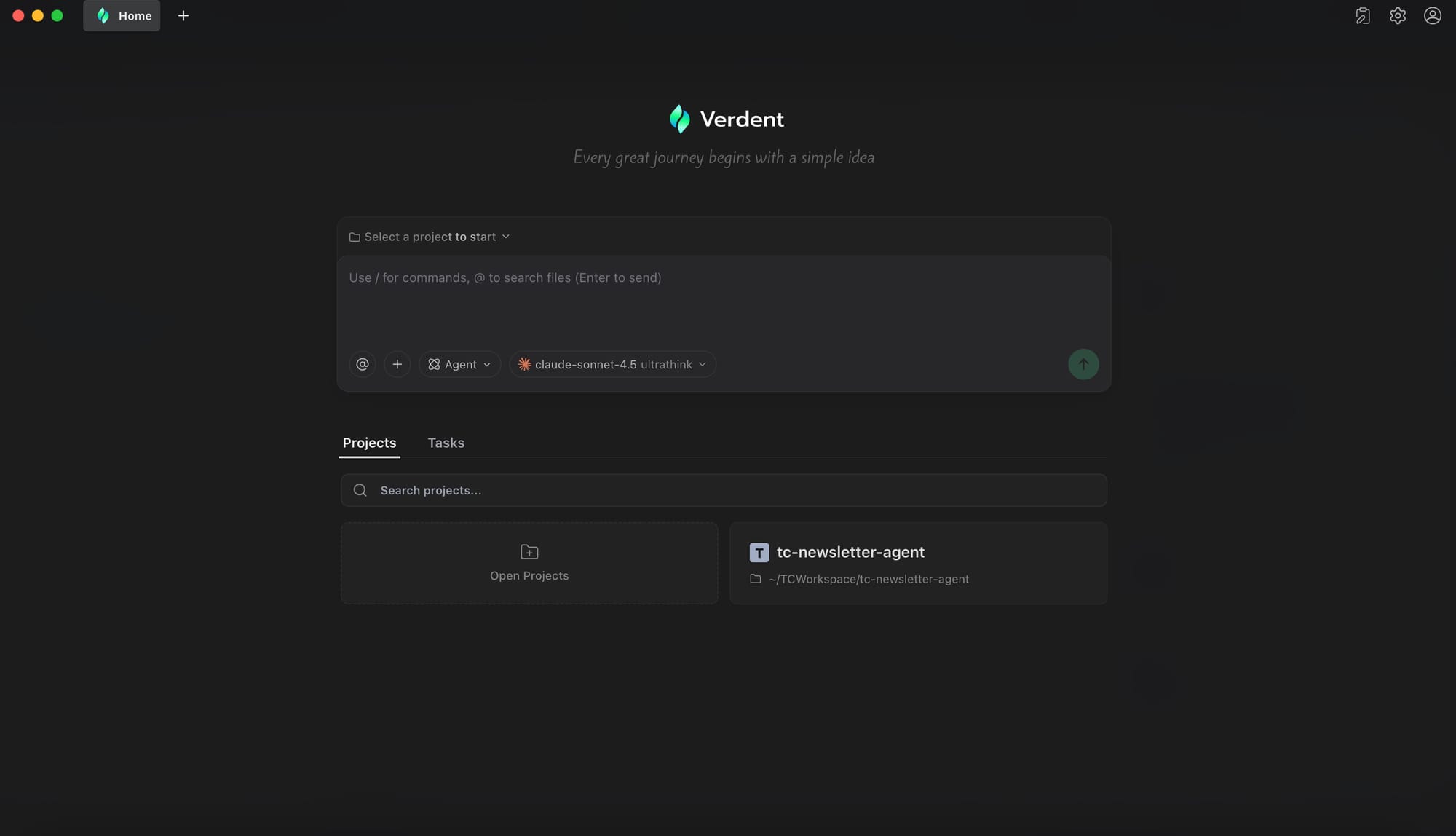

Verdent, a developer-focused coding agent, has launched with a 76.1% pass@1 score on SWE-bench Verified, a benchmark recognized for its rigorous assessment of software-engineering agents. The product is available to the public immediately and targets professional and advanced developers who want a reliable AI coding companion for real-world tasks. Verdent ships as a Visual Studio Code extension and as a standalone application for broader workflow support. Both give access to top-tier models such as Claude Sonnet 4.5 and the GPT series, and users can switch models as needed.

> Verdent AI achieved a score of 76.1% at pass@1 and 81.2% at pass@3 without parallel test-time compute on SWE-bench Verified!
>
> Claude, GPT, and MiniMax models power Verdent agents, coordinating verification steps that review and validate code before it’s finalized. https://t.co/6XZCvxhL5H
>
> TestingCatalog News 🗞 (@testingcatalog), November 3, 2025
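For readers unfamiliar with the metric, pass@k is commonly read as the share of benchmark tasks solved by at least one of k attempts. The sketch below illustrates that reading on made-up data. The function name `pass_at_k` and the toy results are assumptions for illustration, and the announcement does not describe exactly how Verdent's figures were computed.

```python
from typing import Sequence


def pass_at_k(results: Sequence[Sequence[bool]], k: int) -> float:
    """Fraction of tasks solved by at least one of the first k attempts.

    `results[i][j]` is True when attempt j on task i passed its tests.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    if not results:
        raise ValueError("results must contain at least one task")
    solved = sum(1 for attempts in results if any(attempts[:k]))
    return solved / len(results)


# Hypothetical outcomes for four tasks, three attempts each (not Verdent data).
toy_results = [
    [True, True, True],     # solved on the first attempt
    [False, True, False],   # solved on the second attempt
    [False, False, False],  # never solved
    [False, False, True],   # solved on the third attempt
]

print(pass_at_k(toy_results, 1))  # 0.25
print(pass_at_k(toy_results, 3))  # 0.75
```

Because extra attempts can only add solved tasks, pass@3 is never lower than pass@1, which is consistent with the reported 81.2% versus 76.1%.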

The system orchestrates multiple subagents and advanced models in a unified plan-code-verify cycle, automating coding, debugging, and code review. Unlike the results many competitors report, Verdent’s scores come from the actual production agent, not a research-tuned version. Key technical features include:

- Persistent to-do lists for task tracking
- Automated verification after edits
- Static code analysis
- Intelligent code review subagents
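One way to picture how these features could fit together is a loop that walks a to-do list, generates a candidate edit, and accepts it only once every quality gate passes. This is a minimal sketch under stated assumptions, not Verdent's implementation: `Task`, `run_cycle`, `fake_writer`, and both checks are hypothetical names, and Python's standard `ast.parse` stands in for real static analysis.

```python
import ast
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Task:
    """One entry in a persistent to-do list (hypothetical structure)."""
    description: str
    done: bool = False
    attempts: int = 0


Check = Callable[[str], bool]


def parses_cleanly(code: str) -> bool:
    """Stand-in static analysis: the candidate must be valid Python syntax."""
    try:
        ast.parse(code)
        return True
    except SyntaxError:
        return False


def has_return(code: str) -> bool:
    """Stand-in review gate: a toy rule requiring a return statement."""
    return "return" in code


def run_cycle(todo: List[Task], write_code: Callable[[str, int], str],
              checks: List[Check], max_attempts: int = 3) -> List[str]:
    """Plan-code-verify loop: retry each task until all checks pass."""
    accepted = []
    for task in todo:
        while not task.done and task.attempts < max_attempts:
            task.attempts += 1
            candidate = write_code(task.description, task.attempts)
            if all(check(candidate) for check in checks):
                accepted.append(candidate)
                task.done = True
    return accepted


def fake_writer(description: str, attempt: int) -> str:
    """Fake model call: broken code first, valid code on the retry."""
    if attempt == 1:
        return "def broken(:"
    return f"def solution():\n    # {description}\n    return 1\n"


todo = [Task("add a helper"), Task("fix the parser")]
accepted = run_cycle(todo, fake_writer, [parses_cleanly, has_return])
print(len(accepted), [task.attempts for task in todo])
```

In this toy run each task fails the syntax gate once and is accepted on the second attempt, which shows how verification after every edit keeps rejected candidates out of the final result.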

Verdent also supports parallel task resolution and aims for stable performance by choosing reliable model providers. Early user feedback highlights the robust toolset design and the value of quality gates such as code review.

The company behind Verdent positions itself at the forefront of AI-powered software development tools, emphasizing reliability, production readiness, and real-world applicability over benchmark optimization. The launch signals a strategic push to set industry standards in coding automation, aiming to streamline developer workflows while maintaining high code quality and transparency in model selection.