Perplexity is preparing to add support for Kimi K2 Thinking, an open-source model that has drawn attention for performance on par with leading proprietary models. Various labs are already adopting and fine-tuning it, which underscores its flexibility and competitiveness. For Perplexity, integrating Kimi K2 Thinking would echo its earlier move with DeepSeek, which it hosted within specific jurisdictions, notably the US. That setup supports a regional data-processing narrative that appeals to users and organizations with strict data residency requirements.

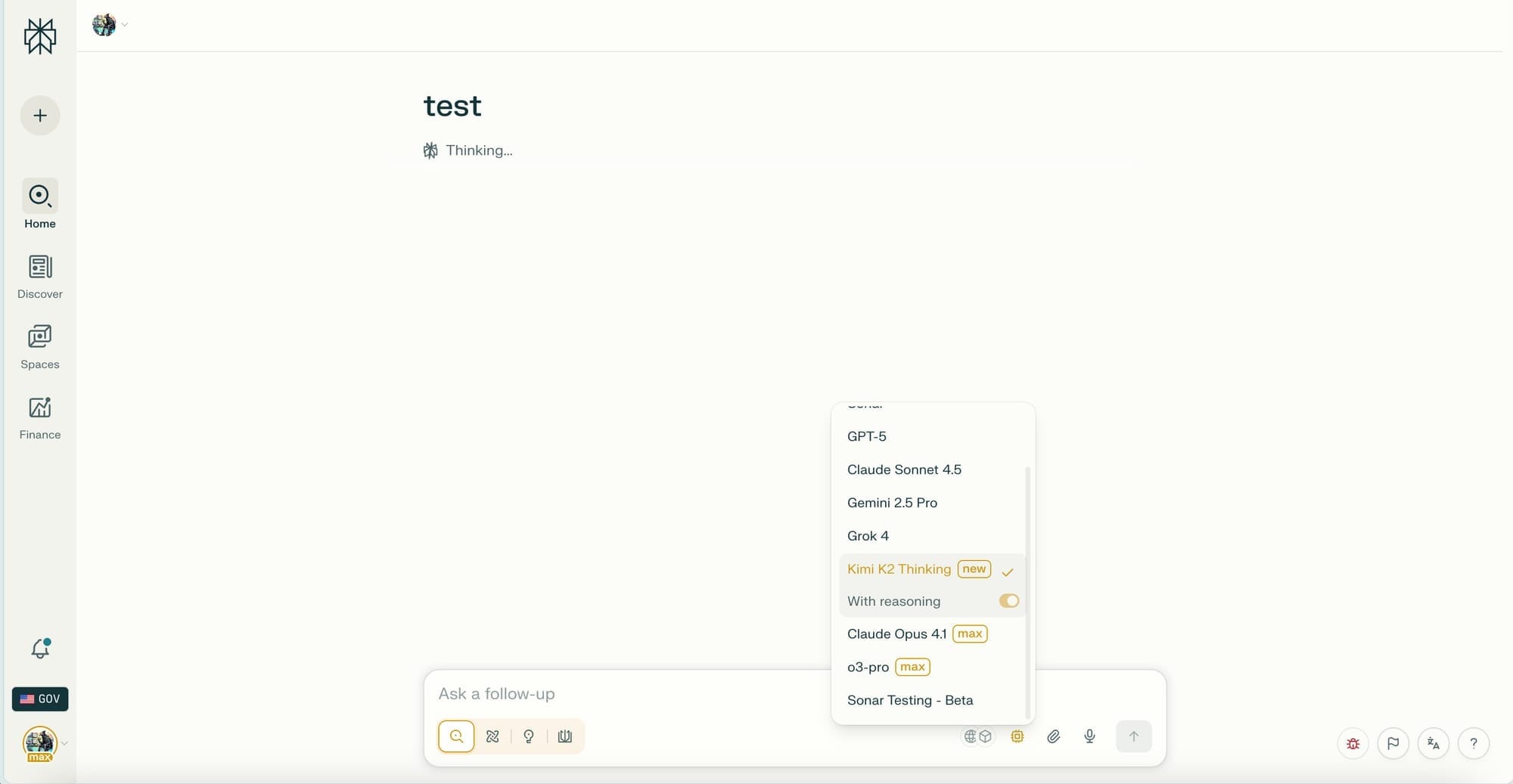

Kimi K2 thinking appears to have just dropped on Perplexity - not working yet though pic.twitter.com/RyvQdIEpc4

— EdDiboi (@EdDiberd) November 11, 2025

Early traces suggest that Perplexity will offer both the thinking and standard versions of Kimi K2, allowing users to toggle reasoning on or off. The model briefly appeared in the UI for some users but was quickly removed. Full access is still pending, though it appears imminent.

Perplexity is the first to develop custom Mixture-of-Experts (MoE) kernels that make trillion-parameter models available with cloud platform portability.

Our team has published this work on arXiv as Perplexity's first research paper. Read more: https://t.co/SNdgWTeO8F

— Perplexity (@perplexity_ai) November 5, 2025

Recent research from Perplexity on minimizing latency for the non-thinking Kimi K2 variant points to ongoing work on optimizing performance for real-world applications. There is also speculation that Perplexity’s forthcoming Sonar models, which have historically been fine-tuned for search, may be rebuilt on Kimi K2 as a foundation. That would be a natural evolution and could raise the quality of Perplexity’s core search features. It remains to be seen whether these updates will be formally announced or introduced quietly, but the growing number of references suggests they will arrive soon.

This approach aligns with Perplexity’s broader product strategy: integrating top-performing open models, fine-tuning them for search, and emphasizing compliance with regional data policies. Together, these differentiate its offering in the competitive AI search landscape.