Perplexity has introduced BrowseSafe-Bench, a benchmark and fine-tuned detection model aimed at strengthening the security of browser-based AI agents. The feature is targeted at researchers, browser developers, and organizations deploying AI agents within web environments, addressing the increasing risk of prompt injection and adversarial attacks in agentic browsing workflows. BrowseSafe-Bench and its associated model are publicly available for the research community to evaluate and improve security measures.

Today we're releasing BrowseSafe and BrowseSafe-Bench: an open-source detection model and benchmark to catch and prevent malicious prompt-injection instructions in real-time.https://t.co/TutfaBnTte

— Perplexity (@perplexity_ai) December 2, 2025

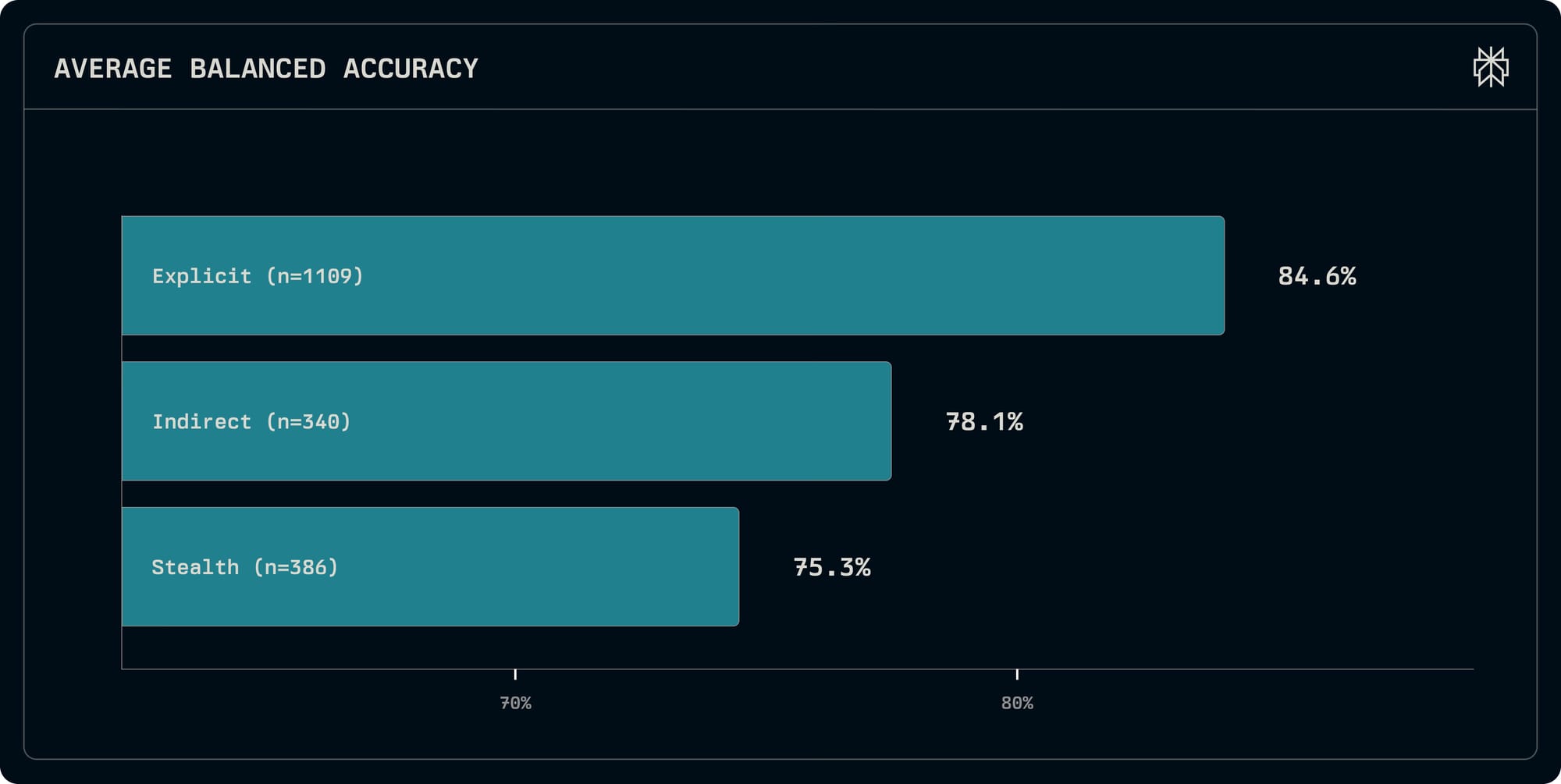

This release is notable because it provides a comprehensive evaluation environment for detecting complex, real-world attacks on browser agents, which can interpret and interact with web content on behalf of users. The benchmark simulates sophisticated threat scenarios by varying attack type, injection strategy, and linguistic style, reflecting the diversity and subtlety of actual adversarial payloads encountered online. The detection model, based on a Mixture-of-Experts architecture (Qwen-30B-A3B-Instruct-2507), demonstrates state-of-the-art performance (F1 0.91) while maintaining the speed required for real-time web browsing.

Perplexity’s approach combines defense-in-depth, including asynchronous hybrid detection and dynamic retraining via flagged boundary cases, to adapt to evolving threats. Early reactions from the research community highlight the benchmark's realism and the model’s robust performance. The availability of the dataset and model fosters collaboration and transparency, setting a new standard for browser agent security.