OpenAI has unveiled its latest advancements in AI reasoning with the introduction of the o3 and o3-mini models. These models represent a significant evolution from the earlier o1 model, focusing on deeper reasoning capabilities, improved performance in complex tasks, and enhanced safety measures.

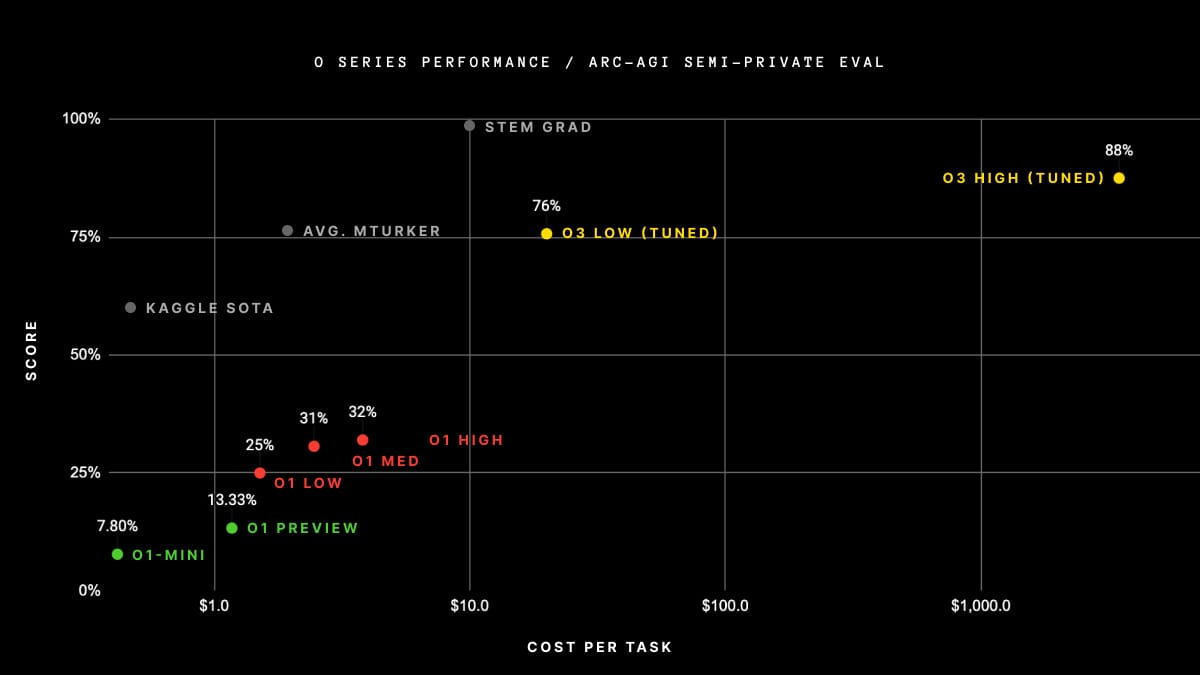

The o3 model is designed to tackle challenging problems in areas such as coding, mathematics, and scientific reasoning. It employs a "simulated reasoning" process, in which the model deliberates internally before generating a response. This approach resembles step-by-step human problem-solving and goes beyond the pattern recognition that earlier models relied on. The o3 model has achieved strong results on several benchmarks, including 96.7% on the 2024 American Invitational Mathematics Examination (AIME) and 87.7% on GPQA Diamond, a set of graduate-level science questions. It also performed exceptionally well on the ARC-AGI test, which measures an AI's ability to solve novel problems rather than ones it could have memorized during training.

> Today, we shared evals for an early version of the next model in our o-model reasoning series: OpenAI o3 pic.twitter.com/e4dQWdLbAD
>
> OpenAI (@OpenAI), December 20, 2024

The o3-mini, a distilled version of o3, is optimized for faster processing and lower computational cost while maintaining strong performance on reasoning tasks. It is particularly suited to coding applications and offers an adaptive thinking time feature with low, medium, and high settings, allowing users to trade response speed against reasoning depth based on their needs.
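As a rough illustration of how adjustable thinking time might look from a developer's side, the sketch below builds the arguments for a chat request and picks an effort level per task. It assumes the setting is exposed as a `reasoning_effort` field taking `"low"`, `"medium"`, or `"high"`, as in OpenAI's Chat Completions API for reasoning models; the announcement itself does not specify this. The helper `build_request` and the task names in `EFFORT_BY_TASK` are hypothetical, and no network call is made.

```python
from typing import Literal

Effort = Literal["low", "medium", "high"]

# Hypothetical mapping from a rough task category to an effort level.
# These task names are illustrative only and not part of any API.
EFFORT_BY_TASK: dict[str, Effort] = {
    "autocomplete": "low",        # favor latency
    "code_review": "medium",      # balance speed and depth
    "algorithm_design": "high",   # favor deeper reasoning
}


def build_request(prompt: str, task: str, model: str = "o3-mini") -> dict:
    """Return keyword arguments for a chat completion request.

    Assumption: the thinking-time setting is passed as `reasoning_effort`.
    Unknown task categories fall back to "medium".
    """
    effort: Effort = EFFORT_BY_TASK.get(task, "medium")
    return {
        "model": model,
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    request = build_request("Find the bug in this binary search.", "code_review")
    print(request["model"], request["reasoning_effort"])
```

With the official `openai` Python client, a dictionary like this could be passed as `client.chat.completions.create(**request)`, provided the account has access to the model. Keeping the effort choice in one small lookup table makes it easy to tune cost against answer quality without touching the calling code.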

OpenAI has also introduced a new safety training paradigm called deliberative alignment, which teaches the models to reason explicitly over human-written safety specifications before answering. The method is intended to keep the models within those specifications while improving their ability to handle complex prompts safely.

The o3 models are currently available for early access testing by safety and security researchers, with broader deployment planned for early 2025. OpenAI's cautious rollout underscores its commitment to aligning advanced AI systems with human values and societal benefits. These developments position o3 as a major step forward in AI reasoning and safety research.