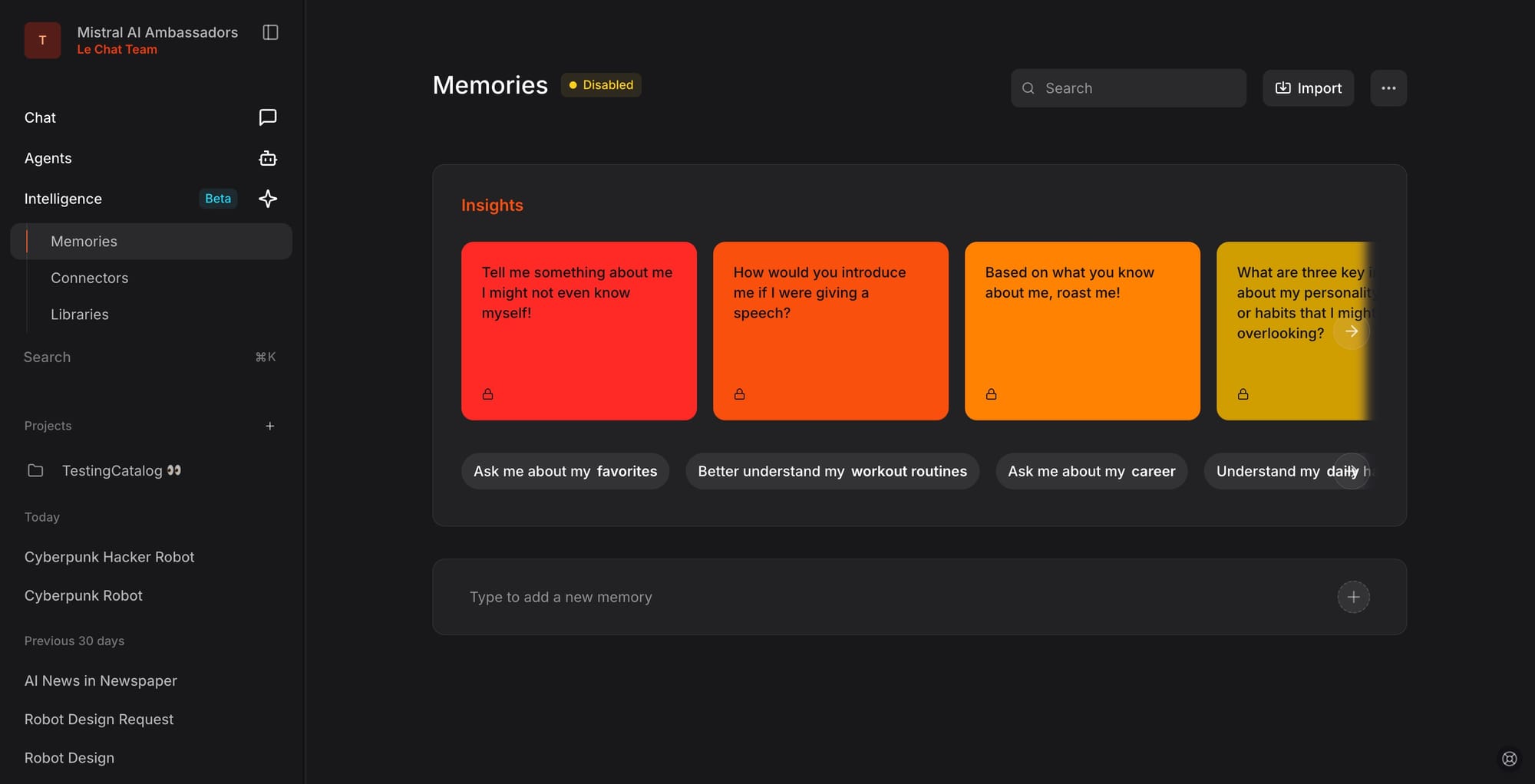

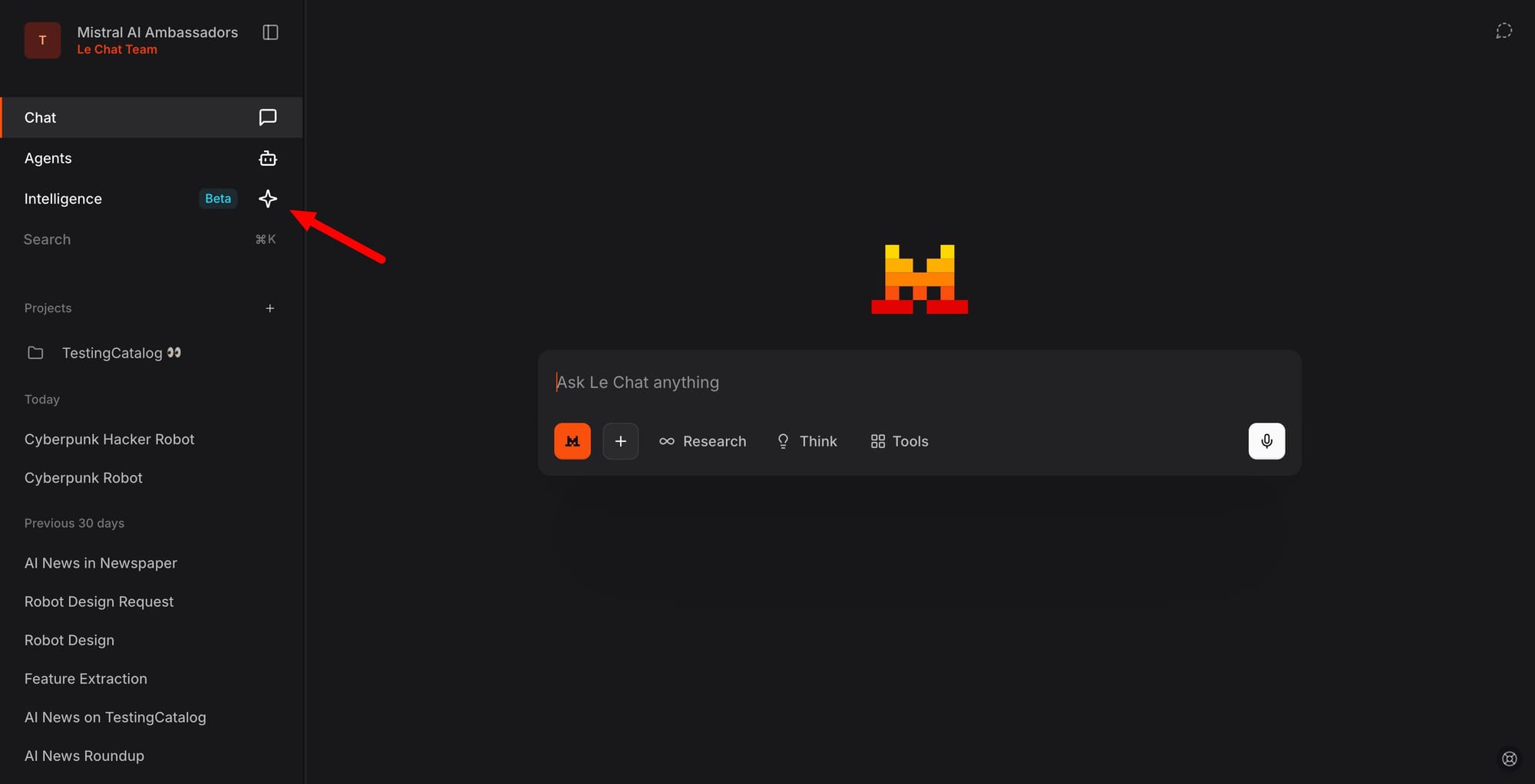

Mistral AI is preparing to roll out a memory system that will appear in the new Intelligence drop-down, bringing its assistant in line with how competing chat platforms already handle persistent data. Selecting the Memory tab brings up a list of stored memories, and once a user reaches ten entries, additional quick prompts unlock. These prompts can ask the assistant to summarize personal details inferred from stored data, which points to a more personalized style of conversation. If enabled, this memory layer will feed into live chats, adjusting responses based on prior interactions.

The discovery suggests Mistral is moving toward long-term personalization, a feature already central to OpenAI’s and Anthropic’s strategies. The approach benefits regular users who want continuity across sessions, but it also introduces a system in which previously saved context can steer how later prompts evolve. Placement inside the Intelligence selector signals that Mistral treats memory as a core mode, not just a background toggle.

> Mistral Medium 3.1 just landed on @lmarena_ai leaderboard—punching way above its weight!
>
> 🏆 #1 in English (no Style Control)
>
> 🏆 2nd overall (no Style Control)
>
> 🏆 Top 3 in Coding & Long Queries
>
> 🏆 8th overall
>
> Small model. Big impact. Try it now on Le Chat and the API! pic.twitter.com/nwJCHRvX5D
>
> — Mistral AI (@MistralAI) August 22, 2025

This development comes shortly after the Mistral Medium 3.1 upgrade, which took the model to first place in the LM Arena rankings for English prompts without style control, second overall in that same setting, and eighth overall with style control active. That jump underscored the technical maturity of the release and positioned Mistral as a stronger peer to larger labs. With memory on top, the company extends its model beyond raw benchmark performance toward practical, adaptive use, which could reinforce adoption across both casual and professional settings.