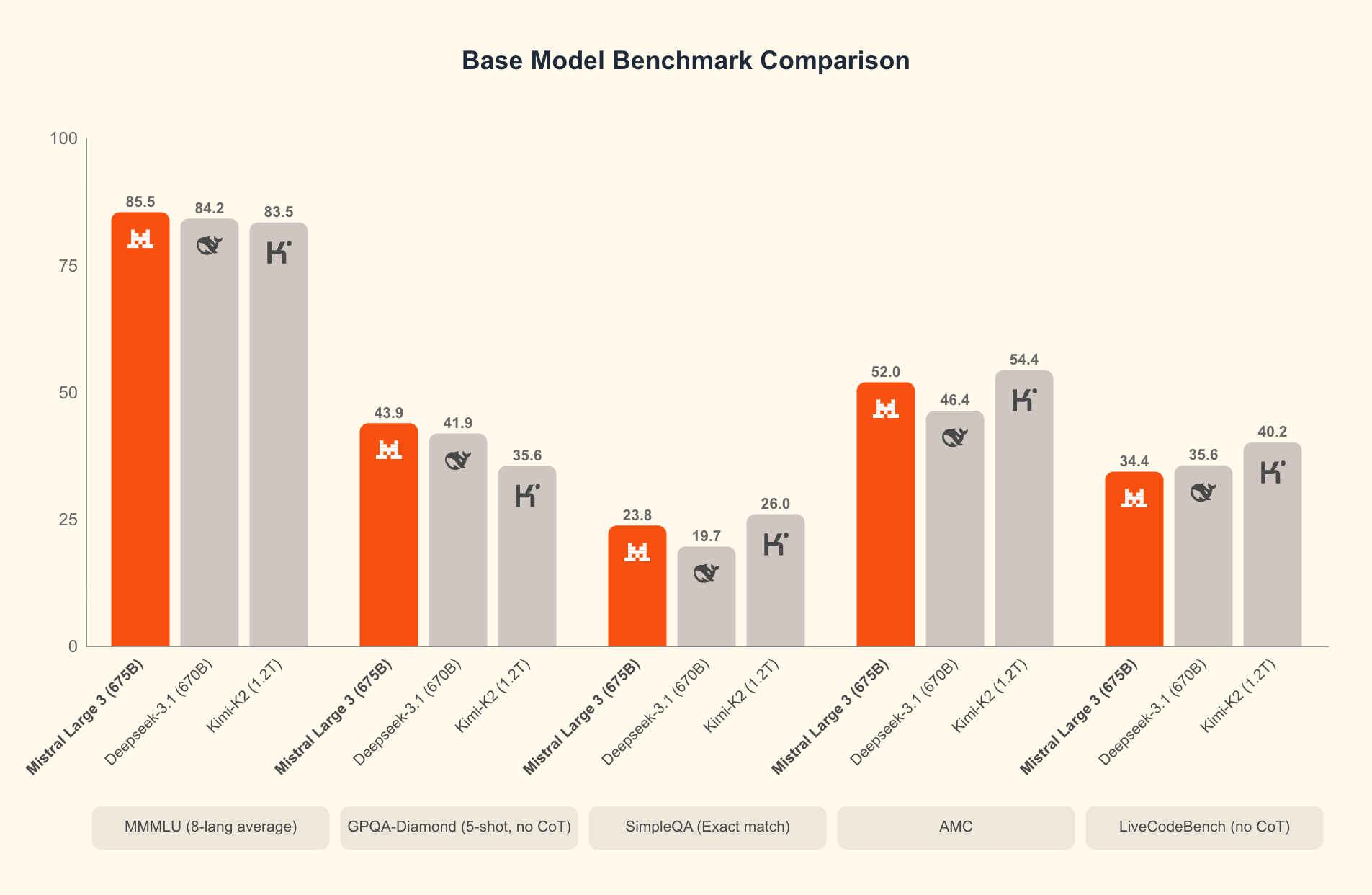

Mistral AI has launched the Mistral 3 model family, targeting developers, enterprises, and the open-source AI community. The release includes three compact, dense Ministral 3 models (3B, 8B, and 14B parameters) and the flagship Mistral Large 3, a sparse mixture-of-experts model with 41B active and 675B total parameters. All models are released under the Apache 2.0 license and are available immediately on major platforms, including Mistral AI Studio, Amazon Bedrock, Azure Foundry, Hugging Face, Modal, IBM WatsonX, OpenRouter, Fireworks, Unsloth AI, and Together AI, with more platforms to follow.

Introducing the Mistral 3 family of models: Frontier intelligence at all sizes. Apache 2.0. Details in 🧵 pic.twitter.com/lsrDmhW78u

— Mistral AI (@MistralAI) December 2, 2025

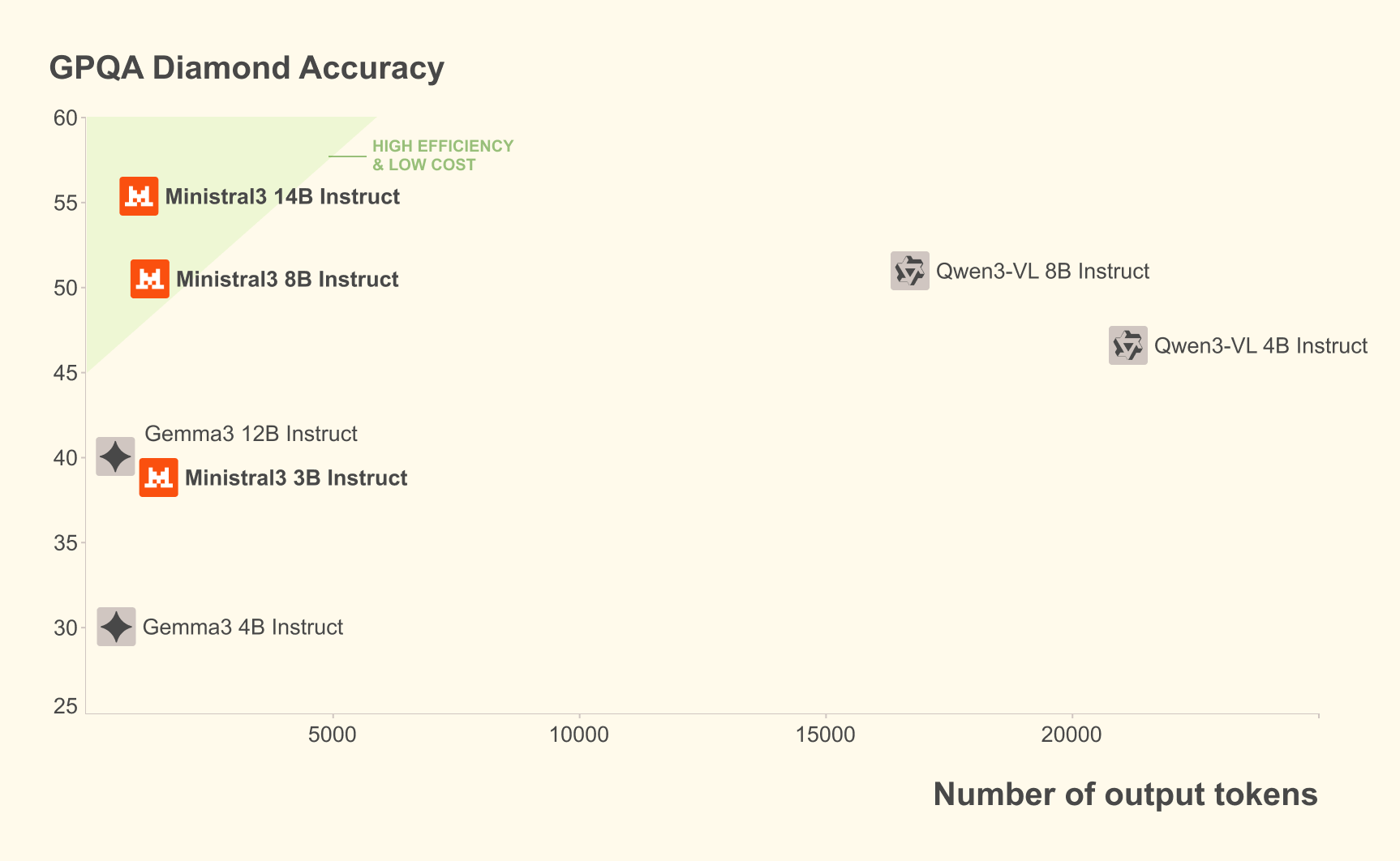

Mistral Large 3 was trained on 3,000 NVIDIA H200 GPUs and incorporates Blackwell attention and mixture-of-experts kernels. It is reported to score well on multilingual and multimodal tasks. The Ministral 3 series, which scales from edge devices to the data center, comes in base, instruct, and reasoning variants; each supports image understanding and is positioned for a strong cost-performance ratio. For deployment, the models are provided in NVFP4 format, optimized for vLLM and NVIDIA hardware, for efficient inference across a range of GPUs and edge devices.
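As a rough illustration of what the parameter counts and the NVFP4 format imply for deployment, the sketch below estimates the share of Mistral Large 3's parameters that is active per token and the approximate size of its weights. The function names are hypothetical, the BF16 baseline is included only for comparison, and the figure of 4.5 bits per weight for NVFP4 is an assumption (4-bit values plus one 8-bit scale per 16-value block). The estimate ignores activations, the KV cache, and any layers kept at higher precision, so treat the results as order-of-magnitude guidance rather than published numbers.

```python
# Back-of-the-envelope sizing for a sparse mixture-of-experts model.
# Parameter counts come from the article; everything else is an assumption.

TOTAL_PARAMS = 675e9   # total parameters of Mistral Large 3 (from the article)
ACTIVE_PARAMS = 41e9   # parameters active per token (from the article)

BF16_BITS = 16.0               # comparison baseline, not stated in the article
NVFP4_BITS = 4.0 + 8.0 / 16.0  # assumption: 4-bit values + 8-bit scale per 16 values


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters used for each token."""
    return active / total


def weight_gigabytes(params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (10^9 bytes), weights only."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    print(f"Active share per token: {share:.1%}")
    print(f"Weights at BF16:  {weight_gigabytes(TOTAL_PARAMS, BF16_BITS):,.0f} GB")
    print(f"Weights at NVFP4: {weight_gigabytes(TOTAL_PARAMS, NVFP4_BITS):,.0f} GB")
```

Under these assumptions, only about 6% of the parameters participate in each token's computation, while the full set of weights still has to be stored. This is why a low-precision format matters more for memory footprint than for per-token compute.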

Mistral AI is a French company focused on open, scalable AI solutions and has rapidly gained attention for its commitment to permissive licensing and broad accessibility. The company collaborates closely with NVIDIA, Red Hat, and vLLM to optimize both training and deployment. Early industry reactions highlight the open weights, multilingual support, and adaptability to custom workflows as standout features for enterprise and developer use cases.