LMArena has announced the launch of AI Evaluations, a commercial product designed for enterprises, model labs, and developers who need robust assessment tools for their AI models. This service provides detailed evaluations grounded in real-world human feedback by leveraging a large-scale community of over 3 million monthly users and more than 250 million recorded conversations. It offers comprehensive analytics, auditability through representative feedback samples, and service-level agreements to ensure timely delivery of results. AI Evaluations is available to commercial clients as well as open source teams, with flexible pricing models that extend access to nonprofit organizations and ensure broad participation.

> At LMArena, our mission is to improve the reliability of AI systems.
>
> Today, we’re introducing an evaluation product to analyze human–AI interactions at scale, turning their complexity into insights the ecosystem can learn from to make AI more effective.
>
> Our AI Evaluation… pic.twitter.com/744c5pvzHO
>
> lmarena.ai (@lmarena_ai), September 16, 2025

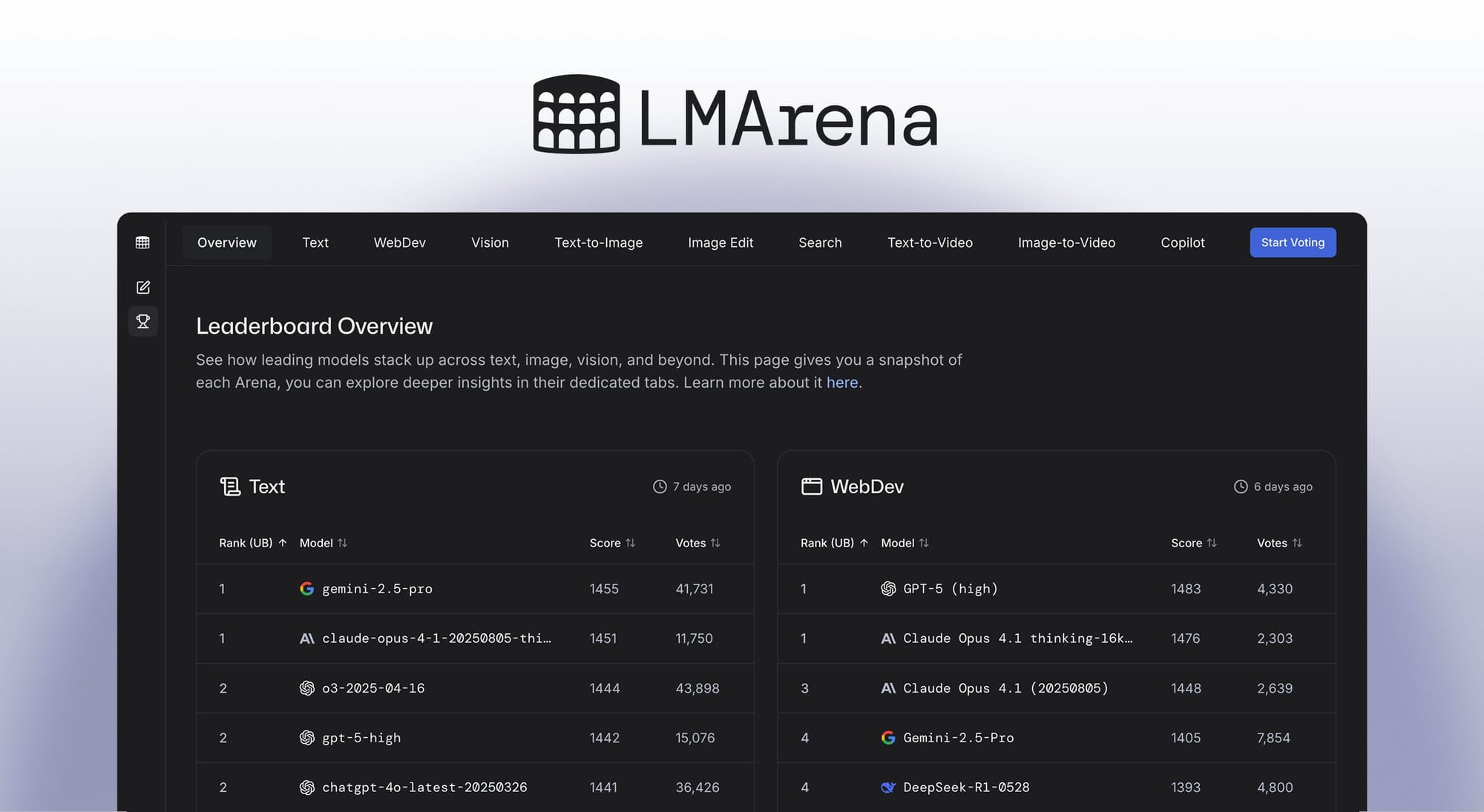

The rollout of AI Evaluations marks a shift for LMArena as it moves into commercial services while maintaining its public commitments to neutrality, breadth, and openness. All models, whether commercial or open source, are evaluated using the same methodology, and a significant portion of resources is reserved for open models. The service includes public leaderboards and head-to-head model comparisons, and it will continue to expand with more features in the coming weeks. Early users appreciate the transparency and scale of the evaluation process, while industry insiders note that integrating real user feedback at this scale sets LMArena apart from other evaluation platforms.

LMArena, known for its transparent and community-driven approach to AI model benchmarking, aims to build trust in AI systems by making evaluation data and research openly accessible. The company has become a resource for labs seeking to assess and improve their models, as well as for developers looking to select the most reliable AI solutions for their needs.