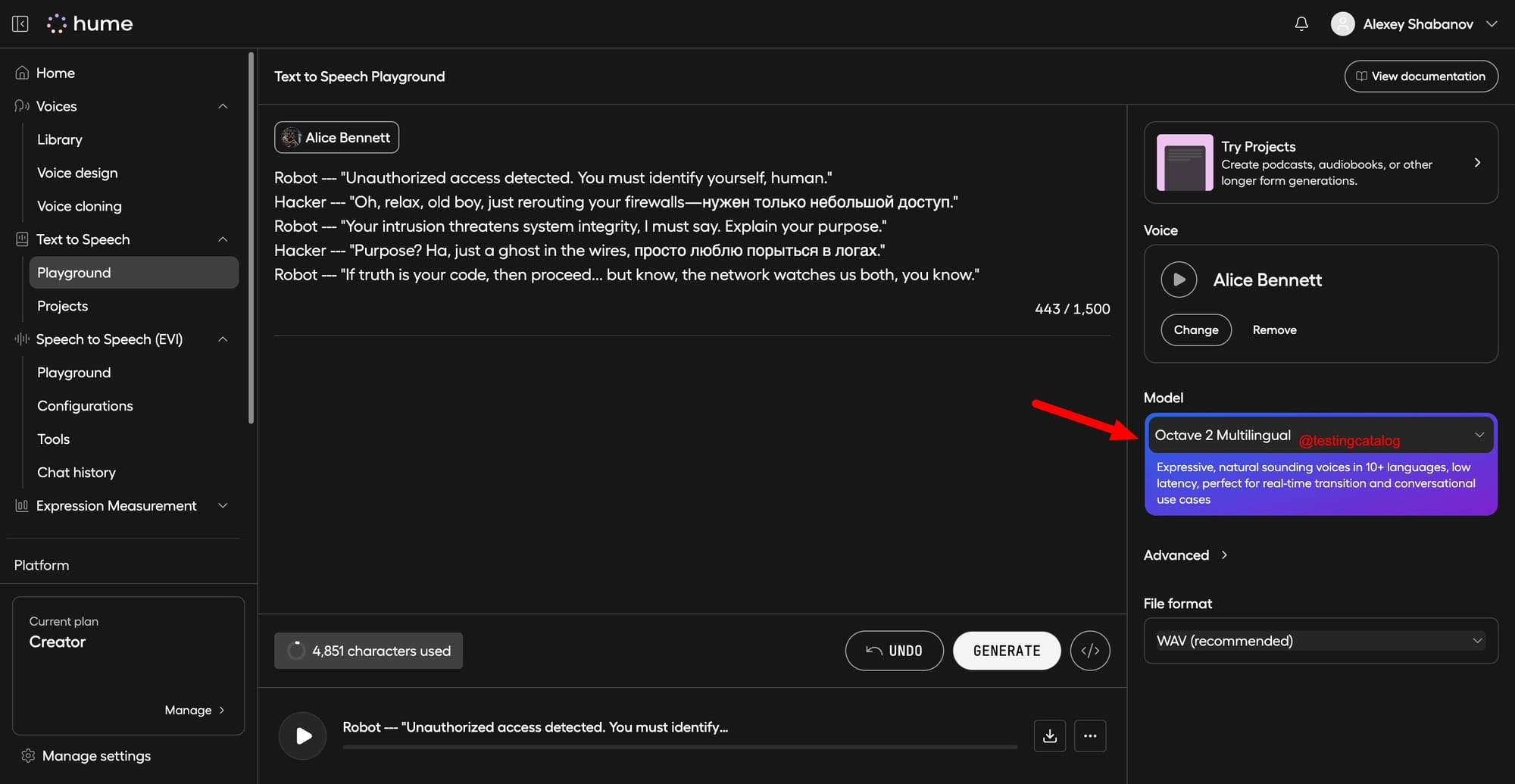

Hume AI is preparing to launch Octave 2 Multilingual, the successor to its original Octave text-to-speech model. Octave 2 supports more than 10 languages, a major expansion beyond the first model's focus on emotionally expressive English voices. The model's description highlights expressive, natural-sounding voices with low latency. That makes it suited to use cases that need fast, real-time voice generation, such as live translation, voicebots, and conversational interfaces.

Example: "Robot & Russian hacker dialogue"

The new model is positioned to serve a wide range of users, from developers building multilingual apps and real-time translation tools to creators producing podcasts or audiobooks in several languages. One of its key advances is the ability to switch between languages while still sounding convincingly human, even in languages with distinctive phonetics such as Russian. In early side-by-side comparisons, Octave 2 reportedly produces more natural-sounding audio than its predecessor, to the point that it can be hard to tell apart from a human speaker. That is a notable result for any AI voice system.

Comparison: Octave 2 vs Octave 1

The Octave 2 Multilingual model is not yet publicly available, but it has surfaced in early internal and hidden tests, which suggests a public release is close. Hume AI has not published documentation or made an announcement. The details above come from testing the model and comparing its generated outputs. The model fits Hume AI's broader product direction, which centers on emotion-rich, context-aware AI voices. If Octave 2's fast response times and language flexibility hold up at scale, it could quickly draw both commercial and research interest, especially as demand grows for tools that handle real-time, multilingual audio.

As the rollout nears, developers and early adopters should watch closely for further updates and public demos.