Google has launched TranslateGemma, a suite of open machine translation models built on the Gemma 3 architecture. TranslateGemma comes in three parameter sizes: 4 billion, 12 billion, and 27 billion. The models support translation across 55 languages, spanning high-, mid-, and low-resource languages, and are openly available to developers and organizations worldwide. This range of sizes lets the models run on hardware from mobile devices to high-performance computing environments, so developers can pick a variant that fits their hardware constraints.

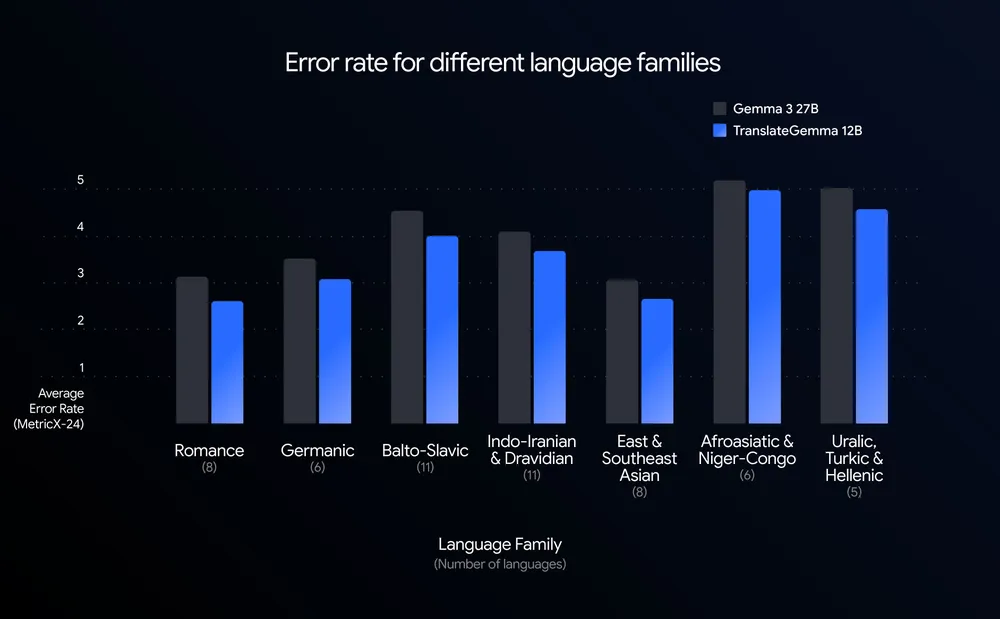

TranslateGemma stands out by delivering translation quality that rivals or exceeds that of much larger models. On the WMT24++ benchmark, the 12B model outperforms the Gemma 3 27B baseline as measured by MetricX, a learned metric where lower scores indicate fewer translation errors. The compact 4B model matches the Gemma 3 12B baseline, which makes it suitable for resource-constrained deployments. The models distill knowledge from Google's most capable language models, which reduces translation errors relative to the Gemma 3 baselines. For developers, this means comparable or better accuracy from fewer parameters, and therefore lower compute requirements and lower latency.
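To make "fewer parameters, lower compute requirements" concrete, the sketch below estimates how much memory the model weights alone occupy at each size. It assumes nominal counts of exactly 4, 12, and 27 billion parameters and three common numeric precisions (bf16, int8, int4). It is not based on published TranslateGemma checkpoint sizes. It also ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
# Back-of-the-envelope estimate of weight memory for each model size.
# Assumptions: nominal parameter counts (real checkpoints differ slightly);
# weights only, excluding activations, KV cache, and framework overhead.

BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantized weights
    "int4": 0.5,  # 4-bit quantized weights
}

MODEL_SIZES = {
    "4B": 4e9,
    "12B": 12e9,
    "27B": 27e9,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def memory_table() -> dict[str, dict[str, float]]:
    """Weight memory for every (model size, precision) pair."""
    return {
        name: {prec: weight_memory_gb(n, prec) for prec in BYTES_PER_PARAM}
        for name, n in MODEL_SIZES.items()
    }


if __name__ == "__main__":
    for name, row in memory_table().items():
        cells = ", ".join(f"{prec}: {gb:.1f} GB" for prec, gb in row.items())
        print(f"{name}: {cells}")
```

Under these assumptions, the 4B model needs roughly 8 GB in bf16, or about 2 GB at 4-bit. The 27B model needs roughly 54 GB in bf16. The gap shows why the smaller variants suit resource-constrained deployments.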

Google’s release of TranslateGemma reflects its ongoing commitment to open AI research and to making language technology widely accessible. By bringing high-quality translation to a broad audience, Google aims to support cross-lingual communication and to meet the needs of developers looking for efficient, scalable solutions for multilingual applications.