Google has announced the release of FunctionGemma, a version of its Gemma 3 270M model specialized for function calling. The model is publicly available and targets developers building on-device agents that translate natural language into executable API actions. FunctionGemma is engineered to run efficiently on edge devices such as the NVIDIA Jetson Nano and modern mobile phones, and it uses a 256k vocabulary to handle JSON and multilingual inputs. It is integrated with popular development and deployment frameworks, including Hugging Face Transformers, Keras, NVIDIA NeMo, LiteRT-LM, vLLM, MLX, Ollama, Vertex AI, and LM Studio.

Solve fun physics simulation puzzles in FunctionGemma Physics Playground. The game runs locally in your browser and is powered with Transformers.js by @huggingface: https://t.co/YtmZvF02xX

— Google AI Developers (@googleaidevs) December 18, 2025

FunctionGemma stands out by unifying structured function calls with conversational abilities, allowing it to both execute commands and relay results to users in natural language. Fine-tuning has been shown to substantially improve reliability, with accuracy on mobile action tasks rising from 58% to 85%. This positions FunctionGemma as a robust foundation for custom, fast, and private local agents that can automate tasks ranging from reminders to system settings, and even run fully offline for privacy and low latency. The model can act independently for local tasks or as part of a larger system, routing complex actions to more powerful models if needed.
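The local-first routing pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not FunctionGemma's actual interface: it assumes the model's structured call has already been parsed into a JSON object with `name` and `arguments` keys, and the tool names (`set_reminder`, `set_brightness`) and the `escalate` helper are hypothetical stand-ins. The model's real output format may differ and would need its own parser.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical local tools; names and signatures are illustrative only.
def set_reminder(text: str, time: str) -> str:
    return f"Reminder set for {time}: {text}"


def set_brightness(level: int) -> str:
    return f"Brightness set to {level}%"


LOCAL_TOOLS: Dict[str, Callable[..., str]] = {
    "set_reminder": set_reminder,
    "set_brightness": set_brightness,
}


def escalate(call: Dict[str, Any]) -> str:
    """Placeholder for handing the request to a larger, more capable model."""
    return f"Escalated '{call.get('name')}' to a larger model"


def dispatch(model_output: str) -> str:
    """Run a structured call locally if possible, otherwise escalate it."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        # Not a structured call: treat it as a plain conversational reply.
        return model_output
    if not isinstance(call, dict) or "name" not in call:
        return model_output
    handler = LOCAL_TOOLS.get(call["name"])
    if handler is None:
        # No local tool matches, so route the request onward.
        return escalate(call)
    return handler(**call.get("arguments", {}))


if __name__ == "__main__":
    print(dispatch('{"name": "set_brightness", "arguments": {"level": 40}}'))
    print(dispatch('{"name": "book_flight", "arguments": {}}'))
    print(dispatch("Sure, I can help with that."))
```

In a real agent, the string returned by the handler would typically be passed back to the model so it can relay the result to the user in natural language.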

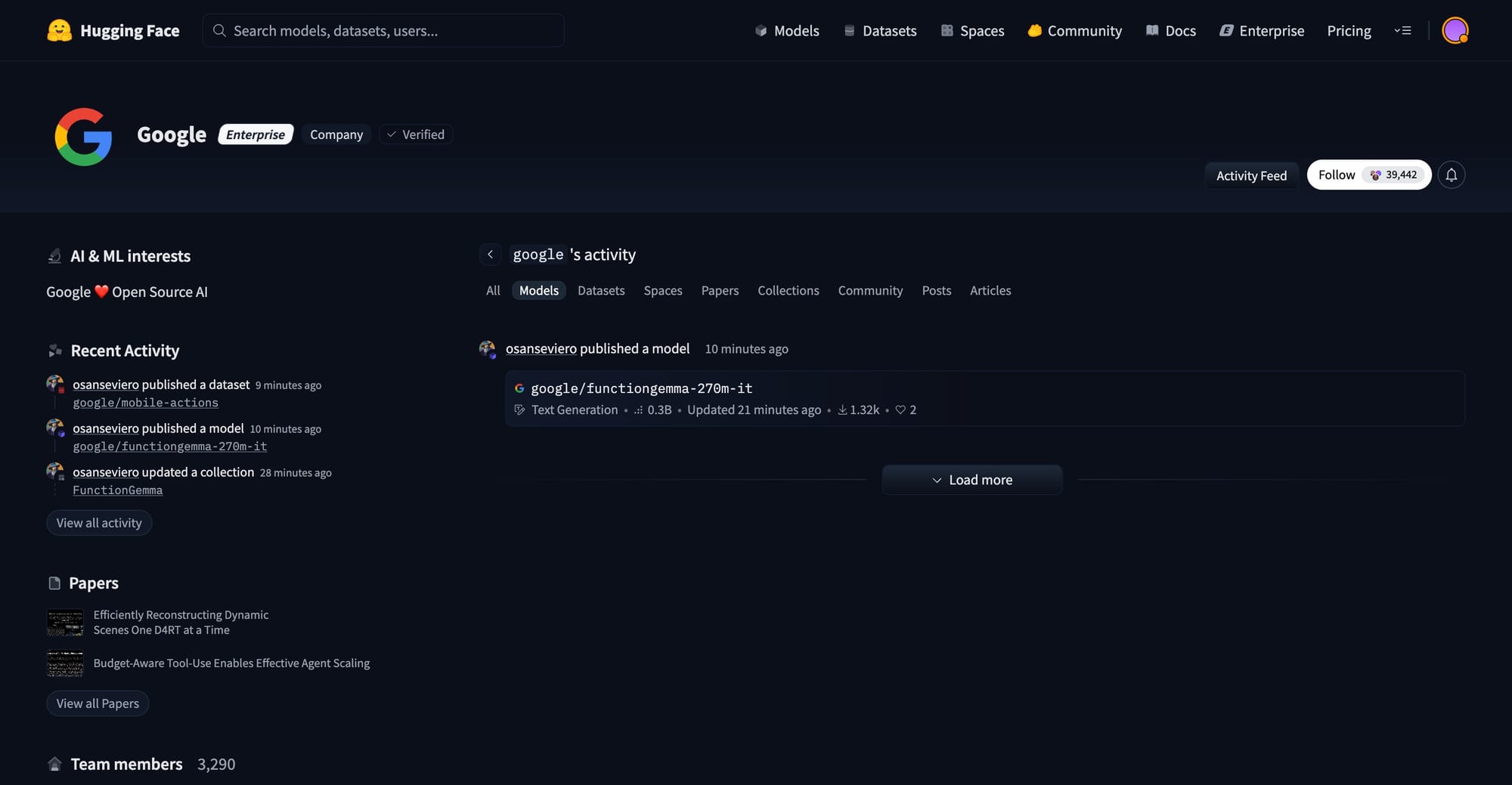

Google, the company behind FunctionGemma, has rapidly expanded its Gemma model lineup over the past year, and the models have now surpassed 300 million downloads. The new release aligns with Google’s commitment to advancing open models and to supporting developers who build AI agents that go beyond simple conversation to take action in real-world applications.