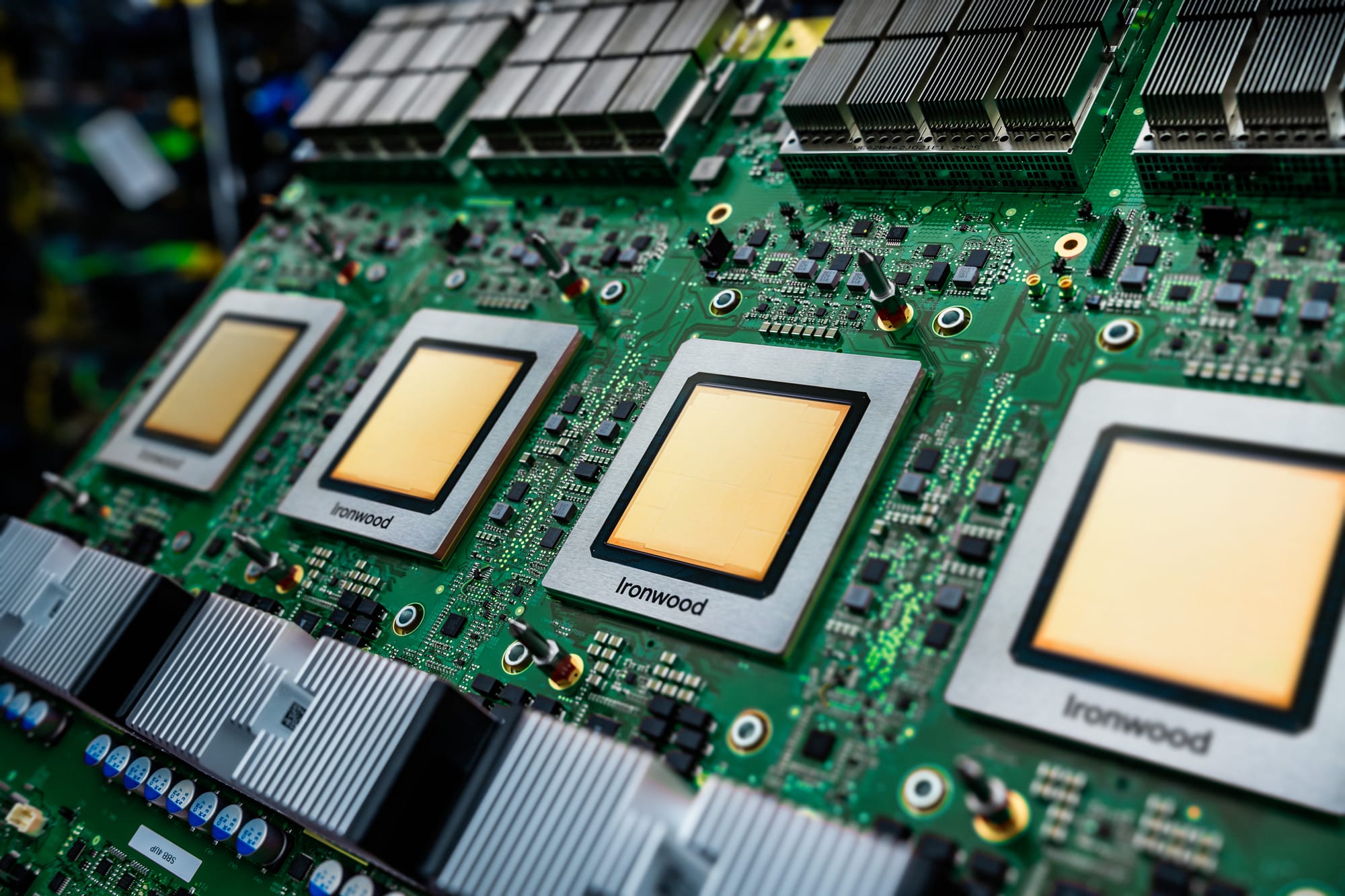

On November 6, 2025, Google Cloud announced Ironwood, its seventh-generation TPU, alongside new Axion instances. Ironwood is designed for large-scale training, complex reinforcement learning, and high-volume, low-latency serving, and it is expected to reach general availability in the coming weeks. The Axion N4A, an Arm-based virtual machine optimized for price-performance, is in preview now, and C4A metal, a bare-metal Arm instance, will enter preview soon. The target audience includes AI labs, SaaS platforms, and enterprises shifting their spending from training to inference at scale.

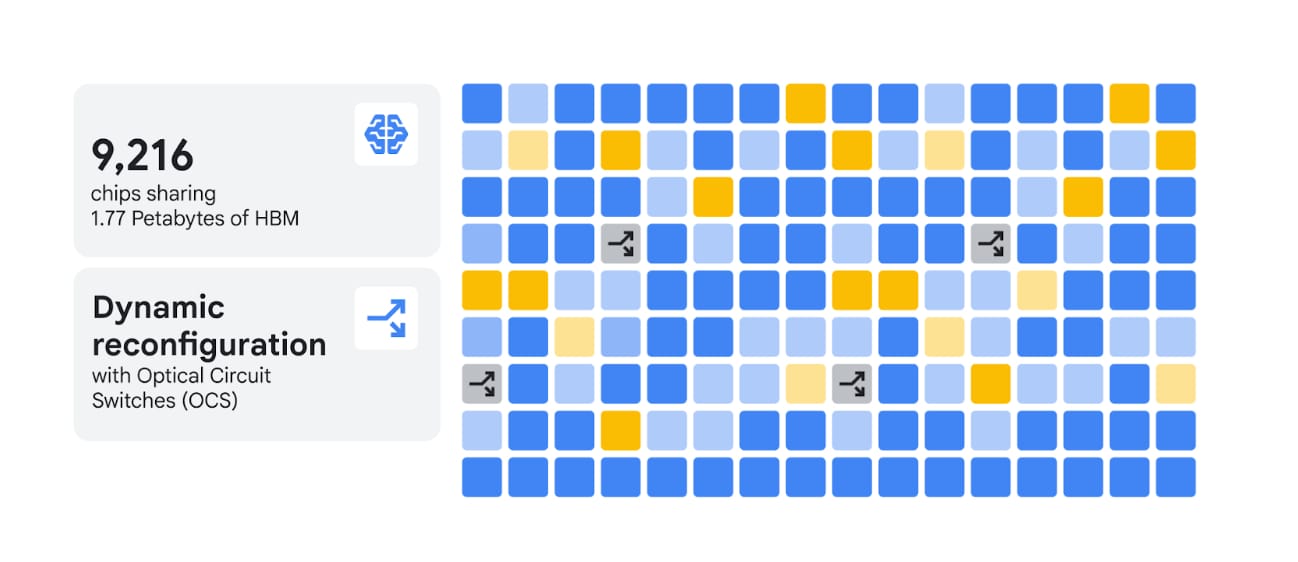

Ironwood delivers a 10× peak performance improvement over TPU v5p and more than 4× better per-chip performance than TPU v6e (Trillium) for both training and inference. A single pod connects up to 9,216 chips over a 9.6 Tb/s Inter-Chip Interconnect and provides 1.77 PB of shared HBM. Optical Circuit Switching reroutes traffic around faults, and pods can scale out into multi-pod clusters. Google claims 118× more FP8 ExaFLOPS at the pod level than its nearest competitor, positioning Ironwood for serving frontier models.
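As a quick sanity check on the pod figures, dividing the stated 1.77 PB of shared HBM by 9,216 chips gives the implied memory per chip. This is a back-of-the-envelope sketch, not an official per-chip specification: it assumes decimal units (1 PB = 10^6 GB) and that the shared HBM is spread evenly across every chip in the pod.

```python
# Pod-level figures as stated in the announcement.
CHIPS_PER_POD = 9_216
POD_HBM_PB = 1.77  # shared HBM per pod, in petabytes (decimal units assumed)


def hbm_per_chip_gb(pod_hbm_pb: float, chips: int) -> float:
    """Return the implied HBM per chip in decimal gigabytes.

    Assumes the pod's HBM is divided evenly across all chips.
    """
    pod_hbm_gb = pod_hbm_pb * 1_000_000  # 1 PB = 10^6 GB (decimal)
    return pod_hbm_gb / chips


per_chip = hbm_per_chip_gb(POD_HBM_PB, CHIPS_PER_POD)
print(f"{per_chip:.1f} GB per chip")  # prints "192.1 GB per chip"
```

The result, roughly 192 GB per chip, shows how the 1.77 PB headline number follows from aggregating per-chip HBM across a full 9,216-chip pod.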

Our 7th gen TPU Ironwood is coming to GA! It’s our most powerful TPU yet: 10X peak performance improvement vs. TPU v5p, and more than 4X better performance per chip for both training + inference workloads vs. TPU v6e (Trillium). We use TPUs to train + serve our own frontier… pic.twitter.com/HvNt2VHtFX

— Sundar Pichai (@sundarpichai) November 6, 2025

Software co-design plays a central role. MaxText adds supervised fine-tuning (SFT) and Group Relative Policy Optimization (GRPO) paths; vLLM support lets teams switch between GPUs and TPUs with minimal configuration changes; and, according to Google, GKE Inference Gateway cuts time-to-first-token by up to 96% and serving costs by up to 30%.

On the customer side, Anthropic plans to access up to 1 million TPUs, and Lightricks reports quality improvements in its generative AI media. On Axion, Vimeo saw roughly 30% better transcoding performance, ZoomInfo observed about 60% better price-performance, and Rise reduced its compute by about 20%.

The N4A offers up to 64 vCPUs, 512 GB of DDR5 memory, and 50 Gbps of networking bandwidth, while C4A metal targets hypervisors, native Arm development, and large test farms.

Google’s long track record in custom silicon underpins this launch. Over the past decade, it has built TPUs, Video Coding Units (VCUs) for YouTube, and five generations of Tensor processors for Pixel phones, all using system-level co-design. The first TPU predates the Transformer architecture, which was introduced eight years ago. Titanium storage, advanced liquid cooling deployed at gigawatt scale, and approximately 99.999% fleet uptime since 2020 back today’s claims on cost, scale, and reliability across the AI Hypercomputer stack.

Availability: Ironwood will be generally available in the coming weeks; Axion N4A is in preview now; C4A metal will be in preview soon.