Anthropic is quietly expanding its Workbench toolkit, the sandbox developers use to test the Claude API and refine prompt strategies. Hidden features discovered in recent builds offer a look at Anthropic’s near-term plans for making its ecosystem friendlier to both developers and product teams working with generative AI.

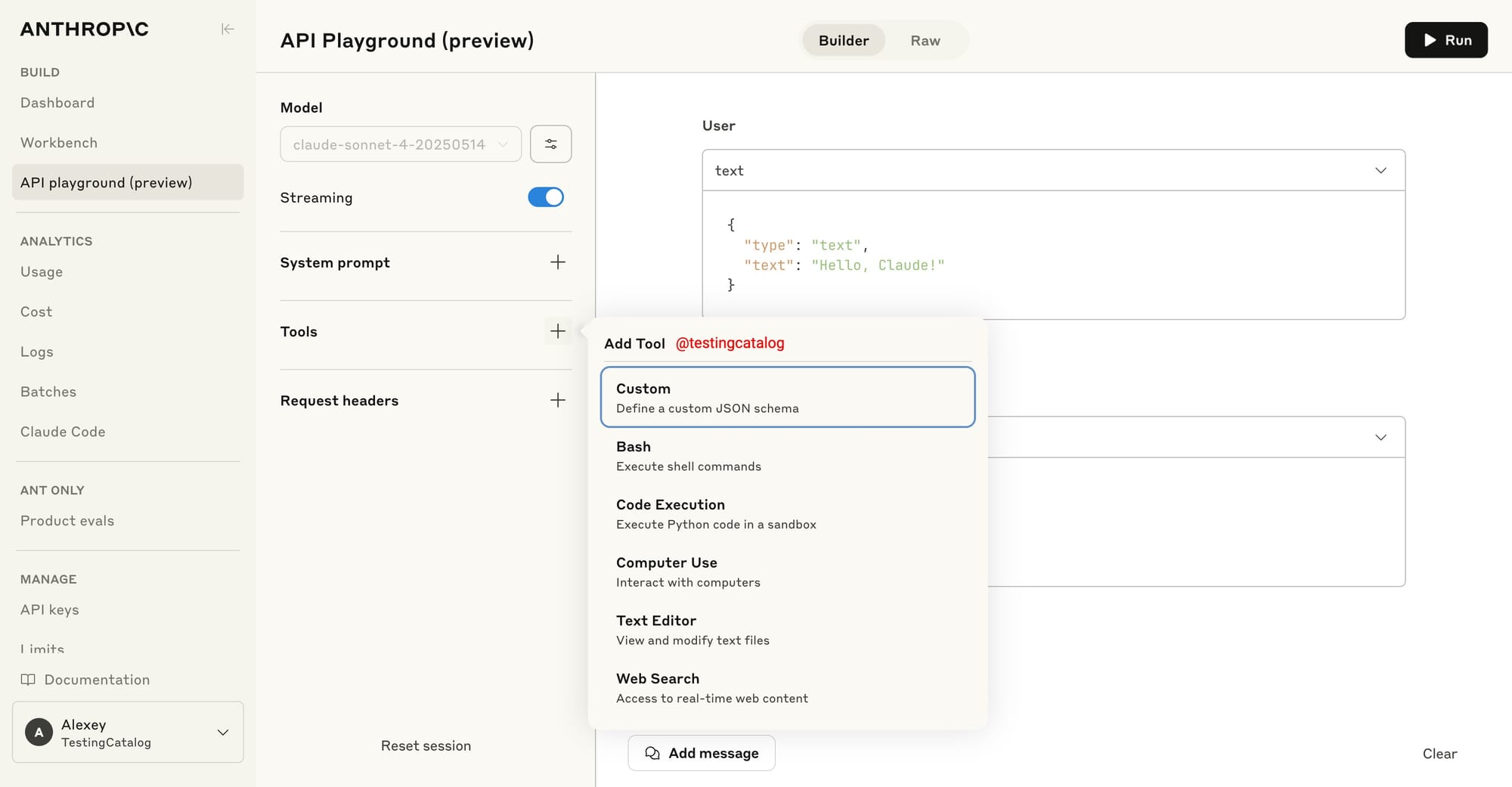

One key addition is the API Playground, flagged as a preview feature. The playground aims to let users experiment with Claude’s API directly through a graphical interface: adjusting temperature, adding custom headers, and toggling options like caching, all without writing code or reaching for external tools. For API consumers, especially those evaluating Claude’s outputs before full-scale integration, this should streamline iterative testing and parameter tuning right in the Workbench’s UI.
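For context, here is a rough sketch of the manual work such a playground would replace: assembling a Messages API request by hand with a temperature setting, an extra header, and prompt caching switched on for the system prompt. The endpoint, the `anthropic-version` header, and the `cache_control` field follow Anthropic's public API documentation. The helper names (`build_request`, `send`), the `x-trace-id` header, and the model placeholder are illustrative assumptions, not anything shown in the Workbench preview.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
MODEL = "claude-model-placeholder"  # assumption: substitute a real model ID


def build_request(prompt, *, api_key, temperature=1.0, system_prompt=None,
                  cache_system_prompt=False, extra_headers=None):
    """Assemble (but do not send) a Messages API request as a plain dict."""
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")

    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    # Custom headers (hypothetical example: a trace ID) are merged in last.
    headers.update(extra_headers or {})

    body = {
        "model": MODEL,
        "max_tokens": 256,
        "temperature": temperature,
        "messages": [{"role": "user", "content": prompt}],
    }
    if system_prompt:
        block = {"type": "text", "text": system_prompt}
        if cache_system_prompt:
            # Marks the system prompt as a cacheable prefix.
            block["cache_control"] = {"type": "ephemeral"}
        body["system"] = [block]

    return {"url": API_URL, "headers": headers, "body": body}


def send(request):
    """POST a request built by build_request and return the parsed JSON reply."""
    req = urllib.request.Request(
        request["url"],
        data=json.dumps(request["body"]).encode("utf-8"),
        headers=request["headers"],
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Build a request locally and inspect it; nothing is sent over the network.
    example = build_request(
        "Summarize this changelog in two sentences.",
        api_key="YOUR_API_KEY",
        temperature=0.2,
        system_prompt="You are a concise release-notes assistant.",
        cache_system_prompt=True,
        extra_headers={"x-trace-id": "demo-123"},
    )
    print(json.dumps(example["body"], indent=2))
```

Each parameter change here means editing code and re-running it, which is the loop a graphical playground would presumably shorten.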

BREAKING 🚨: Upcoming API Playground preview on Anthropic Workbench and a new analytics page for Claude Code.

— TestingCatalog News 🗞 (@testingcatalog) July 12, 2025

What % of your code is AI-generated?

"coming soon" 👀 https://t.co/SZ9EWVeg6r pic.twitter.com/jNitYSMNEg

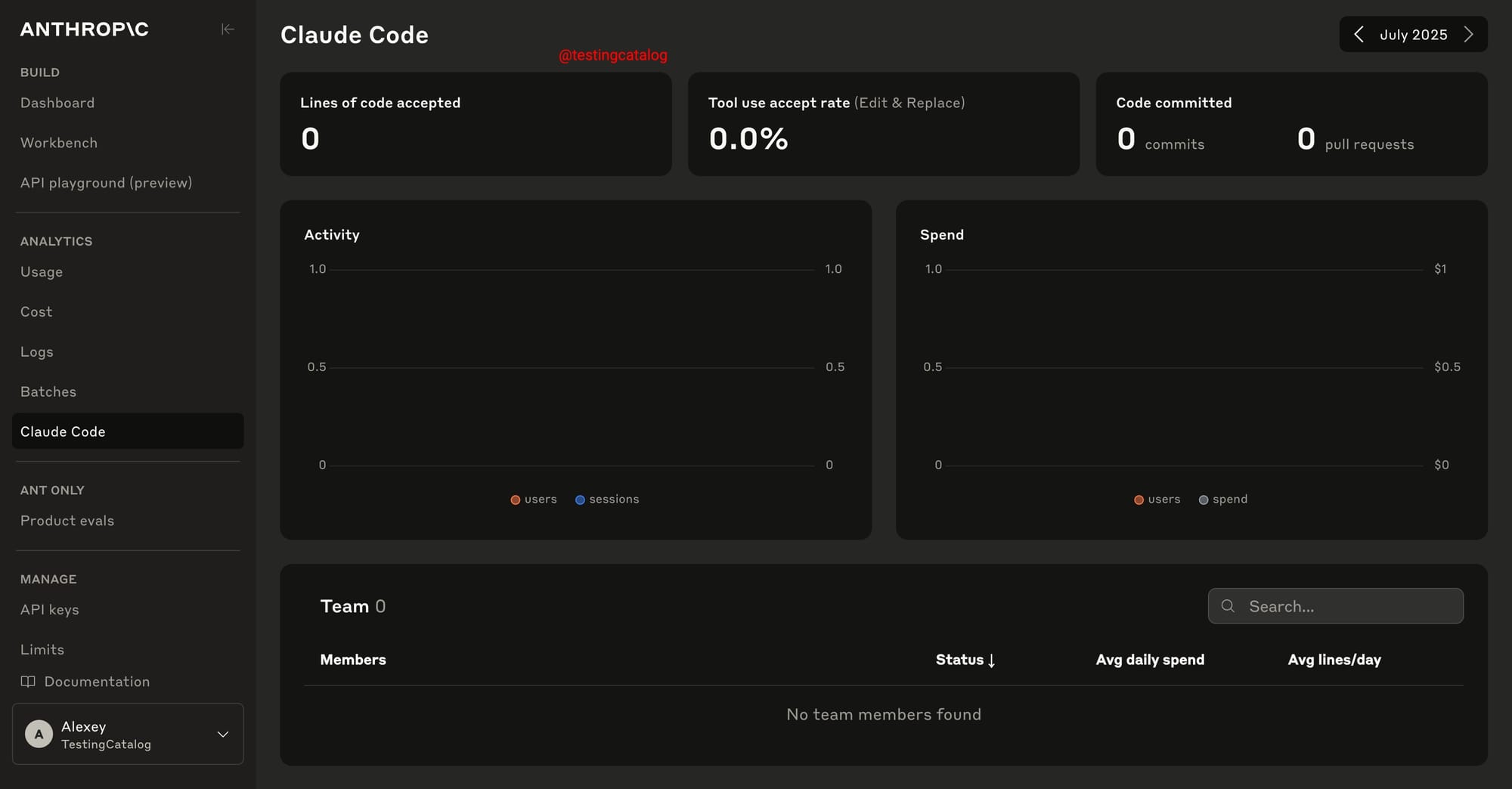

Another feature in the works is an Analytics page for Claude Code, Anthropic’s command-line coding tool, which can generate code and commit it directly to repositories. The new analytics panel is expected to display statistics such as the number of commits produced via Claude Code, giving organizations new visibility into how much of their code is being authored through AI. This metric could become relevant for engineering managers tracking code provenance and quantifying AI’s contribution to projects, though the exact methodology for measuring it hasn’t been disclosed.
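Since Anthropic has not said how the panel will count AI-authored commits, the following is only a guess at how a team could approximate a similar figure on its own today. It assumes that commits made through Claude Code carry a `Co-Authored-By: Claude` trailer in the commit message. That marker is an assumption about a repository's conventions, not the disclosed methodology, and the function names are hypothetical.

```python
import subprocess

# Assumption: AI-assisted commits carry this trailer (matched case-insensitively).
MARKER = "co-authored-by: claude"


def ai_commit_share(messages, marker=MARKER):
    """Return (ai_commits, total_commits, share) for a list of commit messages."""
    total = len(messages)
    if total == 0:
        return 0, 0, 0.0
    ai = sum(1 for message in messages if marker in message.lower())
    return ai, total, ai / total


def read_commit_messages(repo_path="."):
    """Read full commit messages from a local git repository.

    Each message is followed by a NUL byte (%x00) so that multi-line
    messages can be split apart reliably.
    """
    output = subprocess.run(
        ["git", "-C", repo_path, "log", "--format=%B%x00"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return [m.strip() for m in output.split("\x00") if m.strip()]
```

Inside a repository, `ai_commit_share(read_commit_messages("."))` returns the tagged-commit count, the total, and the ratio. A commit count is a coarse proxy: it ignores commit size, and it misses AI-assisted changes that were committed without the trailer.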

A third section, labeled Product Evals, is also present in the interface, but it is currently marked “coming soon” and details remain scarce. Taken together, these additions signal Anthropic’s intent to close the gap with the developer suites from OpenAI and Google, both of which have long offered feature-rich dashboards for API exploration, analytics, and prompt iteration.

While there’s no confirmed release timeline, these upgrades point to a broader push by Anthropic to make Claude’s ecosystem more transparent and actionable for technical teams, potentially supporting both experimentation and compliance tracking as adoption grows.