Z AI has announced the open-source release of its GLM-4.6V series, a new generation of multimodal large language models. There are two models available: GLM-4.6V (106B), aimed at cloud and high-performance cluster environments, and GLM-4.6V-Flash (9B), designed for lightweight local deployment and low-latency applications. The models are available to the public, with weights accessible via HuggingFace and ModelScope, and can be integrated into applications using an OpenAI-compatible API. Users can interact with GLM-4.6V on the Z.ai platform or through the Zhipu Qingyan App.

GLM-4.6V Series is here🚀

— Z.ai (@Zai_org) December 8, 2025

- GLM-4.6V (106B): flagship vision-language model with 128K context

- GLM-4.6V-Flash (9B): ultra-fast, lightweight version for local and low-latency workloads

First-ever native Function Calling in the GLM vision model family

Weights:… pic.twitter.com/TAFwJS6uas

The GLM-4.6V models are capable of processing 128,000 tokens in a single context window, allowing them to handle lengthy and complex documents, images, and videos. Key features include native function calling, multimodal tool use, and context-aware reasoning across text and visual data. The models support direct image, screenshot, and document input, and can output structured, image-rich content. Compared to previous iterations, these models close the loop from perception to action, handle tool invocation natively, and use a large pretraining dataset for broad world knowledge. Early user reports highlight strong performance in:

- Document understanding

- Code generation from designs

- Video summarization

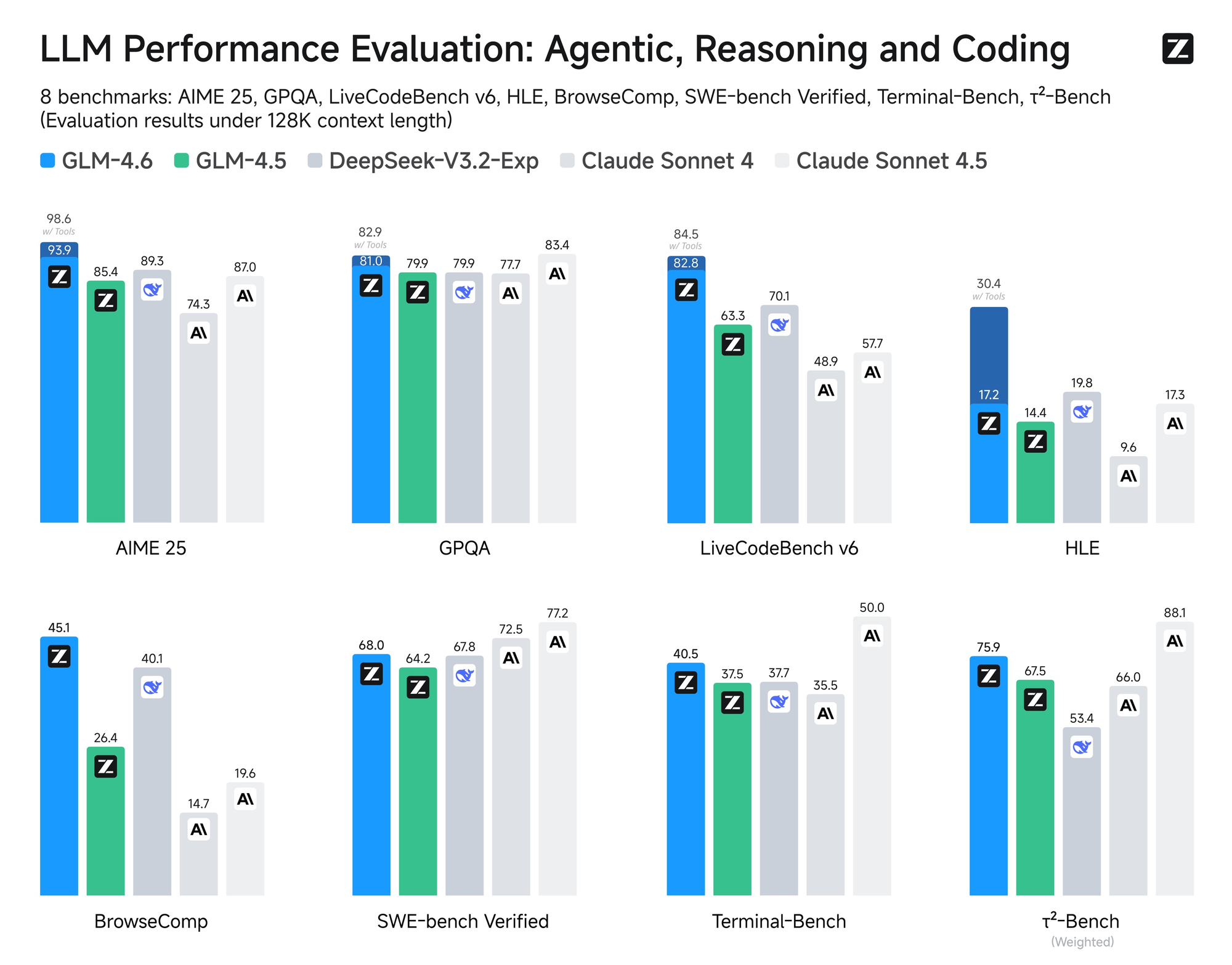

These capabilities place GLM-4.6V among top open-source models for multimodal reasoning.

Zhipu AI, the developer behind the GLM series, is recognized for advancing open-source large language models in China. With GLM-4.6V, the company targets enterprise, research, and development communities seeking advanced multimodal AI solutions that rival major global competitors in both capability and scale.