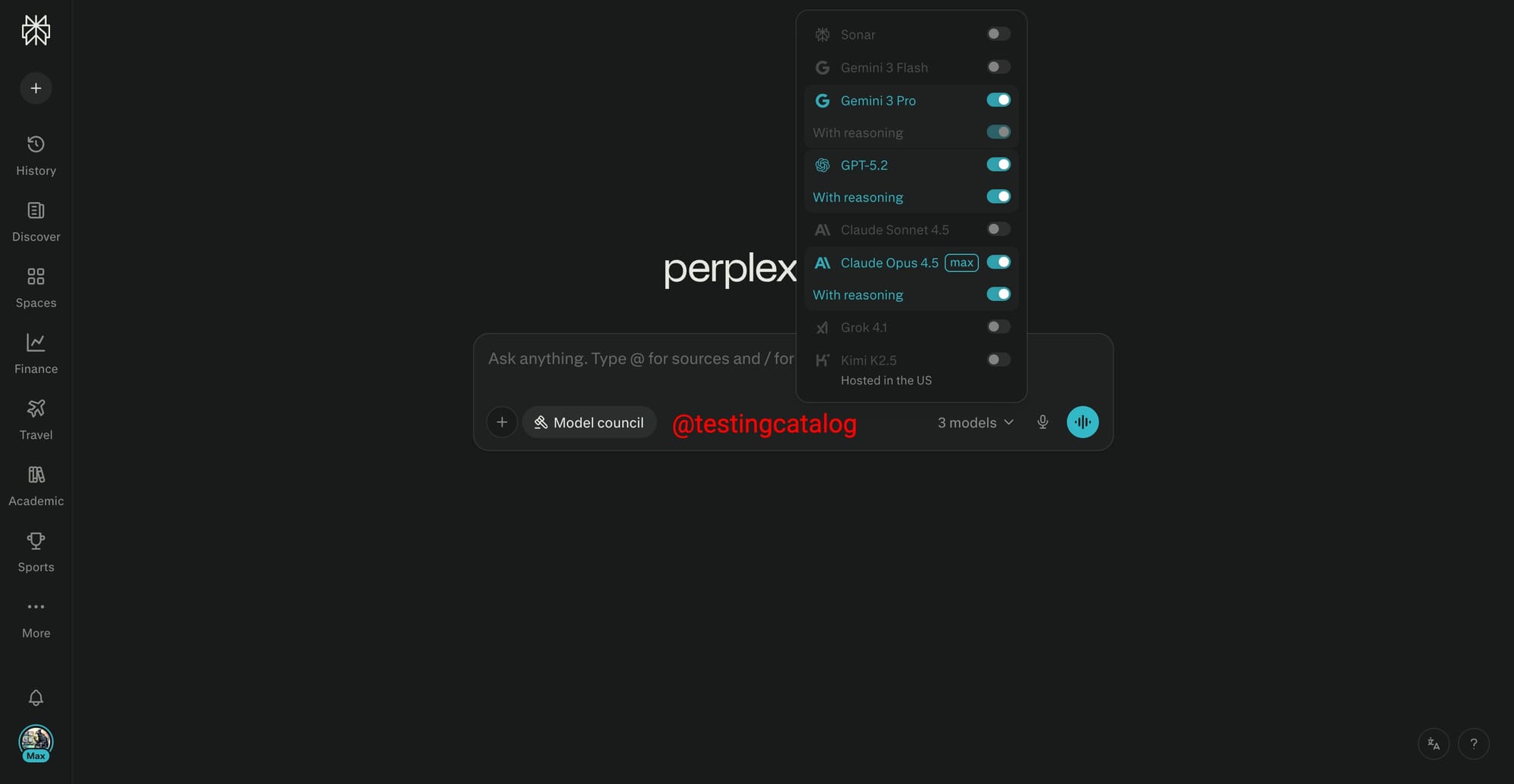

Perplexity recently announced Advanced Deep Research, but it looks like the team is also working on more tools behind the scenes. One of them is called Model Council. It’s labeled as “Max,” which suggests it will be limited to Max subscribers. The description says it lets you compare responses from multiple AI models. When enabled, the model selector appears to support choosing three models at once, specifically Gemini 3 Pro, GPT-5.2, and Claude Opus 4.5 with reasoning. Since these are currently among the strongest options, the feature hints at a system where Perplexity can put multiple models on the same task and potentially combine their outputs.

That approach matters because multi-model systems have already shown strong results in benchmarks like ARC-AGI. In past submissions, including Poetiq’s entry last year and another one submitted this year, multi-model setups have outperformed many single-model runs.

A new 72% achievement submission for ARC-AGI-2. So far, it is the second multi-model system that outperformed single-model solutions.

— TestingCatalog News 🗞 (@testingcatalog) February 3, 2026

"It runs the same task through GPT-5.2, Gemini-3, and Claude Opus 4.5 in parallel."

We need new benchmarks 👀 https://t.co/Tkmdyg8m4v pic.twitter.com/SoJnnjh6mL

If Perplexity turns this kind of orchestration into a product feature, it could stand out from competitors who tend to prioritize their own models by default. The open question is how well it performs in real usage.
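To make the idea concrete, here is a minimal sketch of what "same task, several models in parallel, then combine" could look like. Nothing here reflects Perplexity's actual implementation or any ARC-AGI submission: the model calls are hypothetical stubs (`make_stub`), the names `run_council` and `majority_answer` are made up for illustration, and simple majority voting is just one assumed way to combine outputs.

```python
import asyncio
from collections import Counter
from typing import Awaitable, Callable, Dict, Optional

# A "model" is any async function that maps a prompt to an answer string.
ModelFn = Callable[[str], Awaitable[str]]


def make_stub(answer: str, delay: float) -> ModelFn:
    """Hypothetical stand-in for a real model API call."""
    async def call(prompt: str) -> str:
        await asyncio.sleep(delay)  # simulate network/model latency
        return answer
    return call


async def run_council(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect the answers."""
    names = list(models)
    answers = await asyncio.gather(*(models[name](prompt) for name in names))
    return dict(zip(names, answers))


def majority_answer(responses: Dict[str, str]) -> Optional[str]:
    """Return the answer given by more than half of the models, if there is one."""
    if not responses:
        return None
    answer, votes = Counter(responses.values()).most_common(1)[0]
    return answer if votes > len(responses) / 2 else None


if __name__ == "__main__":
    # Placeholder names; a real setup would wrap actual provider SDK calls.
    council = {
        "model_a": make_stub("42", 0.02),
        "model_b": make_stub("42", 0.01),
        "model_c": make_stub("41", 0.03),
    }
    responses = asyncio.run(run_council("What is 6 * 7?", council))
    print(responses)
    print(majority_answer(responses))
```

A product feature would likely go further, for example showing all three responses side by side for comparison or using another model to synthesize them, but the fan-out step would look broadly similar.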

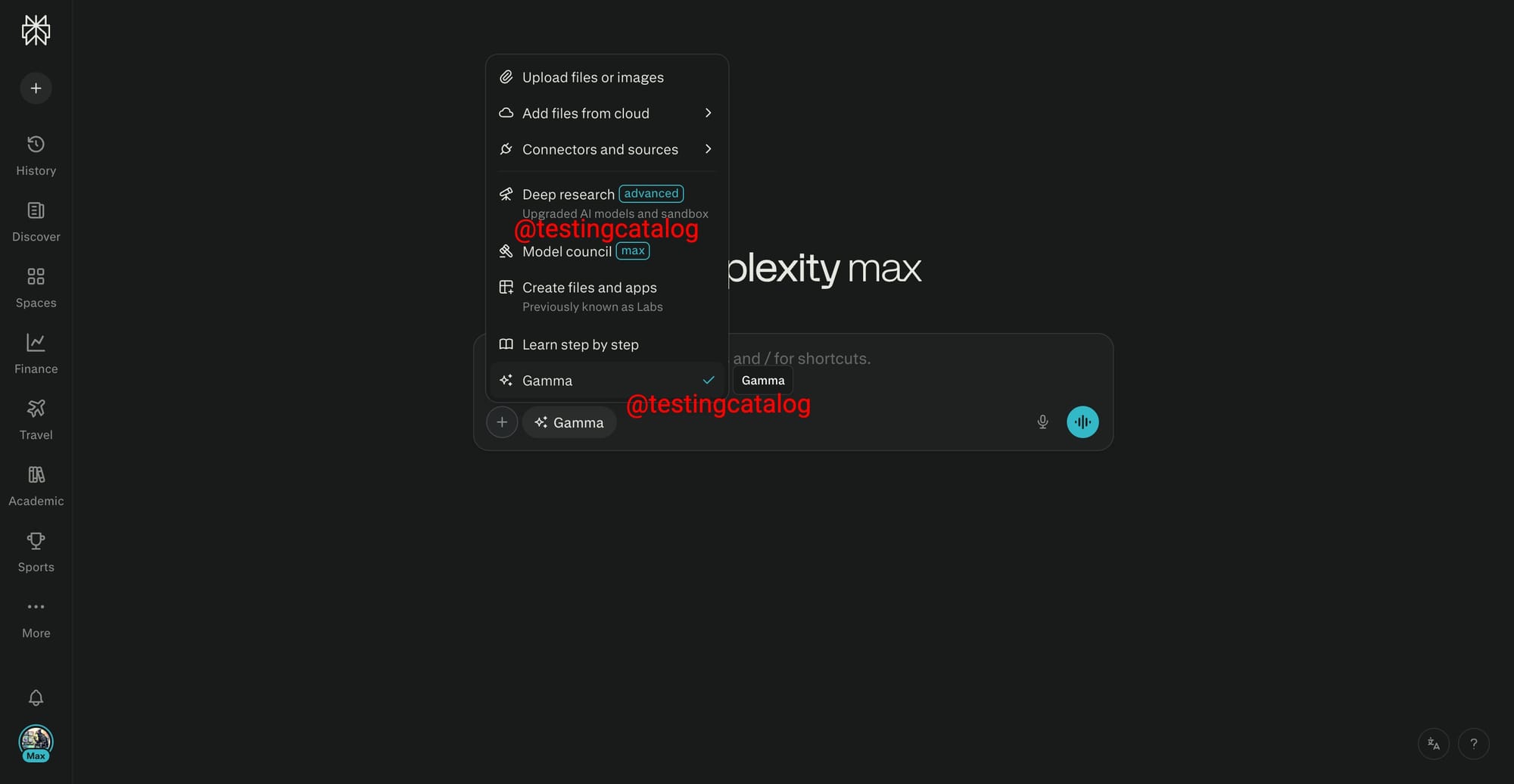

There’s also another mode called Gamma. It doesn’t include any description, only an AI-style icon. What stands out is that it’s referenced in the code as ASI, short for “artificial superintelligence.” Beyond the names Gamma and ASI, there’s nothing else to go on yet, so its purpose is unclear. Still, if the name reflects Perplexity’s direction, the company may be setting up a higher-tier mode aimed at more ambitious capabilities.