Mistral Large 2 is the latest generation of Mistral AI's flagship model, designed to push the boundaries of cost efficiency, speed, and performance. It has a 128k-token context window and supports dozens of natural languages, including French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese, and Korean, along with more than 80 programming languages. At 123 billion parameters, Mistral Large 2 is designed for single-node inference in long-context applications.

Key Features

- 128k context window

- Supports dozens of languages

- 80+ programming languages

- 123 billion parameters

- Designed for single-node inference in long-context applications

- Enhanced function calling and retrieval skills

- Trained to proficiently execute both parallel and sequential function calls

- Available under the Mistral Research License for research and non-commercial use; commercial self-deployment requires a Mistral Commercial License
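To illustrate what "parallel" function calling means on the client side, here is a minimal sketch in which the model requests several independent tool calls in a single assistant turn, and the client executes all of them before replying. (In sequential calling, by contrast, the model waits for one result before issuing the next call.) The sketch assumes an OpenAI-style `tool_calls` structure, where each call carries an `id` and a `function` object with a `name` and JSON-encoded `arguments`. The tools `get_weather` and `get_time` and their return values are hypothetical, and no network request is made.

```python
import json
from typing import Any, Callable

# Hypothetical local tools; names and return values are illustrative only.
def get_weather(city: str) -> dict:
    return {"city": city, "temp_c": 21}

def get_time(city: str) -> dict:
    return {"city": city, "time": "14:00"}

TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather, "get_time": get_time}

def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute every tool call from one assistant turn and build 'tool' messages.

    Assumes an OpenAI-style shape: {"id": ..., "function": {"name": ..., "arguments": "<json>"}}.
    """
    messages = []
    for call in tool_calls:
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        result = TOOLS[name](**args)
        messages.append({
            "role": "tool",
            "tool_call_id": call["id"],  # links each result back to its request
            "name": name,
            "content": json.dumps(result),
        })
    return messages

# A parallel turn: two independent calls requested at once (hypothetical model output).
parallel_turn = [
    {"id": "call_1", "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "function": {"name": "get_time", "arguments": '{"city": "Paris"}'}},
]
tool_messages = run_tool_calls(parallel_turn)
```

The resulting `tool_messages` would be appended to the conversation and sent back to the model in the next request. A sequential workflow would repeat this loop across turns, one call at a time.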

The Company Behind It: Mistral AI

Mistral AI is a Paris-based company that develops large language models for a range of applications. With the release of Mistral Large 2, the company is consolidating its offering on la Plateforme around two general-purpose models, Mistral Nemo and Mistral Large, and two specialist models, Codestral and Embed. Mistral AI is also partnering with leading cloud service providers to bring its models to a global audience.

Release Date and Availability

Mistral Large 2 was released on July 24, 2024, and is available on la Plateforme under the model name mistral-large-2407. It is also offered as a managed API on Google Cloud Vertex AI, as well as on Azure AI Studio, Amazon Bedrock, and IBM watsonx.ai.
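As a rough illustration of selecting the model by name, the following sketch builds (but does not send) a chat request for mistral-large-2407 using only the Python standard library. It assumes la Plateforme's chat completions endpoint at `https://api.mistral.ai/v1/chat/completions`, with bearer-token authentication and an API key read from a `MISTRAL_API_KEY` environment variable. Check the current API reference before relying on these details. The helper name `build_request` is hypothetical.

```python
import json
import os
import urllib.request

# Assumed endpoint for la Plateforme's chat completions API.
API_URL = "https://api.mistral.ai/v1/chat/completions"

def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Hypothetical helper: build a POST request targeting mistral-large-2407."""
    body = {
        "model": "mistral-large-2407",  # model name from the release announcement
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

# A placeholder key keeps the example runnable offline; nothing is sent here.
req = build_request("Translate 'bonjour' into English.",
                    os.environ.get("MISTRAL_API_KEY", "dummy-key"))
# To actually send it: urllib.request.urlopen(req) with a valid key.
```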

Target Audience

The target audience for Mistral Large 2 includes researchers, developers, and businesses that want to use AI models for tasks such as translation, code generation, and reasoning.

Technical Specifications and Capabilities

Mistral Large 2's 128k context window makes it suitable for long-context applications, and it supports dozens of natural languages. At 123 billion parameters, it is sized to run with high throughput on a single node. The model is also trained to acknowledge when it cannot find a solution or lacks sufficient information to give a confident answer.
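A back-of-the-envelope calculation shows why 123 billion parameters is compatible with single-node inference. The sketch below assumes a node of eight 80 GB accelerators, a common configuration that the announcement does not specify. It counts only the memory needed for the weights at a few common precisions. KV cache and activations, which grow with context length and become significant at 128k tokens, must fit in whatever memory remains.

```python
PARAMS = 123e9  # parameter count stated for Mistral Large 2

# Bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}

# Assumption: one node with 8 accelerators of 80 GB each (decimal GB).
NODE_GB = 8 * 80

def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9

for fmt, nbytes in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(PARAMS, nbytes)
    headroom = NODE_GB - gb  # left over for KV cache, activations, runtime overhead
    print(f"{fmt}: {gb:.0f} GB of weights, {headroom:.0f} GB headroom on a {NODE_GB} GB node")
```

In bf16, for example, the weights take about 246 GB. That leaves roughly 394 GB on the assumed node for serving long contexts.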

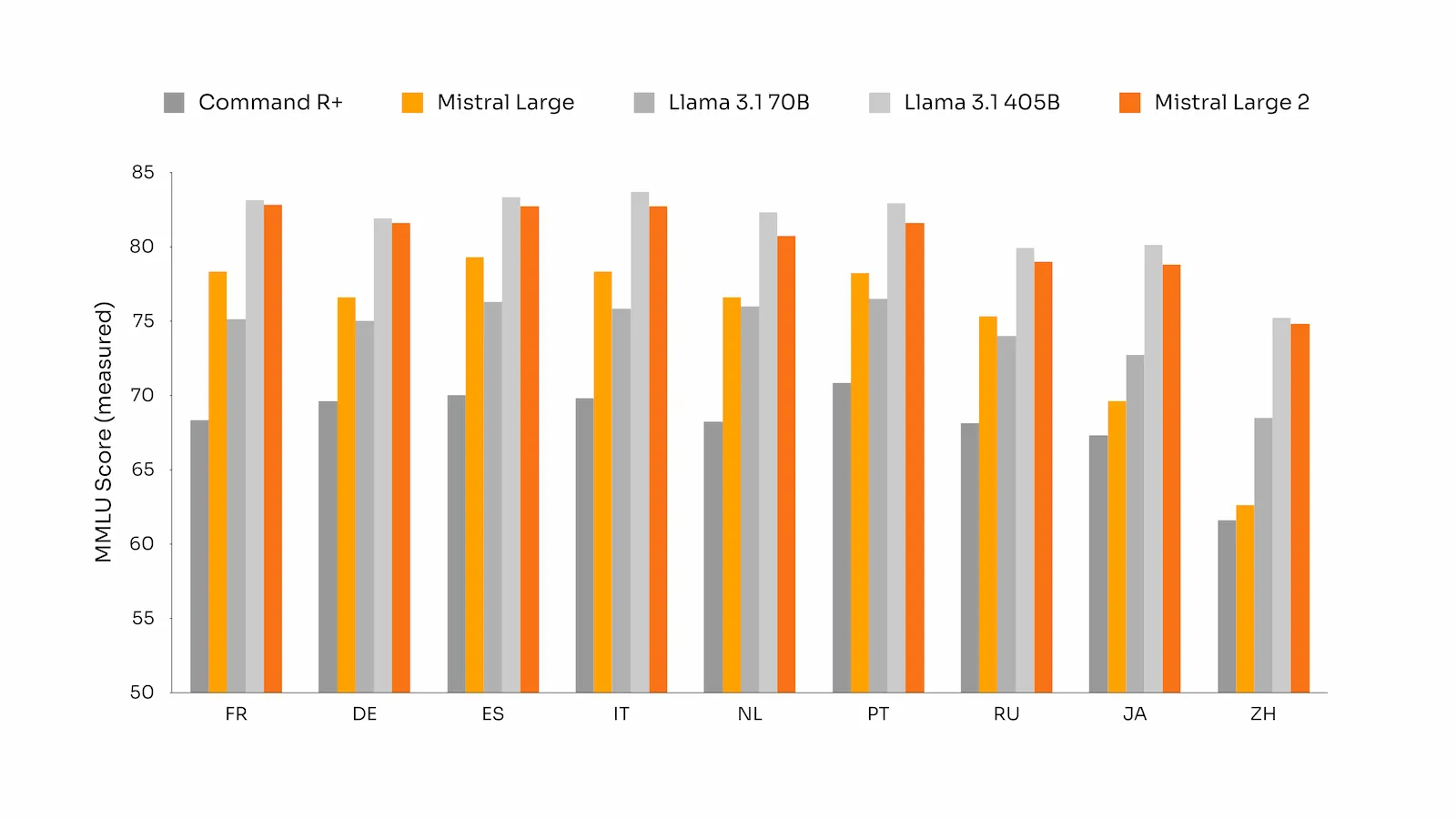

Comparison to Previous Versions and Competitor Offerings

On code generation and reasoning, Mistral Large 2 significantly outperforms its predecessor, Mistral Large, and performs on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B. Its reasoning capabilities have been enhanced, and it has been trained to be more cautious and discerning in its responses.

Potential Impact on Users and the Broader Industry

Mistral Large 2 could have a significant impact on applications such as translation, code generation, and reasoning-heavy tasks. Its enhanced function calling and retrieval skills make it well suited to complex business applications. Its availability on la Plateforme and through major cloud service providers should also make it accessible to a global audience.

Reactions from Industry Experts, Competitors, or Early Users

The announcement blog post does not cite reactions from industry experts, competitors, or early users. Instead, it focuses on the model's benchmark performance and its potential applications.

Brief History of the Company's Related Products or Features

Mistral AI's earlier releases include the original Mistral Large, the code model Codestral, and the embedding model Mistral Embed. Together with the general-purpose Mistral Nemo, these form the core of the consolidated la Plateforme lineup described above.

Controversies, Concerns, or Potential Challenges

The announcement does not discuss controversies or concerns. Licensing does impose one practical constraint: under the Mistral Research License, self-deployed use of the model is limited to research and non-commercial purposes.