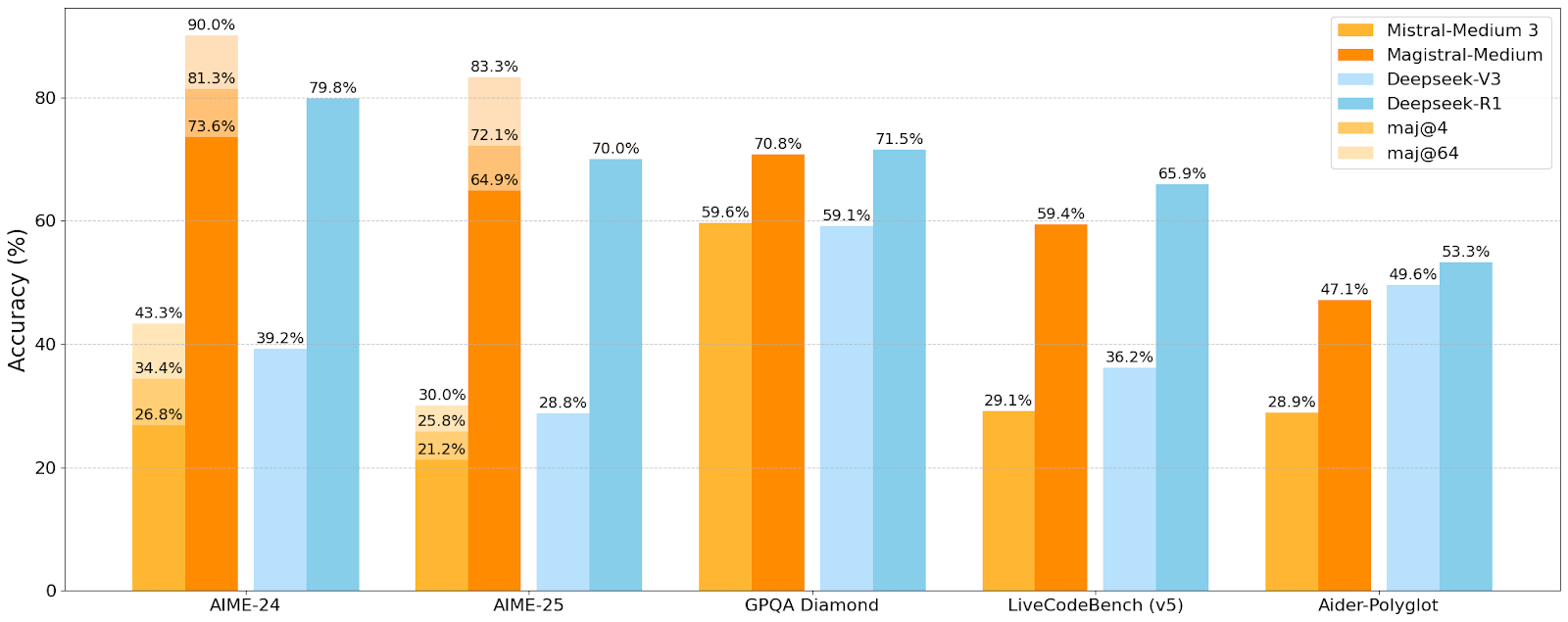

Mistral AI has introduced a new family of models under the “Magistral” name, officially unveiled on June 10, 2025. The models focus on strong reasoning capabilities and come in two versions: Magistral Medium and Magistral Small. Magistral Medium is a proprietary model aimed at delivering state-of-the-art reasoning performance at a smaller size and lower cost than competing models. Magistral Small will be released as an open-source version for the broader community, though its final name may still change.

BREAKING 🚨: Mistral AI released 2 new models of the Magistral family with reasoning capabilities.

What's new:

- Magistral Small: An open-weight version
- Magistral Medium: An enterprise version in preview
- A new Le Chat Think button
- Flash Reasoning 10x the speed https://t.co/DpV4YmuX7P pic.twitter.com/ENLiq1zTPS

— TestingCatalog News 🗞 (@testingcatalog) June 10, 2025

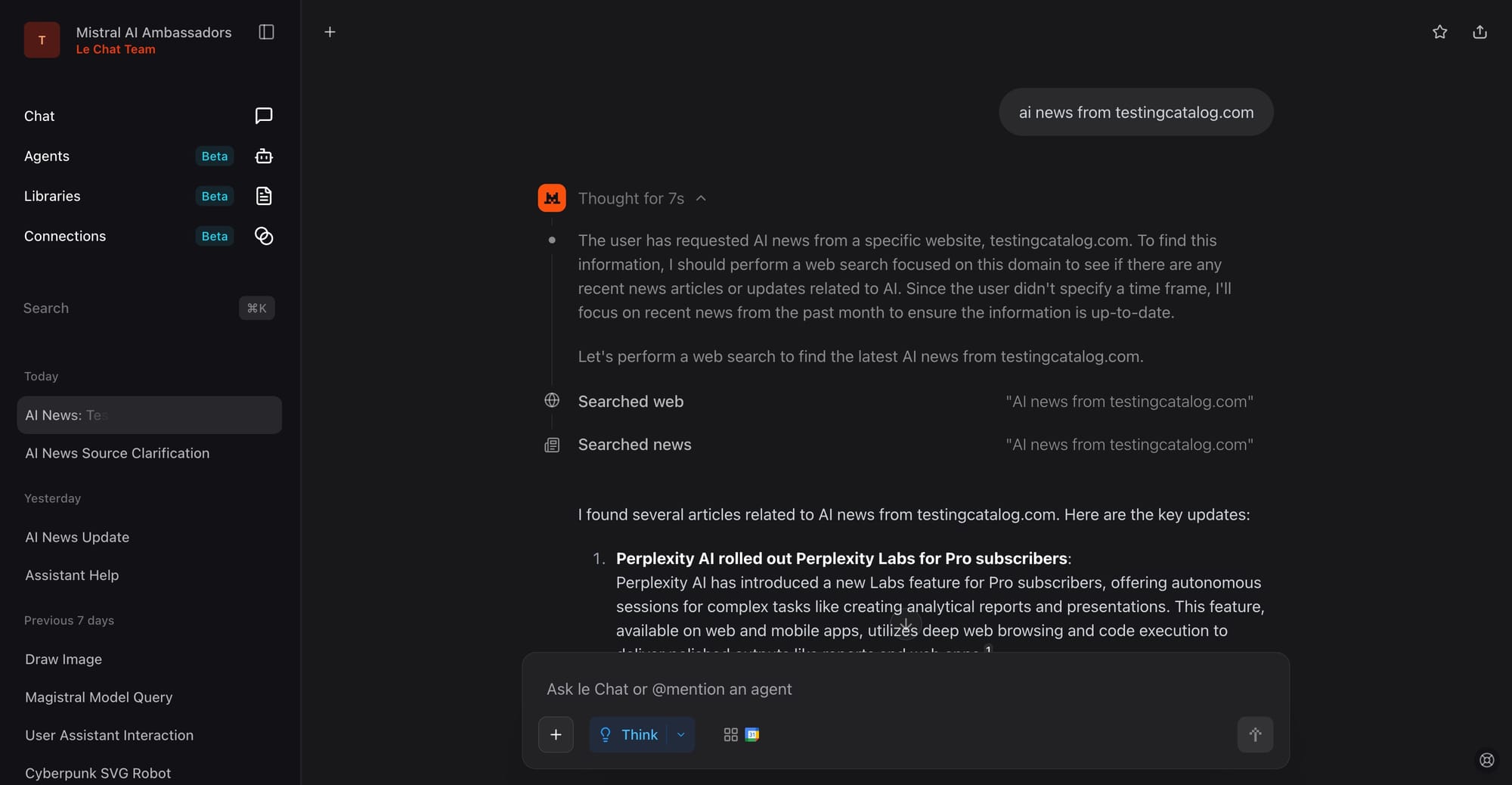

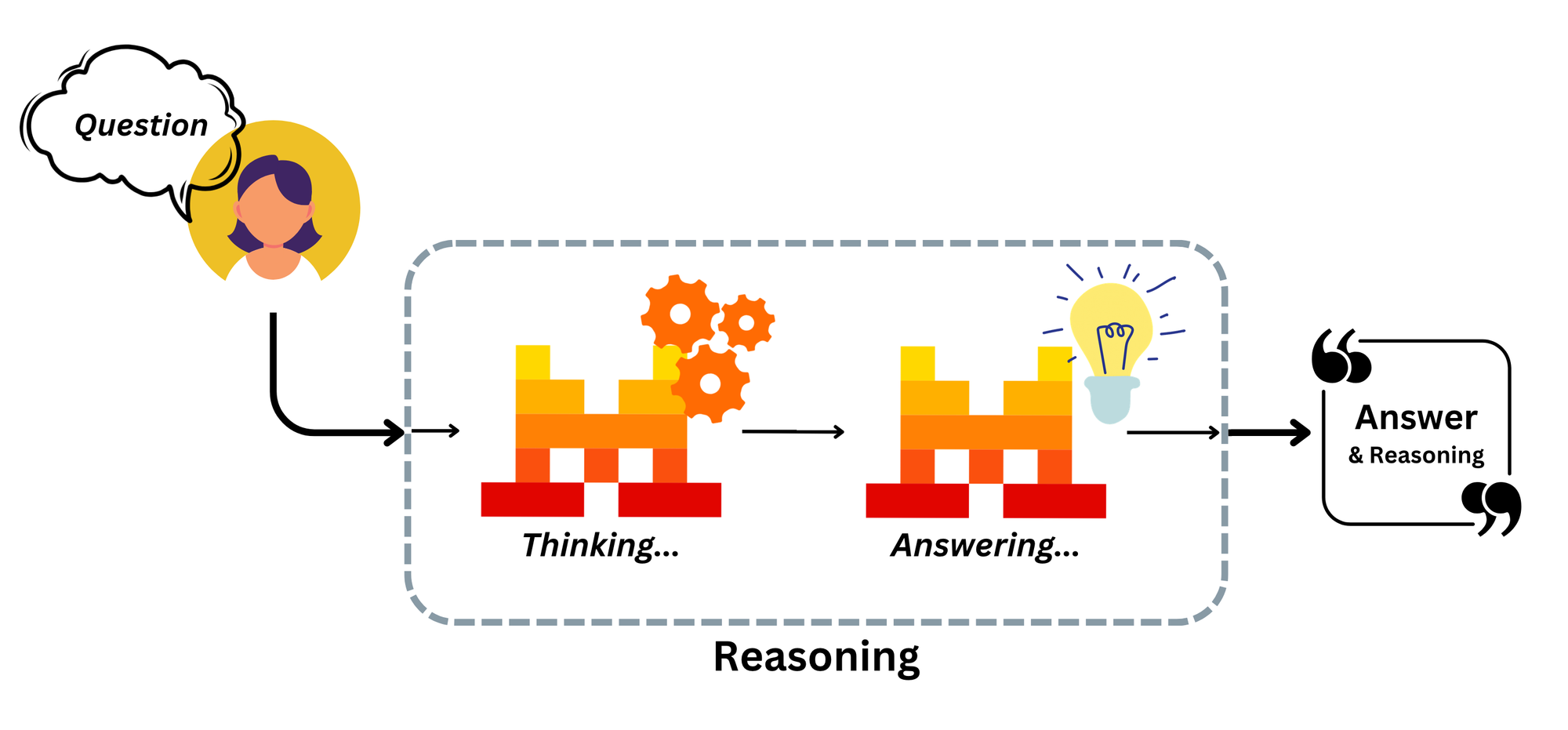

Magistral Medium has already appeared in internal references (magistral-medium-2506) as the default model for reasoning tasks. The public Le Chat interface now includes a new “Think” button, which points to the model's integration there and to support for transparent, step-by-step reasoning. Behind the scenes, traces in the configuration suggest links to accelerated answer modes, anonymous inference, and speculative decoding, indicating that performance and privacy are design goals.

Mistral, known for its open model strategy and efficient transformers like Mistral 7B and Mixtral, is now making a direct move into reasoning-focused AI. Magistral Medium positions itself against OpenAI’s o3 and Anthropic’s Claude Opus 4, both of which have recently emphasized complex reasoning and tool integration. With this dual-track release strategy, Mistral addresses both enterprise-grade applications and open research needs, keeping flexibility at the center of its roadmap.

The models are rolling out gradually across Le Chat and select APIs, with broader access expected to follow.