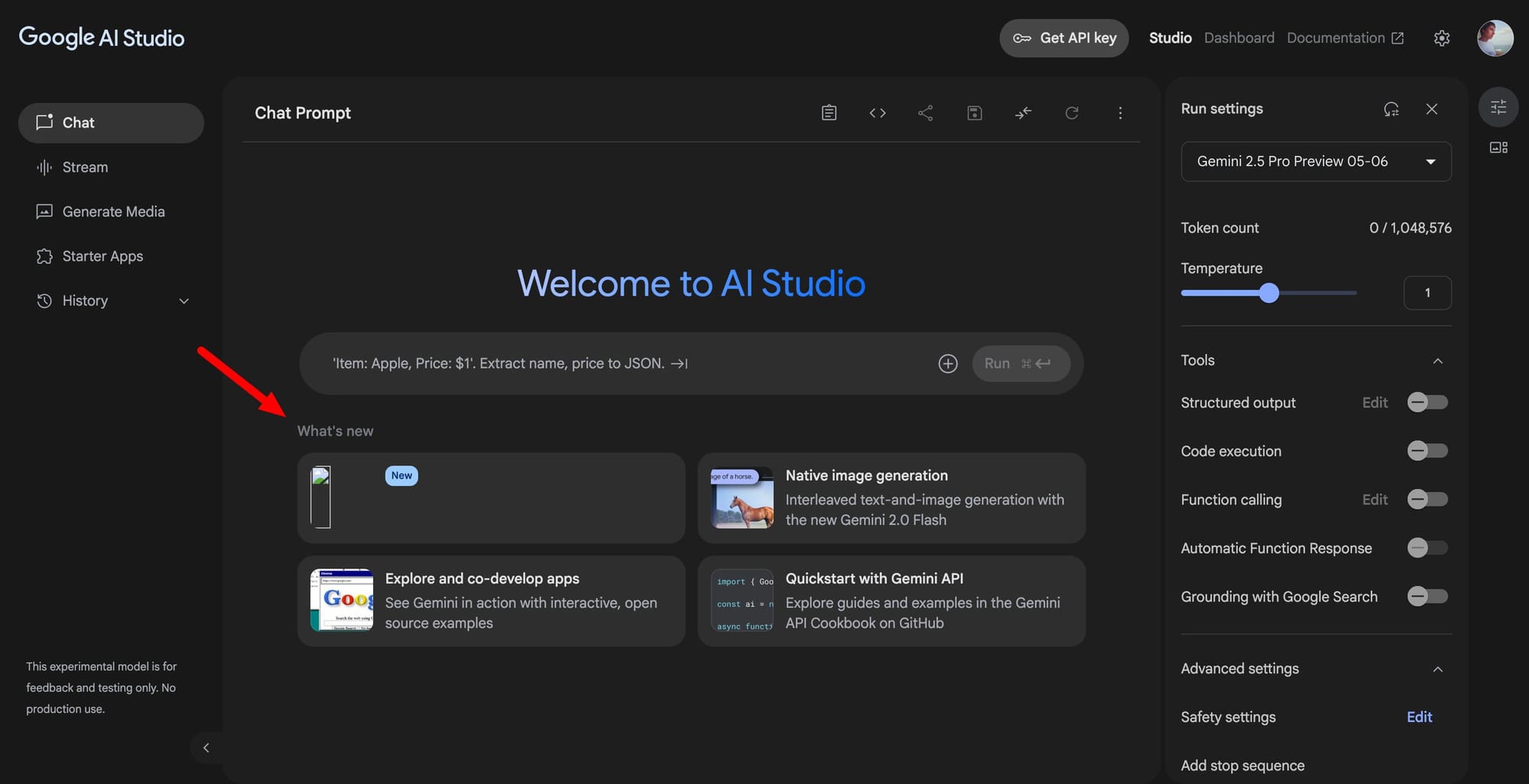

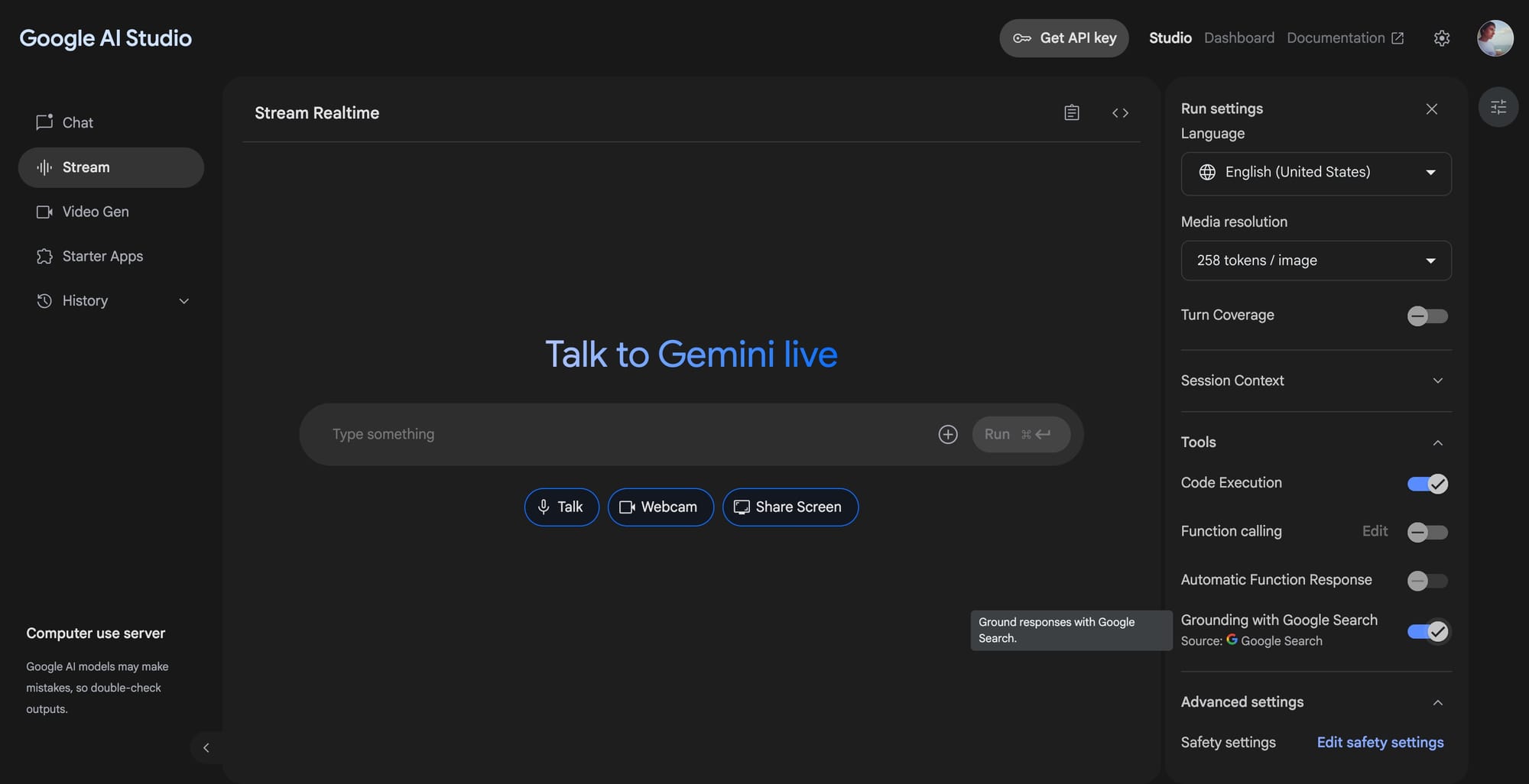

Over the weekend, Google quietly updated AI Studio on the web, adding an unexplained entry to the What’s New section that carries only a generic “new” label. The tile has no description, but clicking it redirects users to the Stream Realtime section, which suggests the update is tied to Gemini’s live multimodal processing. This area currently runs on Gemini 2.0 Flash, and speculation points toward an imminent upgrade to Gemini 2.5 Flash. If true, this would be a significant step forward for Gemini’s ability to process real-time image, video, and possibly audio inputs. Such a model update could be announced during Google I/O, where broader model advancements are expected, including new versions of Veo and Imagen.

The unlabeled placement suggests the change is being staged quietly, potentially awaiting a formal unveiling during the keynote. Although the tile does not yet reveal its purpose, its link to the live-streaming multimodal interface implies Google is expanding its capabilities for real-time media analysis and generation, possibly paving the way for end-to-end input-output pipelines that run from visual input to spoken responses.

A separate backend change spotted by early users allows web search and code execution to be enabled at the same time; previously, users had to choose one or the other. Though undocumented, this adjustment hints at an evolving agent stack in which tasks can be handled with greater autonomy and can switch between tools as needed.

Should we build coding agents into Google AI Studio?

— Logan Kilpatrick (@OfficialLoganK) May 17, 2025

Adding to the speculation is a broader conversation around agentic workflows. Logan Kilpatrick recently polled developers on X about building coding agents into AI Studio, comparable to OpenAI’s Codex agent inside ChatGPT. The poll came shortly after reports that Google is developing its own agent-like system, possibly aimed at automated software development tasks. There is currently no confirmation that AI Studio will mirror OpenAI’s pull request–level automation, but Google’s push to pair model capabilities with deployment via Cloud Run suggests it is preparing a seamless coding and deployment flow. Whether these strands will converge at I/O remains to be seen.