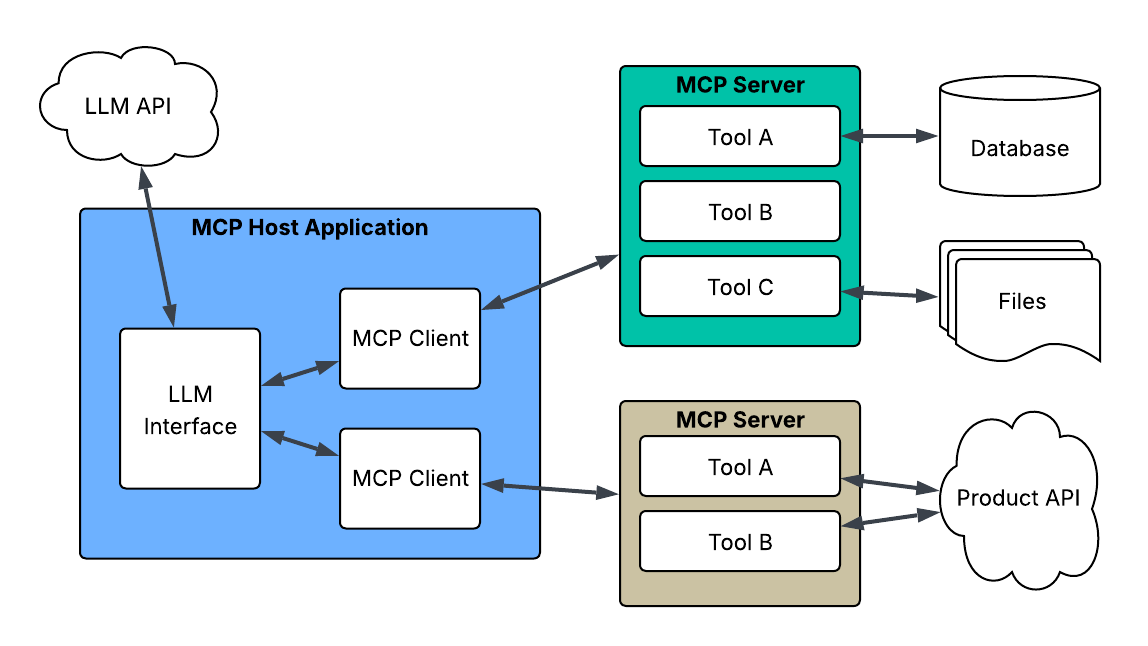

OpenAI appears to be laying the groundwork for a new phase of ChatGPT extensibility built on Anthropic’s Model Context Protocol (MCP), an open protocol that standardizes how external data sources and tools connect to large language models. MCP gained early traction in Claude’s ecosystem, particularly for enterprise use cases where teams pipe in structured context from third-party tools. Recent findings now suggest that OpenAI has begun testing a “custom connection” feature behind a gated flag in ChatGPT, hinting at a similar direction.

The new ChatGPT web app version includes an option to add custom connectors based on the Model Context Protocol (MCP) pic.twitter.com/lVyCLYyFV4

— Tibor Blaho (@btibor91) May 13, 2025

This experimental capability would allow users to set up bespoke integrations, potentially linking services like Zapier, Notion, or internal APIs directly into the ChatGPT workflow. If adopted widely, it could benefit enterprise teams, developers, and advanced individual users who need persistent context across sessions or access to dynamic external data. Which plans will receive the feature remains unclear. Anthropic restricts remote MCP servers to higher tiers (they are not available on Claude Pro, for example), and OpenAI may impose a similar limit, reserving the feature for Team and Enterprise subscribers rather than the Plus or Pro tiers.

people love MCP and we are excited to add support across our products. available today in the agents SDK and support for chatgpt desktop app + responses api coming soon!

— Sam Altman (@sama) March 26, 2025

The presence of this toggle suggests that OpenAI is actively testing infrastructure to support connector-style extensions, possibly as part of a broader move toward agent-based interactions. While OpenAI has previously hinted at more robust workflows and integrations, this is one of the first technical clues tying ChatGPT directly to MCP. The option was rolled back shortly after it was discovered, which points to either an internal test or the premature exposure of an upcoming release. Given the company’s recent push to position ChatGPT as a workplace assistant, the step would fit its aim of embedding the AI deeper into productivity ecosystems.