Adobe has put its generative-AI suite in your pocket with the Firefly app, released globally on June 17, 2025, for iOS and Android. The app lets anyone create images or short clips from text, refine them with generative fill or expand tools, and sync results to ongoing Creative Cloud projects.

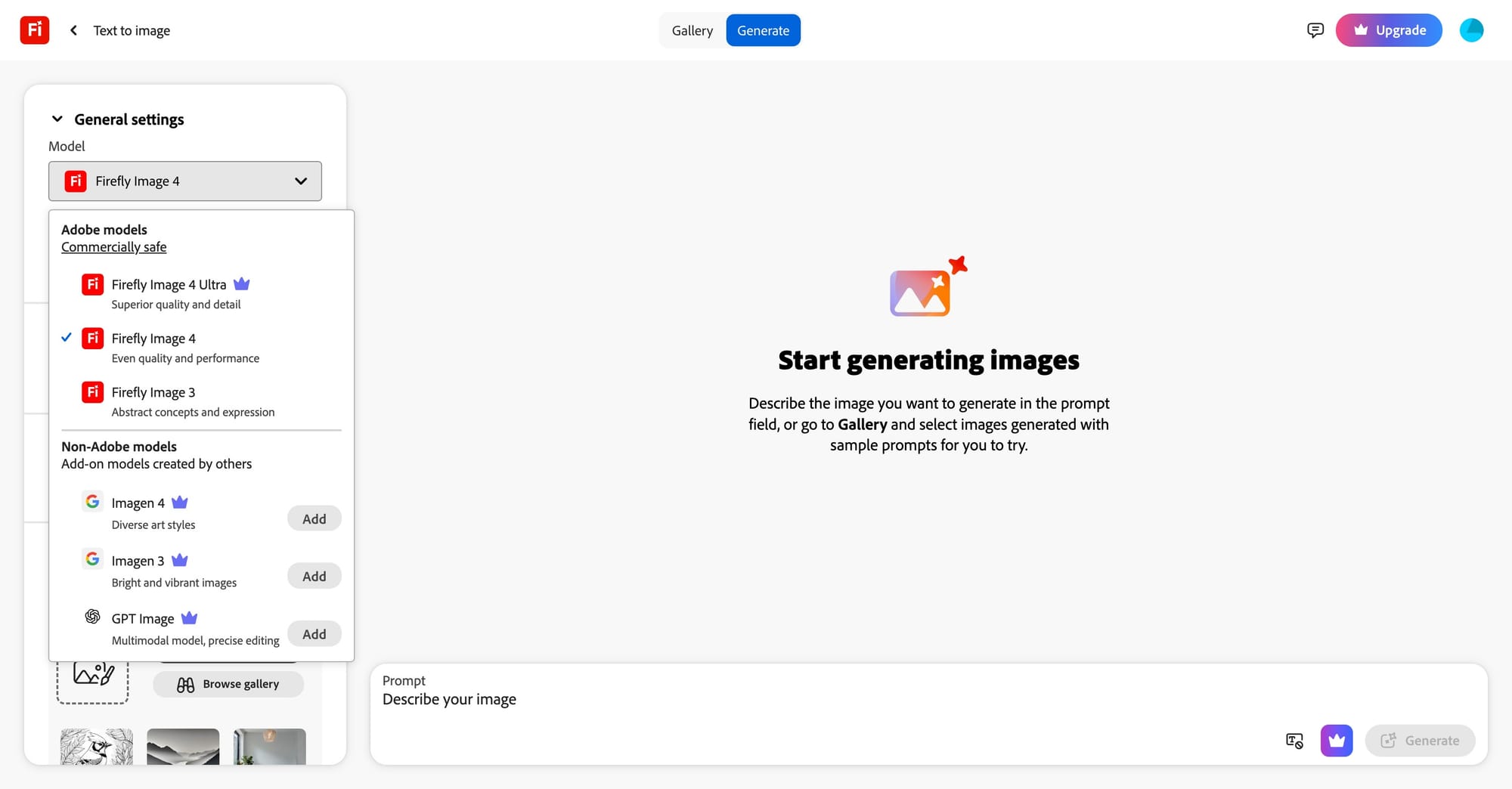

> I'm happiest than ever to tell you that Adobe partnered with different AI model makers. Our partners and models you can use in Adobe Firefly (Boards, Mobile, etc.)
>
> - Google DeepMind (Veo3, Veo2, Imagen 4)
> - Flux Kontext, Flux Pro, etc.
> - Ideogram 3.0
> - Pika
> - Luma
> - Runway
> - GPT
>
> 🎉 pic.twitter.com/cQ4CJ4AY2V
>
> — Kris Kashtanova (@icreatelife) June 17, 2025

Within a single canvas, users can switch between Adobe's own Image 3 and Video 1 models or choose partner engines from OpenAI, Google (Imagen 4 and Veo 3), Ideogram, Luma, Pika, and Runway. That is a broader palette than the previous web beta offered. Prompt history, layer-based edits, and Firefly Boards support step-by-step concept development across devices. Free subscribers receive unlimited basic renders, while higher-resolution output and third-party models cost additional credits under the same $10-per-month plan as the web version.

Until now, Firefly existed as a browser experience and as discrete tools such as Photoshop's generative fill. The dedicated app unifies those abilities and adds text-to-video, putting it head-to-head with rivals such as Midjourney and Runway while retaining Adobe's commercially safe training policy for enterprise use. Early testers highlight the fast hand-off to Premiere Pro and the convenience of drafting mood reels on location.

Firefly runs on Adobe's Sensei GenAI platform, which the company credits with generating more than 24 billion assets to date and with driving double-digit Creative Cloud revenue growth this quarter. The mobile launch aims to attract first-time creators while deepening the value for existing subscribers, reinforcing Adobe's strategy of integrating generative AI across its core portfolio.